The End of Science Again

Posted by Bill Storage in History of Science, Philosophy of Science on October 24, 2025

Dad says enough of this biblical exegesis and hermeneutics nonsense. He wants more science and history of science for iconoclasts and Kuhnians. I said that if prophetic exegesis was good enough for Isaac Newton – who spent most of his writing life on it – it’s good enough for me. But to keep the family together around the spectroscope, here’s another look at what’s gone terribly wrong with institutional science.

It’s been thirty years since John Horgan wrote The End of Science, arguing that fundamental discovery was nearing its end. He may have overstated the case, but his diagnosis of scientific fatigue struck a nerve. Horgan claimed that major insights – quantum mechanics, relativity, the big bang, evolution, the double helix – had already given us a comprehensive map of reality unlikely to change much. Science, he said, had become a victim of its own success, entering a phase of permanent normal science, to borrow Thomas Kuhn’s term. Future research, in his view, would merely refine existing paradigms, pose unanswerable questions, or spin speculative theories with no empirical anchor.

Horgan still stands by that thesis. He notes the absence of paradigm-shifting revolutions and a decline in disruptive research. A 2023 Nature study analyzed forty-five million papers and nearly four million patents, finding a sharp drop in genuinely groundbreaking work since the mid-twentieth century. Research increasingly consolidates what’s known rather than breaking new ground. Horgan also raises the philosophical point that some puzzles may simply exceed our cognitive reach – a concern with deep historical roots. Consider consciousness, quantum interpretation, or other problems that might mark the brain’s limits. Perhaps AI will push those limits outward.

Students of the history of science will think of Auguste Comte’s famous claim that we’d never know the composition of the stars. He wasn’t stupid, just cautious. Epistemic humility. He knew collecting samples was impossible. What he couldn’t foresee was spectroscopy, where the wavelengths of light a star emits reveal the quantum behavior of its electrons. Comte and his peers could never have imagined that; it was data that forced quantum mechanics upon us.

The same confidence of finality carried into the next generation of physics. In 1874, Philipp von Jolly reportedly advised young Max Planck not to pursue physics, since it was “virtually a finished subject,” with only small refinements left in measurement. That position was understandable: Maxwell’s equations unified electromagnetism, thermodynamics was triumphant, and the Newtonian worldview seemed complete. Only a few inconvenient anomalies remained.

Albert Michelson, in 1894, echoed the sentiment. “Most of the grand underlying principles have been firmly established,” he said. Physics had unified light, electricity, magnetism, and heat; the periodic table was filled in; the atom looked tidy. The remaining puzzles – Mercury’s orbit, blackbody radiation – seemed minor, the way dark matter does to some of us now. He was right in one sense: he had interpreted his world as coherently as possible with the evidence he had. Or had he?

Michelson’s remark came after his own 1887 experiment with Morley – the one that failed to detect Earth’s motion through the ether and, in hindsight, cracked the door to relativity. The irony is enormous. He had already performed the experiment that revealed something was deeply wrong, yet he didn’t see it that way. The null result struck him as a puzzle within the old paradigm, not a death blow to it. The idea that the speed of light might be constant for all observers, or that time and space themselves might bend, was too far outside the late-Victorian imagination. Lorentz, FitzGerald, and others kept right on patching the luminiferous ether.

Logicians will recognize the case for pessimistic meta-induction here: past prognosticators have always been wrong about the future, and inductive reasoning says they will be wrong again. Horgan may think his case is different, but I can’t see it. He was partially right, but overconfident about completeness – treating current theories as final, just as Comte, von Jolly, and Michelson once did.

Where Horgan was most right – territory he barely touched – is in seeing that institutions now ensure his prediction. Science stagnates not for lack of mystery but because its structures reward safety over risk. Peer review, grant culture, and the fetish for incrementalism make Kuhnian normal science permanent. Scientific American canned Horgan soon after The End of Science appeared. By the mid-90s, the magazine had already crossed the event horizon of integrity.

While researching his book, Horgan interviewed Edward Witten, already the central figure in the string-theory marketing machine. Witten rejected Kuhn’s model of revolutions, preferring a vision of seamless theoretical progress. No surprise. Horgan seemed wary of Witten’s confidence. He sensed that Witten’s serene belief in an ever-tightening net of theory was itself a symptom of closure.

From a Feyerabendian perspective, the irony is perfect. Paul Feyerabend would say that when a scientific culture begins to prize formal coherence, elegance, and mathematical completeness over empirical confrontation, it stops being revolutionary. In that sense, the Witten attitude itself initiates the decline of discovery.

String theory is the perfect case study: an extraordinary mathematical construct that’s absorbed immense intellectual capital without yielding a falsifiable prediction. To a cynic (or realist), it looks like a priesthood refining its liturgy. The Feyerabendian critique would be that modern science has been rationalized to death, more concerned with internal consistency and social prestige than with the rude encounter between theory and world. Witten’s world has continually expanded a body of coherent claims – they hold together, internally consistent. But science does not run on a coherence model of truth. It demands correspondence. (Coherence vs. correspondence models of truth was a big topic in analytic philosophy in the last century.) By correspondence theory of truth, we mean that theories must survive the test against nature. The creation of coherent ideas means nothing without it. Experience trumps theory, always – the scientific revolution in a nutshell.

Horgan didn’t say – though he should have – that Witten’s aesthetic of mathematical beauty has institutionalized epistemic stasis. The problem isn’t that science has run out of mysteries, as Horgan proposed, but that its practitioners have become too self-conscious, too invested in their architectures to risk tearing them down. Galileo rolls over.

Horgan sensed the paradox but never made it central. His End of Science was sociological and cognitive; a Feyerabendian would call it ideological. Science has become the very orthodoxy it once subverted.

From Aqueducts to Algorithms: The Cost of Consensus

Posted by Bill Storage in History of Science on July 9, 2025

The Scientific Revolution, we’re taught, began in the 17th century – a European eruption of testable theories, mathematical modeling, and empirical inquiry from Copernicus to Newton. Newton was the first scientist, or rather, the last magician, many historians say. That period undeniably transformed our understanding of nature.

Historians increasingly question whether a discrete “scientific revolution” ever happened. Floris Cohen called the label a straitjacket. It’s too simplistic to explain why modern science, defined as the pursuit of predictive, testable knowledge by way of theory and observation, emerged when and where it did. The search for “why then?” leads to Protestantism, capitalism, printing, rediscovered Greek texts, scholasticism, even weather. That’s mostly just post hoc theorizing.

Still, science clearly gained unprecedented momentum in early modern Europe. Why there? Why then? Good questions, but what I wonder is why not earlier – even much earlier.

Europe had intellectual fireworks throughout the medieval period. In 1320, Jean Buridan nearly articulated inertia. His anticipation of Newton is uncanny, three centuries earlier:

“When a mover sets a body in motion he implants into it a certain impetus, that is, a certain force enabling a body to move in the direction in which the mover starts it, be it upwards, downwards, sidewards, or in a circle. The implanted impetus increases in the same ratio as the velocity. It is because of this impetus that a stone moves on after the thrower has ceased moving it. But because of the resistance of the air (and also because of the gravity of the stone) … the impetus will weaken all the time. Therefore the motion of the stone will be gradually slower, and finally the impetus is so diminished or destroyed that the gravity of the stone prevails and moves the stone towards its natural place.”

Robert Grosseteste, in 1220, proposed the experiment-theory iteration loop. In his commentary on Aristotle’s Posterior Analytics, he describes what he calls “resolution and composition”, a method of reasoning that moves from particulars to universals, then from universals back to particulars to make predictions. Crucially, he emphasizes that both phases require experimental verification.

In 1360, Nicole Oresme gave explicit medieval support for a rotating Earth:

“One cannot by any experience whatsoever demonstrate that the heavens … are moved with a diurnal motion… One can not see that truly it is the sky that is moving, since all movement is relative.”

He went on to say that the air moves with the Earth, so no wind results. He challenged astrologers:

“The heavens do not act on the intellect or will… which are superior to corporeal things and not subject to them.”

Even if one granted some influence of the stars on matter, Oresme wrote, their effects would be drowned out by terrestrial causes.

These were dead ends, it seems. Some blame the Black Death, but the plague left surprisingly few marks in the intellectual record. Despite mass mortality, history shows politics, war, and religion marching on. What waned was interest in reviving ancient learning. The cultural machinery required to keep the momentum going stalled. Critical, collaborative, self-correcting inquiry didn’t catch on.

A similar “almost” occurred in the Islamic world between the 10th and 16th centuries. Ali al-Qushji and al-Birjandi developed sophisticated models of planetary motion and even toyed with Earth’s rotation. A layperson would struggle to distinguish some of al-Birjandi’s thought experiments from Galileo’s. But despite a wealth of brilliant scholars, there were few institutions equipped or allowed to convert knowledge into power. The idea that observation could disprove theory or override inherited wisdom was socially and theologically unacceptable. That brings us to a less obvious candidate – ancient Rome.

Rome is famous for infrastructure – aqueducts, cranes, roads, concrete, and central heating – but not scientific theory. The usual story is that Roman thought was too practical, too hierarchical, uninterested in pure understanding.

One text complicates that story: De Architectura, a ten-volume treatise by Marcus Vitruvius Pollio, written during the reign of Augustus. Often described as a manual for builders, De Architectura is far more than a how-to. It is a theoretical framework for knowledge, part engineering handbook, part philosophy of science.

Vitruvius was no scientist, but his ideas come astonishingly close to the scientific method. He describes devices like the Archimedean screw and the aeolipile, a primitive steam engine. He discusses acoustics in theater design, and cosmological models passed down from the Greeks. He seems to describe vanishing point perspective, something seen in some Roman art of his day. Most importantly, he insists on a synthesis of theory, mathematics, and practice as the foundation of engineering. He describes something remarkably similar to what we now call science:

“The engineer should be equipped with knowledge of many branches of study and varied kinds of learning… This knowledge is the child of practice and theory. Practice is the continuous and regular exercise of employment… according to the design of a drawing. Theory, on the other hand, is the ability to demonstrate and explain the productions of dexterity on the principles of proportion…”

“Engineers who have aimed at acquiring manual skill without scholarship have never been able to reach a position of authority… while those who relied only upon theories and scholarship were obviously hunting the shadow, not the substance. But those who have a thorough knowledge of both… have the sooner attained their object and carried authority with them.”

This is more than just a plea for well-rounded education. He gives a blueprint for a systematic, testable, collaborative knowledge-making enterprise. If Vitruvius and his peers glimpsed the scientific method, why didn’t Rome take the next step?

The intellectual capacity was clearly there. And Roman engineers, like their later European successors, had real technological success. The problem, it seems, was societal receptiveness.

Science, as Thomas Kuhn famously brought to our attention, is a social institution. It requires the belief that man-made knowledge can displace received wisdom. It depends on openness to revision, structured dissent, and collaborative verification. These were values that Roman elite culture distrusted.

When Vitruvius was writing, Rome had just emerged from a century of brutal civil war. The Senate and Augustus were engaged in consolidating power, not questioning assumptions. Innovation, especially social innovation, was feared. In a political culture that prized stability, hierarchy, and tradition, the idea that empirical discovery could drive change likely felt dangerous.

We see this in Cicero’s conservative rhetoric, in Seneca’s moralism, and in the correspondence between Pliny and Trajan, where even mild experimentation could be viewed as subversive. The Romans could build aqueducts, but they wouldn’t fund a lab.

Like the Islamic world centuries later, Rome had scholars but not systems. Knowledge existed, but the scaffolding to turn it into science – collective inquiry, reproducibility, peer review, invitations for skeptics to refute – never emerged.

Vitruvius’s De Architectura deserves more attention, not just as a technical manual but as a proto-scientific document. It suggests that the core ideas behind science were not exclusive to early modern Europe. They’ve flickered into existence before, in Alexandria, Baghdad, Paris, and Rome, only to be extinguished by lack of institutional fit.

That science finally took root in the 17th century had less to do with discovery than with a shift in what society was willing to do with discovery. The real revolution wasn’t in Newton’s laboratory; it was in the culture.

Rome’s Modern Echo?

It’s worth asking whether we’re becoming more Roman ourselves. Today, we have massively resourced research institutions, global scientific networks, and generations of accumulated knowledge. Yet, in some domains, science feels oddly stagnant or brittle. Dissenting views are not always engaged but dismissed, not for lack of evidence, but for failing to fit a prevailing narrative.

We face a serious, maybe existential question. Does increasing ideological conformity, especially in academia, foster or hamper science?

Obviously, some level of consensus is essential. Without shared standards, peer review collapses. Climate models, particle accelerators, and epidemiological studies rely on a staggering degree of cooperation and shared assumptions. Consensus can be a hard-won product of good science. And it can run perilously close to dogma. In the past twenty years we’ve seen consensus increasingly enforced by legal action, funding monopolies, and institutional ostracism.

String theory may (or may not) be physics’ great white whale. It’s mathematically exquisite but empirically elusive. For decades, critics like Lee Smolin and Peter Woit have argued that string theory has enjoyed a monopoly on prestige and funding while producing little testable output. Dissenters are often marginalized.

Climate science is solidly evidence-based, but responsible scientists point to constant revision of old evidence. Critics like Judith Curry or Roger Pielke Jr. have raised methodological or interpretive concerns, only to find themselves publicly attacked or professionally sidelined. Their critique is labeled denial. Scientific American called Curry a heretic. Lawsuits, like Michael Mann’s long battle with critics, further signal a shift from scientific to pre-scientific modes of settling disagreement.

Jonathan Haidt, Lee Jussim, and others have documented the sharp political skew of academia, particularly in the humanities and social sciences, but increasingly in hard sciences too. When certain political assumptions are so embedded, they become invisible. Dissent is called heresy in an academic monoculture. This constrains the range of questions scientists are willing to ask, a problem that affects both research and teaching. If the only people allowed to judge your work must first agree with your premises, then peer review becomes a mechanism of consensus enforcement, not knowledge validation.

When Paul Feyerabend argued that “the separation of science and state” might be as important as the separation of church and state, he was pushing back against conservative technocratic arrogance. Ironically, his call for epistemic anarchism now resonates more with critics on the right than the left. Feyerabend warned that uniformity in science, enforced by centralized control, stifles creativity and detaches science from democratic oversight.

Today, science and the state, including state-adjacent institutions like universities, are deeply entangled. Funding decisions, hiring, and even allowable questions are influenced by ideology. This alignment with prevailing norms creates a kind of soft theocracy of expert opinion.

The process by which scientific knowledge is validated must be protected from both politicization and monopolization, whether that comes from the state, the market, or a cultural elite.

Science is only self-correcting if its institutions are structured to allow correction. That means tolerating dissent, funding competing views, and resisting the urge to litigate rather than debate. If Vitruvius teaches us anything, it’s that knowing how science works is not enough. Rome had theory, math, and experimentation. What it lacked was a social system that could tolerate what those tools would eventually uncover. We do not yet lack that system, but we are testing the limits.

Extraordinary Popular Miscarriages of Science, Part 6 – String Theory

Posted by Bill Storage in History of Science, Philosophy of Science on May 3, 2025

Introduction: A Historical Lens on String Theory

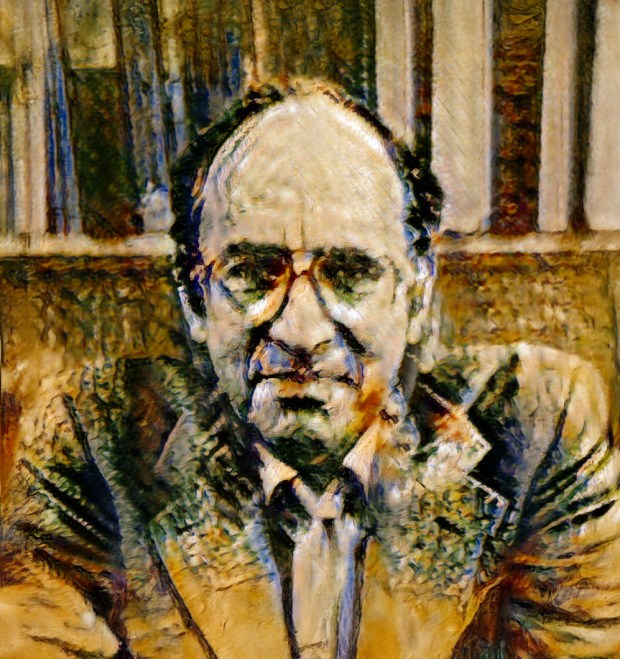

In 2006, I met John Heilbron, widely credited with turning the history of science from an emerging idea into a professional academic discipline. While James Conant and Thomas Kuhn laid the intellectual groundwork, it was Heilbron who helped build the institutions and frameworks that gave the field its shape. Through John I came to see that the history of science is not about names and dates – it’s about how scientific ideas develop, and why. It explores how science is both shaped by and shapes its cultural, social, and philosophical contexts. Science progresses not in isolation but as part of a larger human story.

The “discovery” of oxygen illustrates this beautifully. In the 18th century, Joseph Priestley, working within the phlogiston theory, isolated a gas he called “dephlogisticated air.” Antoine Lavoisier, using a different conceptual lens, reinterpreted it as a new element – oxygen – ushering in modern chemistry. This was not just a change in data, but in worldview.

When I met John, Lee Smolin’s The Trouble with Physics had just been published. Smolin, a physicist, critiques string theory not from outside science but from within its theoretical tensions. Smolin’s concerns echoed what I was learning from the history of science: that scientific revolutions often involve institutional inertia, conceptual blind spots, and sociopolitical entanglements.

My interest in string theory wasn’t about the physics. It became a test case for studying how scientific authority is built, challenged, and sustained. What follows is a distillation of 18 years of notes – string theory seen not from the lab bench, but from a historian’s desk.

A Brief History of String Theory

Despite its name, string theory is more accurately described as a theoretical framework – a collection of ideas that might one day lead to testable scientific theories. This alone is not a mark against it; many scientific developments begin as frameworks. Whether we call it a theory or a framework, it remains subject to a crucial question: does it offer useful models or testable predictions – or is it likely to in the foreseeable future?

String theory originated as an attempt to understand the strong nuclear force. In 1968, Gabriele Veneziano introduced a mathematical formula – the Veneziano amplitude – to describe the scattering of strongly interacting particles such as protons and neutrons. In 1971, Pierre Ramond incorporated supersymmetry into this approach, giving rise to superstrings that could account for both fermions and bosons. In 1974, Joël Scherk and John Schwarz discovered that the theory predicted a massless spin-2 particle with the properties of the hypothetical graviton. This led them to propose string theory not as a theory of the strong force, but as a potential theory of quantum gravity – a candidate “theory of everything.”

Around the same time, however, quantum chromodynamics (QCD) successfully explained the strong force via quarks and gluons, rendering the original goal of string theory obsolete. Interest in string theory waned, especially given its dependence on unobservable extra dimensions and lack of empirical confirmation.

That changed in 1984 when Michael Green and John Schwarz demonstrated that superstring theory could be anomaly-free in ten dimensions, reviving interest in its potential to unify all fundamental forces and particles. Researchers soon identified five mathematically consistent versions of superstring theory.

To reconcile ten-dimensional theory with the four-dimensional spacetime we observe, physicists proposed that the extra six dimensions are “compactified” into extremely small, curled-up spaces – typically represented as Calabi-Yau manifolds. This compactification allegedly explains why we don’t observe the extra dimensions.

In 1995, Edward Witten introduced M-theory, showing that the five superstring theories were different limits of a single 11-dimensional theory. By the early 2000s, researchers like Leonard Susskind and Shamit Kachru began exploring the so-called “string landscape” – a space of perhaps 10^500 (1 followed by 500 zeros) possible vacuum states, each corresponding to a different compactification scheme. This introduced serious concerns about underdetermination – the idea that available empirical evidence cannot determine which among many competing theories is correct.

Compactification introduces its own set of philosophical problems. Critics Lee Smolin and Peter Woit argue that compactification is not a prediction but a speculative rationalization: a move designed to save a theory rather than derive consequences from it. The enormous number of possible compactifications (each yielding different physics) makes string theory’s predictive power virtually nonexistent. The related challenge of moduli stabilization – specifying the size and shape of the compact dimensions – remains unresolved.

Despite these issues, string theory has influenced fields beyond high-energy physics. It has informed work in cosmology (e.g., inflation and the cosmic microwave background), condensed matter physics, and mathematics (notably algebraic geometry and topology). How deep and productive these connections run is difficult to assess without domain-specific expertise that I don’t have. String theory has, in any case, produced impressive mathematics. But mathematical fertility is not the same as scientific validity.

The Landscape Problem

Perhaps the most formidable challenge string theory faces is the landscape problem: the theory allows for an enormous number of solutions – on the order of 10^500. Each solution represents a possible universe, or “vacuum,” with its own physical constants and laws.

Why so many possibilities? The extra six dimensions required by string theory can be compactified in myriad ways. Each compactification, combined with possible energy configurations (called fluxes), gives rise to a distinct vacuum. This extreme flexibility means string theory can, in principle, accommodate nearly any observation. But this comes at the cost of predictive power.

Critics argue that if theorists can forever adjust the theory to match observations by choosing the right vacuum, the theory becomes unfalsifiable. On this view, string theory looks more like metaphysics than physics.

Some theorists respond by embracing the multiverse interpretation: all these vacua are real, and our universe is just one among many. The specific conditions we observe are then attributed to anthropic selection – we could only observe a universe that permits life like us. This view aligns with certain cosmological theories, such as eternal inflation, in which different regions of space settle into different vacua. But eternal inflation can exist independent of string theory, and none of this has been experimentally confirmed.

The Problem of Dominance

Since the 1980s, string theory has become a dominant force in theoretical physics. Major research groups at Harvard, Princeton, and Stanford focus heavily on it. Funding and institutional prestige have followed. Prominent figures like Brian Greene have elevated its public profile, helping transform it into both a scientific and cultural phenomenon.

This dominance raises concerns. Critics such as Smolin and Woit argue that string theory has crowded out alternative approaches like loop quantum gravity or causal dynamical triangulations. These alternatives receive less funding and institutional support, despite offering potentially fruitful lines of inquiry.

In The Trouble with Physics, Smolin describes a research culture in which dissent is subtly discouraged and young physicists feel pressure to align with the mainstream. He worries that this suppresses creativity and slows progress.

Estimates suggest that between 1,000 and 5,000 researchers work on string theory globally – a significant share of theoretical physics resources. Reliable numbers are hard to pin down.

Defenders of string theory argue that it has earned its prominence. They note that theoretical work is relatively inexpensive compared to experimental research, and that string theory remains the most developed candidate for unification. Still, the issue of how science sets its priorities – how it chooses what to fund, pursue, and elevate – remains contentious.

Wolfgang Lerche of CERN once called string theory “the Stanford propaganda machine working at its fullest.” As with climate science, 97% of string theorists agree that they don’t want to be defunded.

Thomas Kuhn’s Perspective

The logical positivists and Karl Popper would almost certainly dismiss string theory as unscientific due to its lack of empirical testability and falsifiability – core criteria in their respective philosophies of science. Thomas Kuhn would offer a more nuanced interpretation. He wouldn’t label string theory unscientific outright, but would express concern over its dominance and the marginalization of alternative approaches. In Kuhn’s framework, such conditions resemble the entrenchment of a paradigm during periods of normal science, potentially at the expense of innovation.

Some argue that string theory fits Kuhn’s model of a new paradigm, one that seeks to unify quantum mechanics and general relativity – two pillars of modern physics that remain fundamentally incompatible at high energies. Yet string theory has not brought about a Kuhnian revolution. It has not displaced existing paradigms, and its mathematical formalism is often incommensurable with traditional particle physics. From a Kuhnian perspective, the landscape problem may be seen as a growing accumulation of anomalies. But a paradigm shift requires a viable alternative – and none has yet emerged.

Lakatos and the Degenerating Research Program

Imre Lakatos offered a different lens, seeing science as a series of research programs characterized by a “hard core” of central assumptions and a “protective belt” of auxiliary hypotheses. A program is progressive if it predicts novel facts; it is degenerating if it resorts to ad hoc modifications to preserve the core.

For Lakatos, string theory’s hard core would be the idea that all particles are vibrating strings and that the theory unifies all fundamental forces. The protective belt would include compactification schemes, flux choices, and moduli stabilization – all adjusted to fit observations.

Critics like Sabine Hossenfelder argue that string theory is a degenerating research program: it absorbs anomalies without generating new, testable predictions. Others note that it is progressive in the Lakatosian sense because it has led to advances in mathematics and provided insights into quantum gravity. Historians of science are divided. Johansson and Matsubara (2011) argue that Lakatos would likely judge it degenerating; Cristin Chall (2019) offers a compelling counterpoint.

Perhaps string theory is progressive in mathematics but degenerating in physics.

The Feyerabend Bomb

Paul Feyerabend, whom Lee Smolin knew from his time at Harvard, was the iconoclast of 20th-century philosophy of science. Feyerabend would likely have dismissed string theory as a dogmatic, aesthetic fantasy. He might write something like:

“String theory dazzles with equations and lulls physics into a trance. It’s a mathematical cathedral built in the sky, a triumph of elegance over experience. Science flourishes in rebellion. Fund the heretics.”

Even if this caricature overshoots, Feyerabend’s tools offer a powerful critique:

- Untestability: String theory’s predictions remain out of reach. Its core claims – extra dimensions, compactification, vibrational modes – cannot be tested with current or even foreseeable technology. Feyerabend challenged the privileging of untested theories (e.g., Copernicanism in its early days) over empirically grounded alternatives.

- Monopoly and suppression: String theory dominates intellectual and institutional space, crowding out alternatives. Eric Weinstein recently said, in Feyerabendian tones, “its dominance is unjustified and has resulted in a culture that has stifled critique, alternative views, and ultimately has damaged theoretical physics at a catastrophic level.”

- Methodological rigidity: Progress in string theory is often judged by mathematical consistency rather than by empirical verification – an approach reminiscent of scholasticism. Feyerabend would point to Johannes Kepler’s early attempt to explain planetary orbits using a purely geometric model based on the five Platonic solids. Kepler devoted 17 years to this elegant framework before abandoning it when observational data proved it wrong.

- Sociocultural dynamics: The dominance of string theory stems less from empirical success than from the influence and charisma of prominent advocates. Figures like Brian Greene, with their public appeal and institutional clout, help secure funding and shape the narrative – effectively sustaining the theory’s privileged position within the field.

- Epistemological overreach: The quest for a “theory of everything” may be misguided. Feyerabend would favor many smaller, diverse theories over a single grand narrative.

Historical Comparisons

Proponents note that other landmark theories emerged from mathematics well before their experimental confirmation, and they compare string theory to these historical cases. Examples include:

- Planet Neptune: Predicted by Urbain Le Verrier based on irregularities in Uranus’s orbit, observed in 1846.

- General Relativity: Einstein predicted the bending of light by gravity in 1915, confirmed by Arthur Eddington’s 1919 solar eclipse measurements.

- Higgs Boson: Predicted by the Standard Model in the 1960s, observed at the Large Hadron Collider in 2012.

- Black Holes: Predicted by general relativity, first direct evidence from gravitational waves observed in 2015.

- Cosmic Microwave Background: Predicted from Big Bang theory by Ralph Alpher and Robert Herman (1948), discovered in 1965.

- Gravitational Waves: Predicted by general relativity, detected in 2015 by the Laser Interferometer Gravitational-Wave Observatory (LIGO).

But these examples differ in kind. Their predictions were always testable in principle and ultimately tested. String theory, in contrast, operates at the Planck scale (~10^19 GeV), far beyond what current or foreseeable experiments can reach.

Special Concern Over Compactification

A concern I have not seen discussed elsewhere – even among critics like Smolin or Woit – is the epistemological status of compactification itself. Would the idea ever have arisen apart from the need to reconcile string theory’s ten dimensions with the four-dimensional spacetime we experience?

Compactification appears ad hoc, lacking grounding in physical intuition. It asserts that dimensions themselves can be small and curled – yet concepts like “small” and “curled” are defined within dimensions, not of them. Saying a dimension is small is like saying that time – not a moment in time, but time itself – can be “soon” or short in duration. It misapplies the very conceptual framework through which such properties are understood. At best, it’s a strained metaphor; at worst, it’s a category mistake and conceptual error.

This conceptual inversion reflects a logical gulf that proponents overlook or ignore. They say compactification is a mathematical consequence of the theory, not a contrivance. But without grounding in physical intuition – a deeper concern than empirical support – compactification remains a fix, not a forecast.

Conclusion

String theory may well contain a correct theory of fundamental physics. But without any plausible route to identifying it, string theory as practiced is bad science. It absorbs talent and resources, marginalizes dissent, and stifles alternative research programs. It is extraordinarily popular – and a miscarriage of science.

Fuck Trump: The Road to Retarded Representation

Posted by Bill Storage in History of Science on April 2, 2025

On February 11, 2025, the American Federation of Government Employees (AFGE) staged a “Rally to Save the Civil Service” at the U.S. Capitol. The event aimed to protest proposed budget cuts and personnel changes affecting federal agencies under the Trump administration. Notable attendees included Senators Brian Schatz (D-HI) and Chris Van Hollen (D-MD), and Representatives Donald Norcross (D-NJ) and Maxine Dexter (D-OR).

Dexter took the mic and said that “we have to fuck Trump.” Later Norcross led a “Fuck Trump” chant. The senators and representatives then joined a song with the refrain, “We want Trump in jail.” “Fuck Donald Trump and Elon Musk,” added Rep. Mark Pocan (D-WI).

This sort of locution might be seen as a paradigmatic example of free speech and authenticity in a moment of candid frustration, devised to align the representatives with a community that is highly critical of Trump. On this view, “Fuck Trump” should be understood within the context of political discourse and rhetorical appeal to a specific audience’s emotions and cultural values.

It might also be seen as a sad reflection of how low the Democratic Party has sunk and how low the intellectual bar has dropped to become a representative in the US Congress.

I mostly write here about the history of science or, more precisely, about History of Science, the academic field focused on the development of scientific knowledge and the ways that scientific ideas, theories, and discoveries have evolved over time, and on how they shape and are shaped by cultural, social, political, and philosophical contexts. I held a Visiting Scholar appointment in the field at UC Berkeley for a few years.

The Department of the History of Science at UC Berkeley was created in 1960. There in 1961, Thomas Kuhn (1922 – 1996) completed the draft of The Structure of Scientific Revolutions, which very unexpectedly became the most cited academic book of the 20th century. I was fortunate to have second-hand access to Kuhn through an 18-year association with John Heilbron (1934 – 2023), who, outside of family, was by far the greatest influence on what I spend my time thinking about. John, Vice-Chancellor Emeritus of the UC System and senior research fellow at Oxford, was Kuhn’s grad student and researcher while Kuhn was writing Structure.

I want to discuss here the uncannily direct ties between Thomas Kuhn’s analysis of scientific revolutions and Rep. Norcross’s chanting “Fuck Trump,” along with two related aspects of the Kuhnian aftermath. The second is academic precedents that might be seen as giving justification to Norcross’s pronouncements. Third is the decline in academic standards over the time since Kuhn was first understood to be a validation of cultural relativism. To make this case, I need to explain why Thomas Kuhn became such a big deal, what relativism means in this context, and what Kuhn had to do with relativism.

To do that I need to use the term epistemology. I can’t do without it. Epistemology deals with questions that were more at home with the ancient Greeks than with modern folk. What counts as knowledge? How do we come to know things? What can be known for certain? What counts as evidence? What do we mean by probable? Where does knowledge come from, and what justifies it?

These questions are key to History of Science because science claims to have special epistemic status. Scientists and most historians of science, including Thomas Kuhn, believe that most science deserves that status.

Kernels of scientific thinking can be found in the ancient Greeks and Romans and sporadically through the Middle Ages. Examples include Adelard of Bath, Roger Bacon, John of Salisbury, and Averroes (Ibn Rushd). But prior to the Copernican Revolution (starting around 1550 and exploding under Galileo, Kepler, and Newton) most people were happy with the idea that knowledge was “received,” either through the ancients or from God and religious leaders, or from authority figures of high social status. A statement or belief was considered “probable”, not if it predicted a likely future outcome but if it could be supported by an authority figure or was justified by received knowledge.

Scientific thinking, roughly after Copernicus, introduced the radical notion that the universe could testify on its own behalf. That is, physical evidence and observations (empiricism) could justify a belief against all prior conflicting beliefs, regardless of what authority held them.

Science, unlike the words of God, theologians, and kings, does not deal in certainty, despite the number of times you have heard the phrase “scientifically proven fact.” There is no such thing. Proof is in the realm of math, not science. Laws of nature are generalizations about nature that we have good reason to treat as universally and timelessly true. But they are always contingent. 2 + 2 is always 4, in the abstract mathematical sense. Two atoms plus two atoms sometimes makes three atoms. It’s called fusion or transmutation. No observation can ever show 2 + 2 = 4 to be false. In contrast, an observation may someday show E = mc² to be false.

Science was contagious. Empiricism laid the foundation of the Enlightenment by transforming the way people viewed the natural world. John Locke’s empirical philosophy greatly influenced the foundation of the United States. Empiricism contrasts with rationalism, the idea that knowledge can be gained by sheer reasoning and through innate ideas. Plato was a rationalist. Aristotle thought Plato’s rationalism was nonsense. His writings show he valued empiricism, though he was not a particularly good empiricist (“a dreadfully bad physical scientist,” wrote Kuhn). Even 2400 years ago, there was tension between rationalism and empiricism.

The ancients held related concerns about the contrast between absolutism and relativism. Absolutism posits that certain truths, moral principles, and standards are universally and timelessly valid, regardless of perspectives, cultures, or circumstances. Relativism, in contrast, holds that truth, morality, and knowledge are context-sensitive and are not universal or timeless.

In his dialogue Theaetetus, Plato examines epistemological relativism by challenging his adversary Protagoras, who asserts that truth and knowledge are not absolute. In Theaetetus, Socrates, Plato’s mouthpiece, asks, “If someone says, ‘This is true for me, but that is true for you,’ then does it follow that truth is relative to the individual?”

Epistemological relativism holds that truth is relative to a community. It is closely tied to the anti-Enlightenment romanticism that developed in the late 1700s. The romantics thought science was spoiling the mystery of nature. “Our meddling intellect mis-shapes the beauteous forms of things: We murder to dissect,” wrote Wordsworth.

Relativism of various sorts – epistemological, moral, even ontological (what kinds of things exist) – resurged in the mid-1900s in poststructuralism and postmodernism. I’ll return to postmodernism later.

The contingent nature of scientific beliefs (as opposed to the certitude of math), right from the start in the Copernican era, was not seen by scientists or philosophers as support for epistemological relativism. Scientists – good ones, anyway – hold it only probable, not certain, that all copper is conductive. This contingent state of scientific knowledge does not, however, mean that copper can be conductive for me but not for you. Whatever evidence might exist for the conductivity of copper, scientists believe, can speak for itself. If we disagreed about conductivity, we could pull out an ohmmeter and that would settle the matter, according to scientists.

Science has always had its enemies, at times including clerics, romantics, Luddites, and environmentalists. Science, viewed as an institution, could be seen as the monster that spawned atomic weapons, environmental ruin, stem cell hubris, and inequality. But those are consequences of science, external to its fundamental method. They don’t challenge science’s special epistemic status, but epistemic relativists do.

Relativism about knowledge – epistemological relativism – gained steam in the 1800s. Martin Heidegger, Karl Marx (though not intentionally), and Sigmund Freud, among others, brought the idea into academic spheres. While moral relativism and ethical pluralism (likely influenced by Friedrich Nietzsche) had long been in popular culture, epistemological relativism was sealed in Humanities departments, apparently because the objectivity of science was unassailable.

Enter Thomas Kuhn, Physics PhD turned historian for philosophical reasons. His Structure was originally published as a humble monograph in International Encyclopedia of Unified Science, then as a book in 1962. One of Kuhn’s central positions was that evidence cannot really settle non-trivial scientific debates because all evidence relies on interpretation. One person may “see” oxygen in the jar while another “sees” de-phlogisticated air. (Phlogiston was part of a theory of combustion that was widely believed before Antoine Lavoisier “disproved” it along with “discovering” oxygen.) Therefore, there is always a social component to scientific knowledge.

Kuhn’s point, seemingly obvious and innocuous in retrospect, was really nothing new. Others, like Michael Polanyi, had published similar thoughts earlier. But for reasons we can only guess at, Kuhn’s contention that scientific paradigms are influenced by social, historical, and subjective factors was just the ammo that epistemological relativism needed to escape the confines of Humanities departments. Kuhn’s impact probably stemmed from the political climate of the 1960s and the detailed way he illustrated examples of theory-laden observations in science. His claim that “even in physics, there is no standard higher than the assent of the relevant community” was devoured by socialists and relativists alike – two classes with much overlap in academia at that time. That makes Kuhn a relativist of sorts, but he still thought science to be the best method of investigating the natural world.

Kuhn argued that scientific revolutions and paradigm shifts (a term coined by Kuhn) are fundamentally irrational. That is, during scientific revolutions, scientific communities depart from empirical reasoning. Adherents often defend their theories illogically, discounting disconfirming evidence without grounds. History supports Kuhn on this for some cases, like Copernicus vs. Ptolemy, Einstein vs. Newton, quantum mechanics vs. Einstein’s deterministic view of the subatomic, but not for others like plate tectonics and Watson and Crick’s discovery of the double-helix structure of DNA, where old paradigms were replaced by new ones with no revolution.

The Strong Programme, introduced by David Bloor, Barry Barnes, John Henry and the Edinburgh School as Sociology of Scientific Knowledge (SSK), drew heavily on Kuhn. It claimed to understand science only as a social process. Unlike Kuhn, it held that all knowledge, not just science, should be studied in terms of social factors without privileging science as a special or uniquely rational form of knowledge. That is, it denied that science had a special epistemic status and outright rejected the idea that science is inherently objective or rational. For the Strong Programme, science was “socially constructed.” The beliefs and practices of scientific communities are shaped solely by social forces and historical contexts. Bloor and crew developed their “symmetry principle,” which states that the same kinds of causes must be used to explain both true and false scientific beliefs.

The Strong Programme folk called themselves Kuhnians. What they got from Kuhn was that science should come down from its pedestal, since all knowledge, including science, is relative to a community. And each community can have its own truth. That is, the Strong Programmers were pure epistemological relativists. Kuhn repudiated epistemological relativism (“I am not a Kuhnian!”), and to his chagrin, was still lionized by the strong programmers. “What passes for scientific knowledge becomes, then, simply the belief of the winners. I am among those who have found the claims of the strong program absurd: an example of deconstruction gone mad.” (Deconstruction is an essential concept in postmodernism.)

“Truth, at least in the form of a law of noncontradiction, is absolutely essential,” said Kuhn in a 1990 interview. “You can’t have reasonable negotiation or discourse about what to say about a particular knowledge claim if you believe that it could be both true and false.”

No matter. The Strong Programme and other Kuhnians appropriated Kuhn and took it to the bank. And the university, especially the social sciences. Relativism had lurked in academia since the 1800s, but Kuhn’s scientific justification that science isn’t justified (in the eyes of the Kuhnians) brought it to the surface.

Herbert Marcuse, “Father of the New Left,” who taught at UC San Diego from 1965, does not appear to have had contact with Kuhn. But Marcuse, like the Strong Programme, argued that knowledge was socially constructed, a position that Kuhnians attributed to Kuhn. Marcuse was critical of the way that Enlightenment values and scientific rationality were used to legitimize oppressive structures of power in capitalist societies. He argued that science, in its role as part of the technological apparatus, served the interests of oppressors. Marcuse saw science as an instrument of domination rather than emancipation. The term “critical theory” originated in the Frankfurt School in the early 20th century, but Marcuse, once a main figure in Frankfurt’s Institute for Social Research, put Critical Theory on the map in America. Higher academia began its march against traditional knowledge, waving the banners of Marcusian cynicism and Kuhnian relativism.

Postmodernism means many things in different contexts. In 1960s academia, it referred to a reaction against modernism and Enlightenment thinking, particularly thought rooted in reason, progress, and universal truth. Many of the postmodernists saw in Kuhn a justification for certain forms of both epistemic and moral relativism. Prominent postmodernists included Jean-François Lyotard, Michel Foucault, Jean Baudrillard, Richard Rorty, and Jacques Derrida. None of them, to my knowledge, ever made a case for unqualified epistemological relativism. Their academic intellectual descendants often do.

Twentieth-century postmodernism had significant intellectual output, a point lost on critics like Gross and Levitt (Higher Superstition, 1994) and Dinesh D’Souza. Derrida’s application of deconstruction to written text took hermeneutics to a new level and has proved immensely valuable to the analysis of ancient texts, as has the reader-response criticism approach put forth by Louise Rosenblatt (who was not aligned with the radical skepticism typical of postmodernism) and Jacques Derrida, and embraced by Stanley Fish (more on whom below). All practicing scientists would benefit from Richard Rorty’s elaborations on the contingency of scientific knowledge, which are consistent with those held by Descartes, Locke, and Kuhn.

Michel Foucault attacked science directly, particularly psychology and, oddly, from where we stand today, sociology. He thought those sciences constructed a specific normative picture of what it means to be human, and that the farther a person was from the idealized clean-cut straight white western European male, the more aberrant those sciences judged the person to be. Males, on Foucault’s view, had repressed women for millennia to construct an ideal of masculinity that serves as the repository of political power. He was brutally anti-Enlightenment and was disgusted that “our discourse has privileged reason, science, and technology.” Modernity must be condemned constantly and ruthlessly. Foucault was gay, and for a time, he wanted sex to be the center of everything.

Foucault was once a communist. His influence on identity politics and woke ideology is obvious, but Foucault ultimately condemned communism and concluded that sexual identity was an absurd basis on which to form one’s personal identity.

Rosenblatt, Rorty, Derrida, and even at times Foucault, despite their radical positions, displayed significant intellectual rigor. This seems far less true of their intellectual offspring. Consider Sandra Harding, author of “The Gender Dimension of Science and Technology” and consultant to the U.N. Commission on Science and Technology for Development. Harding argues that the Enlightenment resulted in a gendered (male) conception of knowledge. She wrote in The Science Question in Feminism that it would be “illuminating and honest” to call Newton’s laws of motion “Newton’s rape manual.”

Cornel West, who has held fellowships at Harvard, Yale, Princeton, and Dartmouth, teaches that the Enlightenment concepts of reason and of individual rights were projected by the ruling classes of the West to guarantee their own liberty while repressing racial minorities. Critical Race Theory, the offspring of Marcuse’s Critical Theory, questions, as stated by Richard Delgado in Critical Race Theory, “the very foundations of the liberal order, including equality theory, legal reasoning, Enlightenment rationalism, and neutral principles of constitutional law.”

Allan Bloom, a career professor of Classics who translated Plato’s Republic in 1968, wrote in his 1987 The Closing of the American Mind on the decline of intellectual rigor in American universities. Bloom wrote that in the 1960s, “the culture leeches, professional and amateur, began their great spiritual bleeding” of academics and democratic life. Bloom thought that the pursuit of diversity and universities’ desire to increase the number of college graduates at any cost undermined the outcomes of education. He saw, in the 1960s, social and political goals taking priority over the intellectual and academic purposes of education, with the bulk of unfit students receiving degrees of dubious value in the Humanities, his own area of study.

At American universities, Marx, Marcuse, and Kuhn were invoked in the Humanities to paint the West, and especially the US, as cultures of greed and exploitation. Academia believed that Enlightenment epistemology and Enlightenment values had been stripped of their grandeur by sound scientific and philosophical reasoning (i.e., Kuhn). Bloom wrote that universities were offering students every concession other than education. “Openness used to be the virtue that permitted us to seek the good by using reason. It now means accepting everything and denying reason’s power,” wrote Bloom, adding that by 1980 the belief that truth is relative was essential to university life.

Anti-foundationalist Stanley Fish, Visiting Professor of Law at Yeshiva University, invoked Critical Theory in 1985 to argue that American judges should think of themselves as “supplementers” rather than “textualists.” As such, they “will thereby be marginally more free than they otherwise would be to infuse into constitutional law their current interpretations of our society’s values.” Fish openly rejects the idea of judicial neutrality because interpretation, whether in law or literature, is always contingent and socially constructed.

If Bloom’s argument is even partly valid, we now live in a second or third generation of the academic consequences of the combined decline of academic standards and the incorporation of moral, cultural, and epistemological relativism into college education. We have graduated PhDs in the Humanities, educated by the likes of Sandra Harding and Cornel West, who never should have been in college, and who learned nothing of substance there beyond relativism and a cynical disgust for reason. And those PhDs are now educators who have graduated more PhDs.

Peer-reviewed journals are now being reviewed by peers who, by the standards of three generations earlier, might not be qualified to grade spelling tests. The academic products of this educational system are hired to staff government agencies and HR departments, and to teach school children Critical Race Theory, Queer Theory, and Intersectionality – which are given the epistemic eminence of General Relativity – and the turpitude of national pride and patriotism.

An example, with no offense intended to those who call themselves queer, would be to challenge the epistemic status of Queer Theory. Is it parsimonious? What is its research agenda? Does it withstand empirical scrutiny and generate consistent results? Do its theorists adequately account for disconfirming evidence? What bold hypothesis in Queer Theory makes a falsifiable prediction?

Herbert Marcuse’s intellectual descendants, educated under the standards detailed by Bloom, now comprise progressive factions within the Democratic Party, particularly those advocating socialism and Marxist-inspired policies. The rise of figures like Bernie Sanders, Alexandria Ocasio-Cortez, and others associated with the “Democratic Socialists of America” reflects a broader trend in American politics toward embracing a combination of Marcuse’s critique of capitalism, epistemic and moral relativism, and a hefty decline in academic standards.

One direct example is the notion that certain forms of speech including reactionary rhetoric should not be tolerated if they undermine social progress and equity. Allan Bloom again comes to mind: “The most successful tyranny is not the one that uses force to assure uniformity but the one that removes the awareness of other possibilities.”

Echoes of Marcuse, like others of the 1960s (Frantz Fanon, Stokely Carmichael, the Weather Underground) who endorsed rage and violence in anti-colonial struggles, are heard in modern academic outrage that is seen by its adherents as a necessary reaction against oppression. Judith Butler of UC Berkeley, who called the October 2023 Hamas attacks an “act of armed resistance,” once wrote that “understanding Hamas, Hezbollah as social movements that are progressive, that are on the left, that are part of a global left, is extremely important.” College students now learn that rage is an appropriate and legitimate response to systemic injustice, patriarchy, and oppression. Seeing the US as a repressive society that fosters complacency toward the marginalization of under-represented groups while striving to impose heteronormativity and hegemonic power is, to academics like Butler, grounds for rage, if not for violent response.

Through their college educations and through ideas and rhetoric supported by “intellectual” movements bred in American universities, politicians, particularly those more aligned with relativism and Marcuse-styled cynicism, feel justified in using rhetorical tools born of relaxed academic standards and tangential admissions criteria.

In the relevant community, “Fuck Trump” is not an aberrant tantrum in an echo chamber but a justified expression of solidarity-building and speaking truth to power. But I would argue, following Bloom, that it reveals political retardation originating in shallow academic domains following the deterioration of civic educational priorities.

Examples of such academic domains serving as obvious predecessors to present causes at the center of left politics include:

- 1965: Herbert Marcuse (UC San Diego) in Repressive Tolerance argues for intolerance toward prevailing policies, stating that a “liberating tolerance” would consist of intolerance to right-wing movements and toleration of left-wing movements. Marcuse advanced Critical Theory and a form of Marxism modified by genders and races replacing laborers as the victims of capitalist oppression.

- 1971: Murray Bookchin’s (Alternative University, New York) Post-Scarcity Anarchism followed by The Ecology of Freedom (1982) introduce the eco-socialism that gives rise to the Green New Deal.

- 1980: Derrick Bell’s (New York University School of Law) “Brown v. Board of Education and the Interest-Convergence Dilemma” argues that civil rights advance only when they align with the interests of white elites. Later, Bell, Kimberlé Crenshaw, and Richard Delgado (Seattle University) develop Critical Race Theory, claiming that “colorblindness” is a form of oppression.

- 1984: Michel Foucault’s (Collège de France) The Courage of Truth addresses how individuals and groups form identities in relation to truth and power. His work greatly informs Queer Theory, post-colonial ideology, and the concept of toxic masculinity.

- 1985: Stanley Fish (Yeshiva University) and Thomas Grey (Stanford Law School) reject judicial neutrality and call for American judges to infuse into constitutional law their current interpretations of our society’s values.

- 1989: Kimberlé Crenshaw of Columbia Law School introduced the concept of Intersectionality, claiming that traditional frameworks for understanding discrimination were inadequate because they overlooked the ways that multiple forms of oppression (e.g., race, gender, class) interacted.

- 1990: Judith Butler’s (UC Berkeley) Gender Trouble introduces the concept of gender performativity, arguing that gender is socially constructed through repeated actions and expressions. Butler argues that the emotional well-being of vulnerable individuals supersedes the right to free speech.

- 1991: Teresa de Lauretis of UC Santa Cruz introduced the term “Queer Theory” to challenge traditional understandings of gender and sexuality, particularly in relation to identity, norms, and power structures.

Marcusian cynicism might have simply died an academic fantasy, as it seemed destined to do through the early 1980s, if not for its synergy with the cultural relativism that was bolstered by the universal and relentless misreading and appropriation of Thomas Kuhn that permeated academic thought in the 1960s through 1990s. “Fuck Trump” may have happened without Thomas Kuhn through a different thread of history, but the path outlined here is direct and well-travelled. I wonder what Kuhn would think.
