Posts Tagged History of Science

Popular Miscarriages of Science, part 3 – The Great Lobotomy Rush

Posted by Bill Storage in History of Science on January 25, 2024

On Dec. 16, 1960, Dr. Walter Freeman told his 12-year-old patient Howard Dully that he was going to run some tests. Freeman then delivered four electric shocks to Dully to put him out, writing in his surgery notes that three would have been sufficient. Then Freeman inserted a tool resembling an ice pick above Dully’s eye socket and drove it several inches into his brain. Dully’s mother had died five years earlier. His stepmother told Freeman, a psychiatrist, that Dully had attacked his brother, something the rest of Dully’s family later said never happened. It was enough for Freeman to diagnose Dully as schizophrenic and perform another of the thousands of lobotomies he did between 1936 and 1967.

“By some miracle it didn’t turn me into a zombie,” said Dully in 2005, after a two-year quest for the historical details of his lobotomy. His story got wide media coverage, including an NPR story called ‘My Lobotomy’: Howard Dully’s Journey. Much of the media coverage of Dully and lobotomies focused on Walter Freeman, painting Freeman as a reckless and egotistical monster.

Weston State Hospital (Trans-Allegheny Lunatic Asylum), photo courtesy of Tim Kiser

In The Lobotomy Letters: The Making of American Psychosurgery (2015), Mical Raz asks, “Why, during its heyday was there nearly no objection to lobotomy in the American medical community?” Raz doesn’t seem to have found a satisfactory answer.

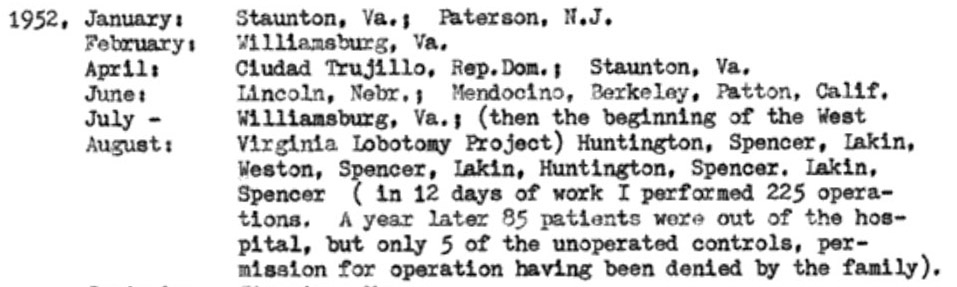

(I’m including a lot of in-line references here, not to be academic, but because modern media coverage often disagrees with primary sources and scholarly papers on the dates, facts, and numbers of lobotomy. Most popular media coverage appears to have used other current articles as its sources, rather than going to primary sources. As a trivial example, Freeman’s notes report that in Weston, WV, he did 225 lobotomies in 12 days. The number 228 is repeated in all the press on Howard Dully. This post is on the longer side, because the deeper I dug, the less satisfied I became that we have learned the right lesson from lobotomies.)

A gripping account of lobotomies appeared in Pandora’s Lab (2017) by Dr. Paul Offit, developer of the rotavirus vaccine. It tells of a reckless Freeman buoyed by unbridled media praise. Offit’s piece concludes with a warning about wanting quick fixes. If it seems too good to be true, it probably is.

In the 2005 book, The Lobotomist: A Maverick Medical Genius and his Tragic Quest to Rid the World of Mental Illness, Jack El-Hai gave a much more nuanced account, detailing many patients who thought their lobotomies had greatly improved their lives. El-Hai’s Walter Freeman was on a compassionate crusade to help millions of asylum patients escape permanent incarceration in gloomy state mental institutions. El-Hai documents Freeman’s life-long postoperative commitment to his patients, crisscrossing America to visit the patients that he had crisscrossed America to operate on. Despite performing most of his surgery in state mental hospitals, Freeman always refused to operate on people in prison, against pressure from defense attorneys’ pleas to render convicts safe for release.

Contrasting El-Hai’s relatively kind assessment, the media coverage of Dully aligns well with Offit’s account in Pandora’s Lab. On researching lobotomies, opinions of the medical community, and media coverage, I found I disagreed with Offit’s characterization of the media coverage, more about which below. In all these books I saw signs that lobotomies are a perfect instance of what Thomas Kuhn and related thinkers would call bad science, so I want to dig into that here. I first need to expand on Kuhn, his predecessors, and his followers a bit.

Kuhn’s Precursors and the Kuhnian Groupies

Kuhn’s writing, particularly Structure of Scientific Revolutions, was unfortunately ambiguous. His friends, several of whom I was lucky enough to meet, and his responses to his critics tell us that he was no enemy of science. He thought science was epistemically special. But he thought science’s claims to objectivity couldn’t be justified. Science, in Kuhn’s view, was not simply logic applied to facts. In Structure, Kuhn wrote many things that had been said before, though by sources Kuhn wasn’t aware of.

Karl Marx believed that consciousness was determined by social factors and that thinking will always be ideological. Marx denied that what Francis Bacon (1561-1626) had advocated was possible. I.e., we can never intentionally free our minds of the idols of the mind, the prejudices resulting from social interactions and from our tribe. Kuhn partly agreed but thought that communities of scientists engaged in competitive peer review could still do good science.

Ludwik Fleck’s 1935 Genesis and Development of a Scientific Fact argued that science was a thought collective of a community whose members share values. In 1958, Norwood Hanson, in Patterns of Discovery, wrote that all observation is theory-laden. Hanson agreed with Marx that neutral observation cannot exist, so neither can objective knowledge. “Seeing is an experience. People see, not their eyes,” said Hanson.

Most like Kuhn was Michael Polanyi, a brilliant Hungarian-born polymath (chemist, historian, economist). In his 1946 Science, Faith and Society, Polanyi wrote that scientific knowledge was produced by individuals under the influence of the scientific collectives in which they operated. Polanyi long preceded Kuhn, who was unaware of Polanyi’s work, in most of Kuhn’s key concepts. Unfortunately, Polanyi’s work didn’t appear in English until after Kuhn was famous. An aspect of Polanyi’s program important to this look at lobotomies is his idea that competition in science works like competition in business. The “market” determines winners of competing theories based on the judgments of its informed participants. Something like a market process exists within the institutional structure of scientific research.

Kuhn’s Structure was perfectly timed to correspond to the hippie/protest era, which distrusted big pharma and the rest of science, and especially the cozy relationships between academia, government, and corporations – institutions of social and political power. Kuhn had no idea that he was writing what would become one of the most influential books of the century, and one that became the basis for radical anti-science perspectives. Some communities outright declared war on objectivity and rationality. Science was socially constructed, said these “Kuhnians.” Kuhn was appalled.

A Kuhnian Take on Lobotomies

Folk with STEM backgrounds might agree that politics and influence can affect which scientific studies get funded but would probably disagree with Marx, Fleck, and Hanson that interest, influence, and values permeate scientific observations (what evidence gets seen and how it is assimilated), the interpretation of measurements and data, what data gets dismissed as erroneous or suppressed, and finally the conclusions drawn from observations and data.

The concept of social construction is in my view mostly garbage. If everything is socially constructed, then it isn’t useful to say of any particular thing that it is socially constructed. But the Kuhnians, who, oddly, have now come to trust institutions like big pharma, government science, and Wikipedia, were right in principle that science is in some legitimate sense socially constructed, though they were perhaps wrong about the most egregious cases, then and now. The lobotomy boom seems a good fit for what the Kuhnians worried about.

If there is going to be a public and democratic body of scientific knowledge (science definition 2 above) based on scientific methods and testability (definition 1 above), some community of scientists has to agree on what has been tested and falsified for the body of knowledge to get codified and publicized. Fleck and Hanson’s positions apply here. To some degree, that forces definition 3 onto definitions 1 and 2. For science to advance mankind, the institution must be cognitively diverse, it must welcome debate and court refutation, and it must be transparent. The institutions surrounding lobotomies did none of these. Monstrous as Freeman may have been, he was not the main problem – at least not the main scientific problem – with lobotomies. This was bad institutional science, and to the extent that we have missed what was bad about it, it is ongoing bad science. There is much here to make your skin crawl that was missed by NPR, Offit’s Pandora’s Lab, and El-Hai’s The Lobotomist.

Background on Lobotomy

In 1935 António Egas Moniz (1874–1955) first used absolute alcohol to destroy the frontal lobes of a patient. The Nobel Committee called it one of the most important discoveries ever made in psychiatric medicine, and Moniz became a Nobel laureate in 1949. In two years Moniz oversaw about 40 lobotomies. He failed to report cases of vomiting, diarrhea, incontinence, hunger, kleptomania, disorientation, and confusion about time in postoperative patients who lacked these conditions before surgery. When the surgery didn’t help the schizophrenia or whatever condition it was done to cure, Moniz said the patients’ conditions had been too advanced before the surgery.

In 1936 neurologist Walter Freeman, having seen Moniz’s work, ordered the first American lobotomy. James Watts of George Washington University Hospital performed the surgery by drilling holes in the side of the skull and removing a bit of brain. Before surgery, Freeman lied about the procedure to the patient, who was concerned that her head would be shaved. She didn’t consent, but her husband did. The operation was done anyway, and Freeman declared success. He was on the path to stardom.

The patient, Alice Hammatt, reported being happy as she recovered. A week after the operation, she developed trouble communicating, was disoriented, and experienced anxiety, the condition the lobotomy was intended to cure. Freeman presented the case at a medical association meeting, calling the patient cured. In that meeting, Freeman was surprised to find that he faced criticism. He contacted the local press and offered an exclusive interview. He believed that the press coverage would give him a better reception at his next professional lobotomy presentation.

By 1952, 18,000 lobotomies had been performed in the US, 3000 of which Freeman claimed to have done. He began doing them himself, despite having no training in surgery, after Watts cut ties because of Freeman’s lack of professionalism and sterilization. Technically, Freeman was allowed to perform the kind of lobotomies he had switched to, because it didn’t involve cutting. Freeman’s new technique involved using a tool resembling an ice pick. Most reports say it was a surgical orbitoclast, though Freeman’s son Frank reported in 2005 that his father’s tool came right out of their kitchen cabinet. Freeman punched a hole through the eye sockets into the patient’s frontal lobes. He didn’t wear gloves or a mask. West Virginians received a disproportionate share of lobotomies. At the state hospital in Weston, Freeman reports 225 lobotomies in twelve days, averaging six minutes per procedure. In Last Resort: Psychosurgery and the Limits of Medicine (1998), Jack Pressman reports a 14% mortality rate in Freeman’s operations.

The Press at Fault?

The press is at the center of most modern coverage of lobotomies. In Pandora’s Lab, Offit, as in other recent coverage, implies that the press overwhelmingly praised the procedure from day one. Offit reports that a front page article in the June 7, 1937 New York Times “declared – in what read like a patent medicine advertisement – that lobotomies could relieve ‘tension, apprehension, anxiety, depression, insomnia, suicidal ideas, …’ and that the operation ‘transforms wild animals into gentle creatures in the course of a few hours.’”

I read the 1937 Times piece as far less supportive. In the above nested quote, The Times was really just reporting the claims of the lobotomists. The headline of the piece shows no such blind faith: “Surgery Used on the Soul-Sick; Relief of Obsessions Is Reported.” The article’s subhead reveals significant clinical criticism: “New Brain Technique Is Said to Have Aided 65% of the Mentally Ill Persons on Whom It Was Tried as Last Resort, but Some Leading Neurologists Are Highly Skeptical of It.”

The opening paragraph is equally restrained: “A new surgical technique, known as ‘psycho-surgery,’ which, it is claimed, cuts away sick parts of the human personality, and transforms wild animals into gentle creatures in the course of a few hours, will be demonstrated here tomorrow at the Comprehensive Scientific Exhibit of the American Medical Association…”

Offit characterizes medical professionals as being generally against the practice and the press as being overwhelmingly in support, a portrayal echoed in NPR’s 2005 coverage. I don’t find this to be the case. By Freeman’s records, most of his lobotomies were performed in hospitals. Surely the administrators and staff of those hospitals were medical professionals, so they couldn’t all be against the procedure. In many cases, parents, husbands, and doctors ordered lobotomies without consent of the patient and, in the case of institutionalized minors, sometimes without consent of the parents. The New England Journal of Medicine approved of lobotomy, but an editorial in the 1941 Journal of the American Medical Association listed the concerns of five distinguished critics. As discussed below, two sub-communities of clinicians may have held opposing views, and the enthusiasm of the press has been overstated.

In a 2022 paper, Lessons to be learnt from the history of lobotomy, Oivind Torkildsen of the Department of Clinical Medicine at University of Bergen wrote that “the proliferation of the treatment largely appears to have been based on Freeman’s charisma and his ability to enthuse the public and the news media.” Given that lobotomies were mostly done in hospitals staffed by professionals ostensibly schooled in and practicing the methods of science, this seems a preposterous claim. Clinicians would not be swayed by tabloids.

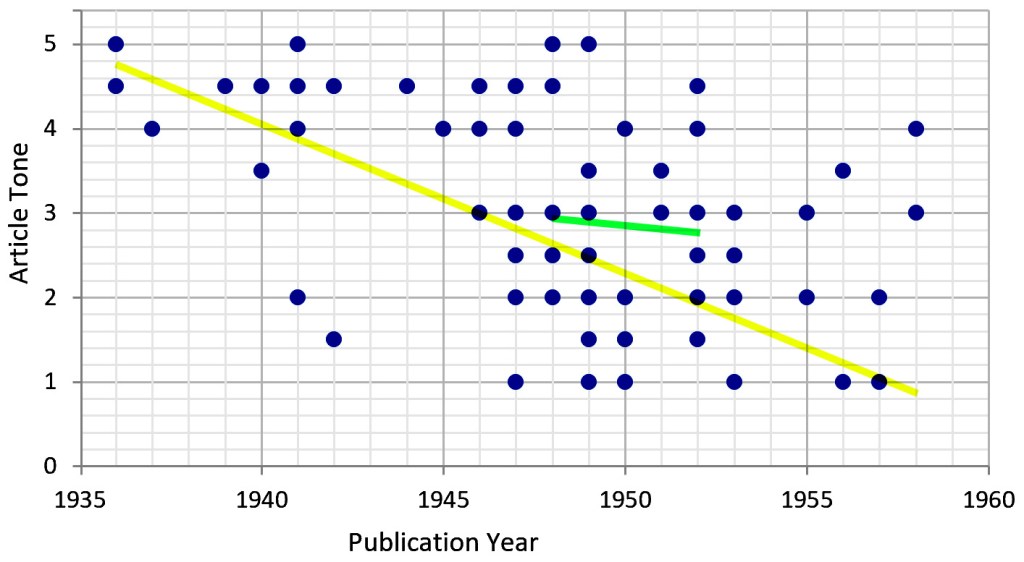

A 1999 article by GJ Diefenbach in the Journal of the History of the Neurosciences, Portrayal of Lobotomy in the Popular Press: 1935-1960, found that the press initially used uncritical, sensational reporting styles, but became increasingly negative in later years. The article also notes that lobotomies faced considerable opposition in the medical community. It concluded that the popular press may have been a factor influencing the quick and widespread adoption of lobotomy.

The article’s approach was to randomly distribute articles to two evaluators for quantitative review. The reviewers then rated the tone of the article on a five-point scale. I plotted its data, and a linear regression (yellow line below) indeed shows that the non-clinical press cooled on lobotomies from 1936 to 1958 (though, as is apparent from the broad data scatter, linear regression doesn’t tell the whole story). But the records, spotty as they are, of when the bulk of lobotomies were performed should also be considered. Of the 20,000 US lobotomies, 18,000 of them were done in the 5-year period from 1948 to 1952, the year that phenothiazines entered psychiatric clinical trials. A linear regression of the reviewers’ judgements over that period (green line) shows little change.
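For readers who want to repeat the comparison of the two trend lines, here is a minimal sketch of an ordinary least-squares fit over the whole period and over a sub-window of years. The function names (`fit_line`, `fit_window`) and the demo values are my own assumptions for illustration. The demo values are invented placeholders and are not Diefenbach’s ratings.

```python
from typing import List, Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need two or more points and equal-length inputs")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def fit_window(xs: Sequence[float], ys: Sequence[float],
               start: float, end: float) -> Tuple[float, float]:
    """Fit only the points whose x value lies in [start, end]."""
    pairs: List[Tuple[float, float]] = [
        (x, y) for x, y in zip(xs, ys) if start <= x <= end
    ]
    return fit_line([p[0] for p in pairs], [p[1] for p in pairs])


# MADE-UP placeholder values (hypothetical), chosen only to show how a
# falling overall trend can coexist with a flat trend inside a window.
demo_years = [1936, 1940, 1944, 1948, 1950, 1952, 1956, 1958]
demo_tone = [5.0, 4.5, 4.0, 3.0, 3.0, 3.0, 2.0, 1.5]

overall_slope, _ = fit_line(demo_years, demo_tone)
window_slope, _ = fit_window(demo_years, demo_tone, 1948, 1952)
print("overall slope per year:", overall_slope)
print("1948-1952 slope per year:", window_slope)
```

With real ratings in place of the placeholders, comparing the two slopes is the same comparison as the yellow and green lines described above. The fit says nothing about scatter, which is why the regression alone does not tell the whole story.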

Applying the Methods of History and Philosophy of Science

One possibility for making sense of media coverage at the time, the occurrence of lobotomies, and the current perception of why lobotomies persisted despite opposition in the medical community is to distinguish lobotomies done in state hospitals from those done in private hospitals or psychiatrists’ offices. The latter category dominated the press in the 1940s and modern media coverage. The tragic case of Rosemary Kennedy, whose lobotomy left her institutionalized and abandoned by her family, and that of Howard Dully are far better known than the 18,000 lobotomies done in American asylums. Americans were not as in love with lobotomies as modern press reports suggest. The latter category, private hospital lobotomies, while including some high-profile cases, was small compared to the former.

Between 1936 and 1947, only about 1000 lobotomies had been performed in the US, despite Walter Freeman’s charisma and self-promotion. We, along with Offit and NPR, are far more eager to assign blame to Walter Freeman the monster than to consider that the relevant medical communities and institutions may have been monstrous by failing to critically review their results during the lobotomy boom years.

This argument requires me to reconcile the opposition to lobotomies appearing in medical journals from 1936 on with the blame I’m assigning to that medical community. I’ll start by noting that while clinical papers on lobotomy were plentiful (about 2000 between 1936 and 1952), the number of such papers that addressed professional ethics or moral principles was shockingly small. Jan Frank, in Some Aspects of Lobotomy (Prefrontal Leucotomy) under Psychoanalytic Scrutiny (Psychiatry 13:1, 1950) reports a “conspicuous dearth of contributions to the theme.” Constance Holden, in Psychosurgery: Legitimate Therapy or Laundered Lobotomy? (Science, Mar. 16, 1973), concluded that by 1943, medical consensus was against lobotomy, and that is consistent with my reading of the evidence.

Enter Polanyi and the Kuhnians

In 2005, Dr. Elliot Valenstein (1923-2023), 1986 author of Great and Desperate Cures: The Rise and Decline of Psychosurgery, in commenting on the Dully story, stated flatly that “people didn’t write critical articles.” Referring back to Michael Polanyi’s thesis, the medical community failed itself and the world by doing bad science – in the sense that suppression of opposing voices, whether through fear of ostracization or from fear of retribution in the relevant press, destroyed the “market’s” ability to get to the truth.

By 1948, the popular lobotomy craze had waned, as is shown in Diefenbach’s data above, but the institutional lobotomy boom had just begun. It was tucked away in state mental hospitals, particularly in California, West Virginia, Virginia, Washington, Ohio, and New Jersey.

Jack Pressman, in Last Resort: Psychosurgery and the Limits of Medicine (1998), seems to hit the nail on the head when he writes “the kinds of evaluations made as to whether psychosurgery worked would be very different in the institutional context than it was in the private practice context.”

Doctors in asylums and mental hospitals lived in a wholly different paradigm from those in for-profit medicine. Funding in asylums was based on patient count rather than medical outcome. Asylums were allowed to perform lobotomies without the consent of patients or their guardians, to whom they could refuse visitation rights.

While asylum administrators usually held medical or scientific degrees, their roles as administrators in poorly funded facilities altered their processing of the evidence on lobotomies. Asylum administrators had a stronger incentive than private practices to use lobotomies because their definitions of successful outcome were different. As Freeman wrote in a 1957 follow-up of 3000 patients, lobotomized patients “become docile and are easier to manage”. Success in the asylum was not a healthier patient, it was a less expensive patient. The promise of a patient’s being able to return to life outside the asylum was a great incentive for administrators on tight budgets. If those administrators thought lobotomy was ineffective, they would have had no reason to use it, regardless of their ethics. The clinical press had already judged it ineffective, but asylum administrators’ understanding of effectiveness was different from that of clinicians in private practice.

Pressman cites the calculus of Dr. Mesrop Tarumianz, administrator of Delaware State Hospital: “In our hospital, there are 1,250 cases and of these about 180 could be operated on for $250 per case. That will constitute a sum of $45,000 for 180 patients. Of these, we will consider that 10 percent, or 18, will die, and a minimum of 50 percent of the remaining, or 81 patients will become well enough to go home or be discharged. The remaining 81 will be much better and more easily cared for in the hospital… That will mean a savings of $351,000 in a period of ten years.”
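The arithmetic in that quote is easy to check. The sketch below uses only the figures Tarumianz states; the function and parameter names are my own and purely illustrative. It does not try to reproduce the $351,000 ten-year savings, because the quote as given elides the per-patient upkeep figures that number would depend on.

```python
def tarumianz_projection(candidates: int = 180,
                         cost_per_case: int = 250,
                         death_pct: int = 10,
                         discharge_pct: int = 50) -> dict:
    """Recompute the outcome counts stated in the quoted passage.

    Integer percentages are used so the results are exact counts.
    """
    total_cost = candidates * cost_per_case             # dollars spent on surgery
    deaths = candidates * death_pct // 100              # expected operative deaths
    survivors = candidates - deaths
    discharged = survivors * discharge_pct // 100       # "well enough to go home"
    remaining = survivors - discharged                  # kept, but "more easily cared for"
    return {
        "total_cost": total_cost,
        "deaths": deaths,
        "survivors": survivors,
        "discharged": discharged,
        "remaining": remaining,
    }


projection = tarumianz_projection()
print(projection)
```

The stated numbers are internally consistent: 180 cases at $250 is $45,000, 10 percent of 180 is 18, and half of the 162 survivors is 81. Note what the ledger treats as a line item: 18 expected deaths sit in the same column as the discharges.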

The point here is not that these administrators were monsters without compassion for their patients. The point is that significant available evidence existed to conclude that lobotomies were somewhere between bad and terrible for patients, and that this evidence was not processed by asylum administrators in the same way it was in private medical practice.

The lobotomy boom was enabled by sensationalized headlines in the popular press, tests run without control groups, ridiculously small initial sample sizes, vague and speculative language by Moniz and Freeman, cherry-picked – if not outright false – trial results, and complacence in peer review. Peer review is meaningless unless it contains some element of competition.

Some might call lobotomies a case of conflict of interest. To an extent that label fits, not so much in the sense that anyone derived much personal benefit in their official capacity, but in that the aims and interests of the involved parties – patients and clinicians – were horribly misaligned.

The roles of asylum administrators – recall that they were clinicians too – did not cause them to make bad decisions about ethics. Their roles caused and allowed them to make bad decisions about lobotomy effectiveness, which was an ethics violation because it was bad science. Different situations in different communities – private and state practices – led intelligent men, interpreting the same evidence, to reach vastly different conclusions about pounding holes in people’s faces.

It will come as no surprise to my friends that I will once again invoke Paul Feyerabend: if science is to be understood as an institution, there must be separation of science and state.

___

Epilogical fallacies

A page on the official website of the Nobel Prize still defends the prize awarded to Moniz. It uncritically accepts Freeman’s statistical analysis of outcomes, e.g., 2% of patients became worse after the surgery.

…

Wikipedia reports that 60% of US lobotomy patients were women. Later in the same article it reports that 40% of US lobotomies were done on gay men. Thus, per Wikipedia, 100% of US male lobotomy patients were gay. Since 18,000 of the 20,000 lobotomies done in the US were in state mental institutions, we can conclude that mental institutions in 1949-1951 overwhelmingly housed gay men. Histories of mental institutions, even those most critical of the politics of deinstitutionalization, e.g. Deinstitutionalization: A Psychiatric Titanic, do not mention gay men.
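The implied figure can be spelled out in a few lines. This is only a sketch of the arithmetic, taking the two percentages at face value as shares of all US lobotomy patients; the function name is my own.

```python
from fractions import Fraction


def implied_share_of_men(women_share: Fraction, subgroup_share: Fraction) -> Fraction:
    """Share of male patients that a male-only subgroup would make up.

    Both inputs are shares of ALL patients, expressed as exact fractions.
    """
    men_share = 1 - women_share
    if men_share <= 0:
        raise ValueError("no male patients left to divide among")
    return subgroup_share / men_share


# 60% women and 40% gay men, both as shares of all patients
share = implied_share_of_men(Fraction(60, 100), Fraction(40, 100))
print(share)
```

Exact fractions avoid floating-point rounding, so the result is exactly 1: every male patient would have to be a gay man for both figures to hold at once.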

…

Elliot Valenstein, cited above, wrote in a 1987 Orlando Sentinel editorial that all the major factors that shaped the lobotomy boom are still with us today: “desperate patients and their families still are willing to risk unproven therapies… Ambitious doctors can persuade some of the media to report untested cures with anecdotal ‘research’… it could happen again.” Now let’s ask ourselves, is anything equivalent going on today, any medical fad propelled by an uncritical media and a single individual or small cadre of psychiatrists, anything that has been poorly researched and might lead to disastrous outcomes? Nah.

Extraordinary Miscarriages of Science, Part 2 – Creation Science

Posted by Bill Storage in History of Science on January 21, 2024

Creation Science can refer either to young-earth or old-earth creation theories. Young Earth Creationism (YEC) makes specific claims about the creation of the universe from nothing, the age of the earth as inferred from the Book of Genesis, and the creation of separate “kinds” of creatures. Wikipedia’s terse coverage, as with Lysenkoism, brands it a pseudoscience without explanation. But YEC makes bold, falsifiable claims about biology and genetics (not merely evolution), geology (plate tectonics or lack thereof), and, most significantly, Newtonian mechanics. While it posits unfalsifiable unobservables including a divinity that sculpts the universe in six days, much of its paradigm contrasts with modern physics in testable ways. Creation Science is not a miscarriage of science in the sense of some of the others. I’m covering it here because it has many similarities to other bad sciences and is a great test of demarcation criteria. Creation Science does limited harm because it preaches to the choir. I doubt anyone ever joined a cult because they were persuaded that creationism is scientific.

Intelligent Design

Old-earth creationism, now known as Intelligent Design (ID) theory, is much different. While ID could have confined itself to the realm of metaphysics and stayed out of our cross hairs, it did not. ID mostly confines itself to the realm of descriptions and explanations, but it explicitly claims to be a science. Again, Wikipedia brands ID as pseudoscience, and, again, this distinction seems shallow. I’m also concerned that the label is rooted in anti-Christian bias with reasons invented after the labelling as a rationalization. To be clear, I see nothing substantial in ID that is scientific, but its opponents’ arguments are often not much better than those of its proponents.

It might be true that a supreme being, benevolent or otherwise, guided the hand of cosmological and biological evolution. But simpler, adequate explanations of those processes exist outside of ID, and ID adds no explanatory power to the theories of cosmology and biology that are independent of it. This was not always the case. The US founding fathers, often labeled Christian by modern Christians, were not Christian at all. They were deists, believing in a creator who had little interest in earthly affairs, mainly because they lacked a theoretical framework to explain the universe without one. They accepted the medieval idea that complex organisms, like complex mechanisms, must have a designer. Emergent complexity wasn’t seen as an option. That they generally – notably excepting David Hume – failed to see the circularity of this “teleological argument” can likely be explained by Kuhn’s notion of the assent of the relevant community. Each of them bought it because they all bought it. It was the reigning paradigm.

While intelligent design could logically be understood to not require a Judeo-Christian god, ID seems to have emerged out of fundamentalist Christian objection to teaching evolution in public schools. Logically, “intelligent design” could equally apply to theories involving a superior but not supreme creator or inventor. Space aliens may have seeded the earth with amino acids – the Zoo Hypothesis. Complex organic molecules could have been sent to earth on a comet by highly advanced – and highly patient – aliens, something we might call directed panspermia. Or we could be living in a computer simulation of an alien school kid. Nevertheless, ID seems to be a Christian undertaking positing a Christian God.

Opponents are quick to point this out. ID is motivated by Christian sentiments and is closely aligned with Christian evangelism. Is this a fair criticism of ID as a science? I tend to think not. Newton was strongly motivated by Christian beliefs, though his religion, something like Arianism or Unitarianism, would certainly be rejected by modern Christians. Regardless, Newton’s religious motivation for his studies no more invalidates them than Linus Pauling’s (covered below) economic motivations invalidate his work. Motivations of practitioners, in my view, cannot be grounds for calling a field of inquiry pseudoscience or bad science. Some social scientists disagree.

Dominated by Negative Arguments

YEC and ID writings focus on arguing that much of modern science, particularly evolutionary biology, cannot be correct. For example, much of YEC’s efforts are directed at arguing that the earth cannot be 4.5 billion years old. Strictly speaking, this (the theory that another theory is wrong) is a difficult theory to disprove. Most scientists tend to think that disproving a theory that itself aims to disprove geology is pointless. They hold that the confirming evidence for modern geologic theory is sufficient. Karl Popper, who held that absence of disconfirmation was the sole basis for judging a theory good, would seem to have a problem with this though. YEC also holds theories defending a single worldwide flood within the last 5,000 years. That seems reasonably falsifiable, if one accepts a large body of related science including several radioactive dating techniques, mechanics of solids, denudation rate calculations, and much more.

Further, it is flawed reasoning (“false choice”) to think that exposing a failure of classical geology is support for a specific competing theory.

YEC and, perhaps surprisingly, much of ID have assembled a body of negative arguments against Darwinism, geology, and other aspects of a naturalistic worldview. Arguing that fossil evidence is an insufficient basis for evolution and that natural processes cannot explain the complexity of the eyeball are characteristically negative arguments. This raises the question of whether a bunch of negative arguments can rightly be called a science. While Einstein started with the judgement that the wave theory of light could not be right (he got the idea from Maxwell), his program included developing a bold, testable, and falsifiable theory that posited that light was something that came in discrete packages, along with predictions about how it would behave in a variety of extreme circumstances. Einsteinian relativity gives us global positioning and useful tools in our cell phones. Creationism’s utility seems limited to philosophical realms. Is lack of practical utility or observable consequences a good basis for calling an endeavor unscientific? See String Theory, below.

Wikipedia (you might guess that I find Wikipedia great for learning the discography of Miley Cyrus but poor for serious inquiries), appealing to “consensus” and “the scientific community,” judges Creation Science to be pseudoscience because creationism invokes supernatural causes. In the same article, it decries the circular reasoning of ID’s argument from design (the teleological argument). But claiming that Creation Science invokes supernatural causes is equally circular unless we’re able to draw the natural/supernatural distinction independently from the science/pseudoscience distinction. Creationists hold that creation is natural; that’s their whole point.

Ignoring Disconfirming Evidence

YEC proponents seem to refuse to allow that any amount of radioactive dating evidence falsifies their theory. I’m tempted to say this alone makes YEC either a pseudoscience or just terrible science. But doing so would force me to accept the 2nd and 3rd definitions of science that I gave in the previous post. In other words, I don’t want to judge a scientific inquiry’s status (or even the status of a non-scientific one) on the basis of what its proponents (a community or institution) do at an arbitrary point in time. Let’s judge the theory, not its most vocal proponents. A large body of German physicists denied that Eddington’s measurement confirmed Einstein’s prediction of bent light rays during an eclipse because they rejected Jewish physics. Their hardheadedness is no reason to call their preferred wave theory of light a bad theory. It was a good theory with bad adherents, a good theory that we now have excellent reasons to judge wrong.

Some YEC proponents hold that, essentially, the fossil record is God’s little joke. Indeed it is possible that when God created the world in six days a few thousand years ago he laid down a lot of evidence to test our faith. The ancient Christian writer Tertullian argued that Satan traveled backward in time to plant evidence against Christian doctrine (more on him soon). It’s hard to disprove. The possibility of deceptive evidence is related to the worry expressed by Hume and countless science fiction writers that the universe, including fossils and your memories of today’s breakfast, could have been planted five minutes ago. Like the Phantom Time hypothesis, it cannot be disproved. Also, as with Phantom Time, we have immense evidence against it. And from a practical perspective, nothing in the future would change if it were true.

Lakatos Applied to Creation Science

Lakatos might give us the best basis for rejecting Creation Science as pseudoscience rather than as an extraordinarily bad science, if that distinction has any value, which it might in the case of deciding what can be taught in elementary school. (We have no laws against unsuccessful theories or poor science.) Lakatos was interested in how a theory makes use of laws of nature and what its research agenda looks like. Laws of nature are regularities observed in nature so widely that we assume them to be true, contingently, and ground predictions about nature on them. Creation Science usually has little interest in making testable predictions about nature or the universe on the basis of such laws. Dr. Duane Gish of the Institute for Creation Research (ICR) wrote in Evolution, The Fossils Say No that “God used processes which are not now operating anywhere in the natural universe.” This is a major point against Creation Science counting as science.

Creation Science’s lack of testable predictions might not even be a fair basis for judging a pursuit to be unscientific. Botany is far more explanatory than predictive, and few of us, including Wikipedia, are ready to expel botany from the science club.

Most significant for me, Lakatos casts doubt on Creation Science by the thinness of its research agenda. A look at the ICR’s site reveals a list of papers and seminars all by PhDs and MDs. They seem to fall in two categories: evolution is wrong (discussed above), and topics that are plausible but that don’t give support for creationism in any meaningful way. The ploy here is playing a game with the logic of confirmation.

By the Will of Elvis

Consider the following statement of hypothesis. Everything happens by the will of Elvis. Now this statement, if true, logically ensures that the following disjunctive statement is true: Either everything happens by the will of Elvis or all cats have hearts. Now let’s go out with a stethoscope and do some solid cat science to gather empirical evidential support for all cats having hearts. This evidence gives us reasonable confidence that the disjunctive statement is true. Since the original simple hypothesis logically implies the disjunction, evidence that cats have hearts gives support for the hypothesis that everything happens by the will of Elvis. This is a fun game (like Hempel’s crows) in the logic of confirmation, and those who have studied it will instantly see the ruse. But ICR has dedicated half its research agenda to it, apparently to deceive its adherents.
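The ruse can be made concrete with a toy probability model. The sketch below is only an illustration under loud assumptions: the prior of 1/100 for the Elvis hypothesis, the prior of 1/2 for cats having hearts, and the independence of the two statements are all invented for the example, and the helper names are hypothetical. It shows that stethoscope evidence raises the probability of the disjunction to certainty while leaving the probability of the Elvis hypothesis exactly where it started.

```python
from fractions import Fraction
from itertools import product

# Assumed priors, chosen only for illustration.
P_ELVIS = Fraction(1, 100)  # "everything happens by the will of Elvis"
P_CATS = Fraction(1, 2)     # "all cats have hearts", before any cat science

# Joint distribution over (elvis, cats), assuming the two are independent.
joint = {
    (elvis, cats): (P_ELVIS if elvis else 1 - P_ELVIS)
    * (P_CATS if cats else 1 - P_CATS)
    for elvis, cats in product([True, False], repeat=2)
}


def prob(event, given=lambda elvis, cats: True):
    """Probability of `event`, conditioned on `given`, under the joint above."""
    numerator = sum(p for world, p in joint.items() if given(*world) and event(*world))
    denominator = sum(p for world, p in joint.items() if given(*world))
    return numerator / denominator


def hypothesis(elvis, cats):
    return elvis


def disjunction(elvis, cats):
    return elvis or cats


def cat_evidence(elvis, cats):
    return cats


print("P(Elvis or cats)        =", prob(disjunction))
print("P(Elvis or cats | cats) =", prob(disjunction, given=cat_evidence))
print("P(Elvis)                =", prob(hypothesis))
print("P(Elvis | cats)         =", prob(hypothesis, given=cat_evidence))
```

Under these assumptions the disjunction goes from 101/200 to 1 once the cats are examined, while the Elvis hypothesis stays at 1/100. Support for a statement that a hypothesis entails does not automatically flow back to the hypothesis.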

The creationist research agenda is mostly aimed at negating evolution and at large philosophical matters. Where it deals with small and specific scientific questions – analogous to cat hearts in the above example – the answers to those questions don’t in any honest sense provide evidentiary support for divine creation.

If anything fails the test of being valid science, Creation Science does. Yet popular arguments that attempt to logically dismiss it from the sciences seem prejudiced or ill motivated. As discussed in the last post, fair and honest demarcation is not so simple. This may be a case where we have to take the stance of Justice Potter Stewart, who, when judging whether the film The Lovers was hard-core pornography, said “I shall not today attempt further to define [it] ... But I know it when I see it, and the motion picture involved in this case is not that.”

To be continued.

Extraordinary Popular Miscarriages of Science (part 1)

Posted by Bill Storage in History of Science, Uncategorized on January 18, 2024

By Bill Storage, Jan. 18, 2024

I’ve been collecting examples of bad science. Many came from friends and scientists I’ve talked to. Bad science can cover several aspects of science depending on what one means by science. At least three very different things are called science now:

1. An approach or set of rules and methods used to understand and predict nature

2. A body of knowledge about nature and natural processes

3. An institution, culture or community of people, including academic, government and corporate professionals, who are involved, or are said to be involved, in 1. or 2. above

Many of my examples of bad science fall under the 3rd category and involve, or are dominated by, the academicians, government offices, and corporations. Below are a few of my favorites from the past century or so. I think many people tend to think that bad science happened in medieval times and that the modern western world is immune to that sort of thing. On the contrary, bad science may be on the rise. For the record, I don’t judge a theory bad merely because it was shown to be wrong, even if spectacularly wrong. Geocentricity was a good theory. Phlogiston (17th century theoretical substance believed to escape from matter during combustion), caloric theory (18th century theory of a weightless fluid that flows from hot matter to cold), and the luminiferous ether (17-19th century postulated medium for the propagation of light waves) were all good theories, though we now have robust evidence against them. All had substantial predictive power. All posited unobservable entities to explain phenomena. But predictive success alone cannot justify belief in unobservable entities. Creation science and astrology were always bad science.

To clarify the distinction between bad science and wrong theories, consider Trofim Lysenko. He was nominally a scientist. Some of his theories appear to be right. He wore the uniform, held the office, and published sciencey papers. But he did not behave scientifically (consistent with definition 1 above) when he ignored the boundless evidence and prior art about heredity. Wikipedia dubs him a pseudoscientist, despite his having some successful theories and making testable hypotheses. Pseudoscience, says Wikipedia, makes unfalsifiable claims. Lysenko’s bold claims were falsifiable, and they were falsified. Wikipedia talks as if the demarcation problem – knowing science from pseudoscience – is a closed case. Nah. Rather than tackle that matter of metaphysics and philosophy, I’ll offer that Lysenkoism, creation science, and astrology are all sciences, but they are bad science. While they all make some testable predictions, they also make a lot of vague ones, their interest in causation is puny, and their research agendas are scant.

Good science entails testable, falsifiable theories and bold predictions. Most philosophers of science, notably excluding Karl Popper, who thought that only withstanding falsification mattered, have held that making succinct, correct predictions makes a theory good, and that successful theories make for good science. Larry Laudan gave, in my view, a fine definition of a successful theory in his 1984 Philosophy of Science: A theory is successful provided it makes substantially more correct predictions, that it leads to efficacious interventions in the natural order, or that it passes a suitable battery of tests.

Concerns over positing unobservables open a debate on the question of just how observable are electrons, quarks, and the Higgs Field. Not here though. I am more interested in bad science (in the larger senses of science) than in wrong theories. Badness often stems not from seeking to explain and predict nature and failing out of refusal to read the evidence fairly, but from cloaking a non-scientific agenda in the trappings of science. I’m interested in what Kuhn, Feyerabend, and Lakatos dealt with – the non-scientific interests of academicians, government offices, and corporations and their impact on what gets studied and how it gets studied, how confirming evidence is sought and processed, how disconfirming evidence is processed, avoided, or dismissed, and whether Popperian falsifiability was ever on the table.

Recap of Kuhn, Feyerabend, and Lakatos

Thomas Kuhn claimed that normal (day-to-day lab-coat) science consisted of showing how nature can be fit into the existing theory. That is, normal science is decidedly unscientific. It is bad science, aimed at protecting the reigning paradigm from disconfirming evidence. On Kuhn’s view, your scientific education teaches you how to see things as your field requires them to be seen. He noted that medieval and renaissance astronomers never saw the supernovae that were seen in China. Europeans “knew” that the heavens were unchanging. Kuhn used the terms dogma and indoctrination to piss off scientists of his day. He thought that during scientific crises (Newton vs. Einstein being the exemplar) scientists clutched at new theories, often irrationally, and then vicious competition ended when scientific methods determined the winner of a new paradigm. Kuhn was, unknown to most of his social-science groupies, a firm believer that the scientific enterprise ultimately worked. Kuhn says normal science is bad science. He thought this was okay because crisis science reverted to good science, and in crisis, the paradigm was overthrown when the scientists got interested in philosophy of science. When Kuhn was all the rage in the early 1960s, radical sociologists of science, all at the time very left leaning, had their doubts that science could stay good under the influence of government and business. Recall worries about the military industrial complex. They thought that interest, whether economic or political, could keep science bad. I think history has sided with those sociologists; though today’s professional sociologists, now overwhelmingly employed by the US and state governments, are astonishingly silent on the matter. Granting, for sake of argument, that social science is science, its practitioners seem to be living proof that interest can dominate not only research agendas but what counts as evidence, along with the handling of evidence toward what becomes dogma in the paradigm.

Paul Feyerabend, though also no enemy of science, thought Kuhn stopped short of exposing the biggest problems with science. Feyerabend called science, referring to science as an institution, a threat to democracy. He called for “a separation of state and science just as there is a separation between state and religious institutions.” He thought that 1960s institutional science resembled more the church of Galileo’s day than it resembled Galileo. Feyerabend thought theories should be tested against each other, not merely against the world. He called institutional science a threat because it increasingly held complete control over what is deemed scientifically important for society. Historically, he observed, individuals, by voting with their attention and their dollars, have chosen what counts as being socially valuable. Feyerabend leaned rather far left. In my History of Science appointment at UC Berkeley I was often challenged for invoking him against bad-science environmentalism because Feyerabend wouldn’t have supported a right-winger. Such is the state of H of S at Berkeley, now subsumed by Science and Technology Studies, i.e., same social studies bullshit (it all ends in “Studies”), different pile. John Heilbron rest in peace.

Imre Lakatos had been imprisoned by Hungary’s Stalinist regime for revisionism. Through that experience he saw Kuhn’s assent of the relevant community as a valid criterion for establishing a new post-crisis paradigm as not much of a virtue. It sounded a bit too much like the totalitarian regimes he had lived under and risked becoming “mob psychology.” If the relevant community has excessive organizational or political power, it can put overpowering demands on individual scientists and force them to subordinate their ideas to the community (see String Theory’s attack on Lee Smolin below). Lakatos saw the quality of a science’s research agenda as a strong indicator of quality. Thin research agendas, like those of astrology and creation science, revealed bad science.

Selected Bad Science

Race Science and Eugenics

Eugenics is an all-time favorite, not just of mine. It is a poster child for evil-agenda science driven by a fascist. That seems enough knowledge of the matter for the average student of political science. But eugenics did not emerge from fascism and support for it was overwhelming in progressive circles, particularly in American universities and the liberal elite. Alfred Binet of IQ-test fame, H. G. Wells, Margaret Sanger, John Harvey Kellogg, George Bernard Shaw, Theodore Roosevelt, and apparently Oliver Wendell Holmes, based on his decision that compulsory sterilization was within a state’s rights, found eugenics attractive. Financial support for the eugenics movement included the Carnegie Foundation, Rockefeller Institute, and the State Department. Harvard endorsed it, as did Stanford’s first president, David S Jordan. Yale’s famed economist and social reformer Irving Fisher was a supporter. Most aspects of eugenics in the United States ended abruptly when we discovered that Hitler had embraced it and was using it to defend the extermination of Jews. Germany’s 1933 Law for the Prevention of Hereditarily Defective Offspring borrowed from the model sterilization law drawn up by our own Harry Laughlin. Eugenics was a classic case of advocates and activists, clueless of any sense of science, broadcasting that the science (the term “race science” exploded onto the scene as if it had always been a thing) had been settled. In an era where many Americans enjoy blaming the living – and some of the living enjoy accepting that blame – for the sins of our fathers, one wonders why these noble academic institutions have not come forth to offer recompense for their eugenics transgressions.

The War on Fat

In 1977 a Senate committee led by George McGovern published “Dietary Goals for the United States,” imploring us to eat less red meat, eggs, and dairy products. The U.S. Department of Agriculture (USDA) then issued its first dietary guidelines, which focused on cutting cholesterol and not only meat fat but fat from any source. The National Institutes of Health recommended that all Americans, including young kids, cut fat consumption. In 1980 the US government broadcast that eating less fat and cholesterol would reduce your risk of heart attack. Evidence then and ever since has not supported this edict. A low-fat diet was alleged to mitigate many metabolic risk factors and to be essential for achieving a healthy body weight. However, over the past 45 years, obesity in the US climbed dramatically while dietary fat levels fell. Europeans with higher fat diets, having the same genetic makeup, are far thinner. The science of low-fat diets and the tenets of related institutions like insurance, healthcare, and school lunches have seemed utterly immune to evidence. Word is finally trickling out. The NIH has not begged pardon.

The DDT Ban

Rachel Carson appeared before the Department of Commerce in 1963, asking for a “Pesticide Commission” to regulate DDT. Ten years later, Carson’s “Pesticide Commission” became the Environmental Protection Agency, which banned DDT in the US. The rest of the world followed, including Africa, which was bullied by European environmentalists and aid agencies to do so.

By 1960, DDT use had eliminated malaria from eleven countries. Crop production, land values, and personal wealth rose. In eight years of DDT use, Nepal’s malaria rate dropped from over two million to 2,500. Life expectancy rose from 28 to 42 years.

Malaria reemerged when DDT was banned. Since the ban, tens of millions of people have died from malaria. Following Rachel Carson’s Silent Spring narrative, environmentalists claimed that, with DDT, cancer deaths would have negated the malaria survival rates. No evidence supported this. It was fiction invented by Carson. The only type of cancer that increased during DDT use in the US was lung cancer, which correlated with cigarette use. But Carson instructed citizens and governments that DDT caused leukemia, liver disease, birth defects, premature births, and other chronic illnesses. If you “know” that DDT altered the structure of eggs, causing bird populations to dwindle, it is Carson’s doing.

Banning DDT didn’t save the birds, because DDT wasn’t the cause of US bird death as Carson reported. While bird populations had plunged prior to DDT’s first use, the bird death at the center of her impassioned plea never happened. We know this from bird count data and many subsequent studies. Carson, in her work at Fish and Wildlife Service and through her participation in Audubon bird counts, certainly knew that during US DDT use, the eagle population doubled, and robin, dove, and catbird counts increased by 500%. Carson lied like hell and we showered her with praise and money. Africans paid with their lives.

In 1969 the Environmental Defense Fund demanded a hearing on DDT. The 8-month investigation concluded DDT was not mutagenic or teratogenic. No cancer, no birth defects. It found no “deleterious effect on freshwater fish, estuarine organisms, wild birds or other wildlife.” Yet William Ruckelshaus, first director of the EPA, who never read the transcript, chose to ban DDT anyway. Joni Mitchell was thrilled. DDT was replaced by more harmful pesticides. NPR, the NY Times, and the Puget Sound Institute still report a “preponderance of evidence” of DDT’s dangers.

When challenged with the claim that DDT never killed kids, the Rachel Carson Landmark Alliance responded in 2017 that indeed it had. A two-year-old drank an ounce of 5% DDT in a solution of kerosene and died. Now there’s scientific integrity.

Vilification of Cannabis

I got this one from my dentist; I had never considered it before. White-collar, or rather, work-from-home, California potheads think this problem has been overcome. Far from it. Cannabis use violates federal law. Republicans are too stupid to repeal it, and Democrats are too afraid of looking like hippies. According to Quest Diagnostics, in 2020, 4.4% of workers failed their employers’ drug tests. Blue-collar Americans, particularly those who might be a sub-sub-subcontractor on a government project, are subject to drug tests. Testing positive for weed can cost you your job. So instead of partying on pot, the shop floor consumes immense amounts of alcohol, increasing its risk of accidents at work and in the car, harming its health, and raising its risk of hard drug use. In the admittedly small sense in which the concept of a gateway drug is valid, marijuana is probably not one and alcohol almost certainly is. Racism, big pharma lobbyists, and social control are typically blamed for keeping cannabis illegal. Governments may also have concluded that tolerating weed at the state level while maintaining federal prohibition is an optimal tax revenue strategy. Cannabis tolerance at state level appears to have reduced opioid use and opioid related ER admissions.

Stoners who scoff at anti-cannabis propaganda like Reefer Madness might be unaware that a strong correlation between psychosis and cannabis use has been known for decades. But inferring causation from that correlation was always either highly insincere (huh huh) or very bad science. Recent analysis of study participants’ genomes showed that those with the strongest genetic profile for schizophrenia were also more likely to use cannabis in large amounts. So unless you follow Lysenko, who thought acquired traits were passed to offspring, pot is unlikely to cause psychosis. When A and B correlate, either A causes B, B causes A, or C causes both, as appears to be the case with schizophrenic potheads.

To be continued.

Innumeracy and Overconfidence in Medical Training

Posted by Bill Storage in History of Science on May 4, 2020

Most medical doctors, having ten or more years of education, can’t do simple statistics calculations that they were surely able to do, at least for a week or so, as college freshmen. Their education has let them down, along with us, their patients. That education leaves many doctors unquestioning, unscientific, and terribly overconfident.

A disturbing lack of doubt has plagued medicine for thousands of years. Galen, at the time of Marcus Aurelius, wrote, “It is I, and I alone, who has revealed the true path of medicine.” Galen disdained empiricism. Why bother with experiments and observations when you own the truth. Galen’s scientific reasoning sounds oddly similar to modern junk science armed with abundant confirming evidence but no interest in falsification. Galen had plenty of confirming evidence: “All who drink of this treatment recover in a short time, except those whom it does not help, who all die. It is obvious, therefore, that it fails only in incurable cases.”

Galen was still at work 1500 years later when Voltaire wrote that the art of medicine consisted of entertaining the patient while nature takes its course. One of Voltaire’s novels also described a patient who had survived despite the best efforts of his doctors. Galen was around when George Washington died after five pints of bloodletting, a practice promoted up to the early 1900s by prominent physicians like Austin Flint.

But surely medicine was mostly scientific by the 1900s, right? Actually, 20th century medicine was dragged kicking and screaming to scientific methodology. In the early 1900’s Ernest Amory Codman of Massachusetts General proposed keeping track of patients and rating hospitals according to patient outcome. He suggested that a doctor’s reputation and social status were poor measures of a patient’s chance of survival. He wanted the track records of doctors and hospitals to be made public, allowing healthcare consumers to choose suppliers based on statistics. For this, and for his harsh criticism of those who scoffed at his ideas, Codman was tossed out of Mass General, lost his post at Harvard, and was suspended from the Massachusetts Medical Society. Public outcry brought Codman back into medicine, and much of his “end results system” was put in place.

20th century medicine also fought hard against the concept of controlled trials. Austin Bradford Hill introduced the concept to medicine in the mid 1920s. But in the mid 1950s Dr. Archie Cochrane was still fighting valiantly against what he called the God Complex in medicine, which was basically the ghost of Galen; no one should question the authority of a physician. Cochrane wrote that far too much of medicine lacked any semblance of scientific validation and knowing what treatments actually worked. He wrote that the medical establishment was hostile to the idea of controlled trials. Cochrane fought this into the 1970s, authoring Effectiveness and Efficiency: Random Reflections on Health Services in 1972.

Doctors aren’t naturally arrogant. The God Complex is passed along during the long years of an MD’s education and internship. That education includes rites of passage in an old boys’ club that thinks sleep deprivation builds character in interns, and that female med students should make tea for the boys. Once on the other side, tolerance of archaic norms in the MD culture seems less offensive to the inductee, who comes to accept the system. And the business of medicine, the way it’s regulated, and its control by insurance firms, pushes MDs to view patients as a job to be done cost-effectively. Medical arrogance is in a sense encouraged by recovering patients who might see doctors as savior figures.

As Daniel Kahneman wrote, “generally, it is considered a weakness and a sign of vulnerability for clinicians to appear unsure.” Medical overconfidence is encouraged by patients’ preference for doctors who communicate certainties, even when uncertainty stems from technological limitations, not from doctors’ subject knowledge. MDs should be made conscious of such dynamics and strive to resist inflating their self-importance. As Allan Berger wrote in Academic Medicine in 2002, “we are but an instrument of healing, not its source.”

Many in medical education are aware of these issues. The calls for medical education reform – both content and methodology – are desperate, but they are eerily similar to those found in a 1924 JAMA article, Current Criticism of Medical Education.

Covid19 exemplifies the aspect of medical education I find most vile. Doctors can’t do elementary statistics and probability, and their cultural overconfidence renders them unaware of how critically they need that missing skill.

A 1978 study, brought to the mainstream by psychologists like Kahneman and Tversky, showed how few doctors know the meaning of a positive diagnostic test result. More specifically, they’re ignorant of the relationship between the sensitivity and specificity (true positive and true negative rates) of a test and the probability that a patient who tested positive has the disease. This lack of knowledge has real consequences in certain situations, particularly when the base rate of the disease in a population is low. The resulting probability judgements can be wrong by factors of hundreds or thousands.

In the 1978 study (Casscells et al.) doctors and medical students at Harvard teaching hospitals were given a diagnostic challenge. “If a test to detect a disease whose prevalence is 1 out of 1,000 has a false positive rate of 5 percent, what is the chance that a person found to have a positive result actually has the disease?” As described, the test’s true negative rate is 95%; its true positive rate is not stated and is conventionally taken to be 100% (assuming 95% instead barely changes the result). This is a classic conditional-probability quiz from the second week of a probability class. Being right requires a) knowing Bayes’ Theorem, and b) being able to multiply and divide. Not being confidently wrong requires only one thing: scientific humility – the realization that all you know might be less than all there is to know. The correct answer is 2% – there’s a 2% likelihood the patient has the disease. The most common response, by far, in the 1978 study was 95%, which overstates the correct answer by a factor of nearly 50. Only 18% of doctors and med students gave the correct response. The study’s authors observed that in the group tested, “formal decision analysis was almost entirely unknown and even common-sense reasoning about the interpretation of laboratory data was uncommon.”
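For readers who want to check the arithmetic, here is a minimal sketch of the Bayes calculation. The function name and its parameters are my own, not from the study. The question as worded gives only the prevalence and the false positive rate, so the sensitivity (true positive rate) is an assumption; the sketch tries both 100% and 95%.

```python
def positive_predictive_value(prevalence, false_positive_rate, sensitivity=1.0):
    """Return P(disease | positive test) using Bayes' theorem.

    `sensitivity` is an assumed input: the quiz wording does not state it.
    """
    # Fraction of the whole population that is sick AND tests positive.
    true_positives = sensitivity * prevalence
    # Fraction of the whole population that is healthy AND tests positive.
    false_positives = false_positive_rate * (1.0 - prevalence)
    # Of everyone who tests positive, the share that is actually sick.
    return true_positives / (true_positives + false_positives)


# Quiz numbers: prevalence 1 in 1,000, false positive rate 5 percent.
ppv_perfect = positive_predictive_value(0.001, 0.05)                 # sensitivity assumed 100%
ppv_95 = positive_predictive_value(0.001, 0.05, sensitivity=0.95)    # sensitivity assumed 95%

print(f"Sensitivity 100%: {ppv_perfect:.1%}")
print(f"Sensitivity  95%: {ppv_95:.1%}")
```

Either assumption rounds to 2%. Out of every 1,000 people tested, roughly one is a true positive and about fifty are false positives, so a positive result still leaves the patient very unlikely to have the disease.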

As mentioned above, this story was heavily publicized in the 80s. It was widely discussed by engineering teams, reliability departments, quality assurance groups and math departments. But did it impact medical curricula, problem-based learning, diagnostics training, or any other aspect of the way med students were taught? One might have thought yes, if for no other reason than to avoid criticism by less prestigious professions having either the relevant knowledge of probability or the epistemic humility to recognize that the right answer might be far different from the obvious one.

Similar surveys were done in 1984 (David M Eddy) and in 2003 (Kahan, Paltiel) with similar results. In 2013, Manrai and Bhatia repeated Casscells’ 1978 survey with the exact same wording, getting trivially better results. 23% answered correctly. They suggested that medical education “could benefit from increased focus on statistical inference.” That was 35 years after Casscells, during which the phenomenon was popularized by the likes of Daniel Kahneman, from the perspective of base-rate neglect, by Philip Tetlock, from the perspective of overconfidence in forecasting, and by David Epstein, from the perspective of the tyranny of specialization.

Over the past decade, I’ve put the Casscells question to doctors I’ve known or met, where I didn’t think it would get me thrown out of the office or booted from a party. My results were somewhat worse. Of about 50 MDs, four answered correctly or were aware that they’d need to look up the formula but knew that it was much less than 95%. One was an optometrist, one a career ER doc, one an allergist-immunologist, and one a female surgeon – all over 50 years old, incidentally.

Despite the efforts of a few radicals in the Accreditation Council for Graduate Medical Education and some post-Flexnerian reformers, medical education remains, as Jonathan Bush points out in Where Does It Hurt?, basically a 2000-year-old subject-based and lecture-based model developed at a time when only the instructor had access to a book. Despite those reformers, basic science has actually diminished in recent decades, leaving many physicians with less of a grasp of scientific methodology than that held by Ernest Codman in 1915. Medical curriculum guardians, for the love of God, get over your stodgy selves and replace the calculus badge with applied probability and statistical inference from diagnostics. Place it in the curriculum later than pre-med, and weave it into some of that flipped-classroom, problem-based learning you advertise.

Paul Feyerabend, The Worst Enemy of Science

Posted by Bill Storage in History of Science, Philosophy of Science on December 10, 2019

“How easy it is to lead people by the nose in a rational way.”

A similarly named post I wrote on Paul Feyerabend seven years ago turned out to be my most popular post by far. Seeing it referenced in a few places has made me cringe, and made me face the fact that I failed to make my point. I’ll try to correct that here. I don’t remotely agree with the paper in Nature that called Feyerabend the worst enemy of science, nor do I side with the postmodernists that idolize him. I do find him to be one of the most provocative thinkers of the 20th century, brash, brilliant, and sometimes full of crap.

Feyerabend opened his profound Against Method by telling us to always remember that what he writes in the book does not reflect any deep convictions of his, but that his demonstrations “merely show how easy it is to lead people by the nose in a rational way.” I.e., he was more telling us what he thought we needed to hear than what he necessarily believed. In his autobiography he wrote that for Against Method he had used older material but had “replaced moderate passages with more outrageous ones.” Those using and abusing Feyerabend today have certainly forgotten what this provocateur, who called himself an entertainer, told us always to remember about him in his writings.

Any who think Feyerabend frivolous should examine the scientific rigor in his analysis of Galileo’s work. Any who find him to be an enemy of science should actually read Against Method instead of reading about him, as quotes pulled from it can be highly misleading as to his intent. My communications with some of his friends after he died in 1994 suggest that while he initially enjoyed ruffling so many feathers with Against Method, he became angered and ultimately depressed over both critical reactions against it and some of the audiences that made weapons of it. In 1991 he wrote, “I often wished I had never written that fucking book.”

I encountered Against Method searching through a library’s card catalog seeking an authority on the scientific method. I learned from Feyerabend that no set of methodological rules fits the great advances and discoveries in science. It’s obvious once you think about it. Pick a specific scientific method – say the hypothetico-deductive model – or any set of rules, and Feyerabend will name a scientific discovery that would not have occurred had the scientist, from Galileo to Feynman, followed that method, or any other.

Part of Feyerabend’s program was to challenge the positivist notion that in real science, empiricism trumps theory. Galileo’s genius, for Feyerabend, was allowing theory to dominate observation. In Dialogue Galileo wrote:

Nor can I ever sufficiently admire the outstanding acumen of those who have taken hold of this opinion and accepted it as true: they have, through sheer force of intellect, done such violence to their own senses as to prefer what reason told them over that which sensible experience plainly showed them to be the contrary.

For Feyerabend, against Popper and the logical positivists of the mid 1900’s, Galileo’s case exemplified a need to grant theory priority over evidence. This didn’t sit well with the empiricist leanings of the post-war western world. It didn’t sit well with most scientists or philosophers. Sociologists and literature departments loved it. It became fuel for the fire of relativism sweeping America in the 70’s and 80’s and for the 1990’s social constructivists eager to demote science to just another literary genre.

But in context, and in the spheres for which Against Method was written, many people – including Feyerabend’s peers from 1970 Berkeley, with whom I’ve had many conversations on the topic – conclude that the book’s goading style was a typical Feyerabendian corrective provocation to that era’s positivistic dogma.

Feyerabend distrusts the orthodoxy of the social practices of what Thomas Kuhn termed “normal science” – what scientific institutions do in their laboratories. Unlike their friend Imre Lakatos, Feyerabend distrusts any rule-based scientific method at all. Instead, Feyerabend praises scientific innovation and individual creativity. For Feyerabend, science in the mid 1900’s had fallen prey to the “tyranny of tightly-knit, highly corroborated, and gracelessly presented theoretical systems.” What would he say if alive today?

As with everything in the philosophy of science in the late 20th century, some of the disagreement between Feyerabend, Kuhn, Popper and Lakatos revolved around miscommunication and sloppy use of language. The best known case of this was Kuhn’s inconsistent use of the term paradigm. But they all (perhaps least so Lakatos) talked past each other by failing to differentiate different meanings of the word science, including:

- An approach or set of rules and methods for inquiry about nature

- A body of knowledge about nature

- An institution, culture or community of scientists, including academic, government and corporate

Kuhn and Feyerabend in particular vacillated between meaning science as a set of methods and science as an institution. Feyerabend certainly was referring to an institution when he said that science was a threat to democracy and that there must be “a separation of state and science just as there is a separation between state and religious institutions.” Along these lines Feyerabend thought that modern institutional science resembles more the church of Galileo’s day than it resembles Galileo.

On the matter of state control of science, Feyerabend went further than Eisenhower did in his “military industrial complex” speech, even with the understanding that what Eisenhower was describing was a military-academic-industrial complex. Eisenhower worried that a government contract with a university “becomes virtually a substitute for intellectual curiosity.” Feyerabend took this worry further, writing that university research requires conforming to orthodoxy and “a willingness to subordinate one’s ideas to those of a team leader.” Feyerabend disparaged Kuhn’s normal science as dogmatic drudgery that stifles scientific creativity.

A second area of apparent miscommunication about the history/philosophy of science in the mid 1900’s was the descriptive/normative distinction. John Heilbron, who was Kuhn’s grad student when Kuhn wrote Structure of Scientific Revolutions, told me that Kuhn absolutely despised Popper, not merely as a professional rival. Kuhn wanted to destroy Popper’s notion that scientists discard theories on finding disconfirming evidence. But Popper was describing ideally performed science; his intent was clearly normative. Kuhn’s work, said Heilbron (who doesn’t share my admiration for Feyerabend), was intended as normative only for historians of science, not for scientists. True, Kuhn felt that it was pointless to try to distinguish the “is” from the “ought” in science, but this does not mean he thought they were the same thing.

As with Kuhn’s use of paradigm, Feyerabend’s use of the term science risks equivocation. He drifts between methodology and institution to suit the needs of his argument. At times he seems to build a straw man of science in which science insists it creates facts as opposed to building models. Then again, on this matter (fact/truth vs. models as the claims of science) he seems to be more right about the science of 2019 than he was about the science of 1975.

While heavily indebted to Popper, Feyerabend, like Kuhn, grew hostile to Popper’s ideas of demarcation and falsification: “let us look at the standards of the Popperian school, which are still being taken seriously in the more backward regions of knowledge.” He eventually expanded his criticism of Popper’s idea of theory falsification to a categorical rejection of Popper’s demarcation theories and of Popper’s critical rationalism in general. Now from the perspective of half a century later, a good bit of the tension between Popper and both Feyerabend and Kuhn and between Kuhn and Feyerabend seems to have been largely semantic.

For me, Feyerabend seems most relevant today through his examination of science as a threat to democracy. He now seems right in ways that even he didn’t anticipate. He thought it a threat mostly in that science (as an institution) held complete control over what is deemed scientifically important for society. In contrast, people as individuals or small competing groups historically have chosen what counts as being socially valuable. In this sense science bullied the citizen, thought Feyerabend. Today I think we see a more extreme example of bullying, in the case of global warming for example, in which government and institutionalized scientists are deciding not only what is important as a scientific agenda but what is important as energy policy and social agenda. Likewise the role that neuroscience plays in primary education tends to get too much of the spotlight in the complex social issues of how education should be conducted. One recalls Lakatos’ concern about Kuhn’s confidence in the authority of “communities.” Lakatos had been imprisoned by Hungary’s Stalinist regime for revisionism. Through that experience he saw Kuhn’s “assent of the relevant community” as not much of a virtue if that community has excessive political power and demands that individual scientists subordinate their ideas to it.

As a tiny tribute to Feyerabend, about whom I’ve noted caution is due in removal of his quotes from their context, I’ll honor his provocative spirit by listing some of my favorite quotes, removed from context, to invite misinterpretation and misappropriation.

“The similarities between science and myth are indeed astonishing.”

“The church at the time of Galileo was much more faithful to reason than Galileo himself, and also took into consideration the ethical and social consequences of Galileo’s doctrine. Its verdict against Galileo was rational and just, and revisionism can be legitimized solely for motives of political opportunism.”

“All methodologies have their limitations and the only ‘rule’ that survives is ‘anything goes’.”

“Revolutions have transformed not only the practices their initiators wanted to change but the very principles by means of which… they carried out the change.”

“Kuhn’s masterpiece played a decisive role. It led to new ideas. Unfortunately it also led to lots of trash.”

“First-world science is one science among many.”

“Progress has always been achieved by probing well-entrenched and well-founded forms of life with unpopular and unfounded values. This is how man gradually freed himself from fear and from the tyranny of unexamined systems.”

“Research in large institutes is not guided by Truth and Reason but by the most rewarding fashion, and the great minds of today increasingly turn to where the money is — which means military matters.”

“The separation of state and church must be complemented by the separation of state and science, that most recent, most aggressive, and most dogmatic religious institution.”

“Without a constant misuse of language, there cannot be any discovery, any progress.”

__________________

Photos of Paul Feyerabend courtesy of Grazia Borrini-Feyerabend

Was Thomas Kuhn Right about Anything?

Posted by Bill Storage in History of Science, Philosophy of Science on September 3, 2016

William Storage – 9/1/2016

Visiting Scholar, UC Berkeley History of Science

Fifty years ago Thomas Kuhn’s The Structure of Scientific Revolutions armed sociologists of science, constructionists, and truth-relativists with five decades of cliche about the political and social dimensions of theory choice and scientific progress’s inherent irrationality. Science has bias, cries the social-justice warrior. Despite actually being a scientist – or at least holding a PhD in Physics from Harvard – Kuhn isn’t well received by scientists and science writers. They generally venture into history and philosophy of science as conceived by Karl Popper, the champion of the falsification model of scientific progress.

Kuhn saw Popper’s description of science as a self-congratulatory idealization for researchers. That is, no scientific theory is ever discarded on the first observation conflicting with the theory’s predictions. All theories have anomalous data. Dropping heliocentrism because of anomalies in Mercury’s orbit was unthinkable, especially when, as Kuhn stressed, no better model was available at the time. Einstein said that if Eddington’s experiment had not shown bending of light rays around the sun, “I would have had to pity our dear Lord. The theory is correct all the same.”

Kuhn was wrong about a great many details. Despite the exaggeration of scientific detachment by Popper and the proponents of rational-reconstruction, Kuhn’s model of scientists’ dogmatic commitment to their theories is valid only in novel cases. Even the Copernican revolution is overstated. Once the telescope was in common use and the phases of Venus were confirmed, the philosophical edifices of geocentrism crumbled rapidly in natural philosophy. As Joachim Vadianus observed, seemingly predicting the scientific revolution, sometimes experience really can be demonstrative.

Kuhn seems to have cherry-picked historical cases of the gap between normal and revolutionary science. Some revolutions – DNA and the expanding universe for example – proceeded with no crisis and no battle to the death between the stalwarts and the upstarts. Kuhn’s concept of incommensurability also can’t withstand scrutiny. It is true that Einstein and Newton meant very different things when they used the word “mass.” But Einstein understood exactly what Newton meant by mass, because Einstein had grown up a Newtonian. And if brought forth, Newton, while he never could have conceived of Einsteinian mass, would have had no trouble understanding Einstein’s concept of mass from the perspective of general relativity, had Einstein explained it to him.

Likewise, Kuhn’s language about how scientists working in different paradigms truly, not merely metaphorically, “live in different worlds” should go the way of mood rings and lava lamps. Most charitably, we might chalk this up to Kuhn’s terminological sloppiness. He uses “success terms” like “live” and “see,” where he likely means “experience visually” or “perceive.” Kuhn describes two observers, both witnessing the same phenomenon, but “one sees oxygen, where another sees dephlogisticated air” (emphasis mine). That is, Kuhn confuses the descriptions of visual experiences with the actual experiences of observation – to the delight of Bruno Latour and the cultural relativists.

Finally, Kuhn’s notion that theories completely control observation is just as wrong as scientists’ belief that their experimental observations are free of theoretical influence and that their theories are independent of their values.

Despite these flaws, I think Kuhn was on to something. He was right, at least partly, about the indoctrination of scientists into a paradigm discouraging skepticism about their research program. What Wolfgang Lerche of CERN called “the Stanford propaganda machine” for string theory is a great example. Kuhn was especially right in describing science education as presenting science as a cumulative enterprise, relegating failed hypotheses to the footnotes. Einstein built on Newton in the sense that he added more explanations about the same phenomena; but in no way was Newton preserved within Einstein. Failing to see an Einsteinian revolution in any sense just seems akin to a proclamation of the infallibility not of science but of scientists. I was surprised to see this attitude in Steven Weinberg’s recent To Explain the World. Despite excellent and accessible coverage of the emergence of science, he presents a strictly cumulative model of science. While Weinberg only ever mentions Kuhn in footnotes, he seems to be denying that Kuhn was ever right about anything.

For example, in describing general relativity, Weinberg says in 1919 the Times of London reported that Newton had been shown to be wrong. Weinberg says, “This was a mistake. Newton’s theory can be regarded as an approximation to Einstein’s – one that becomes increasingly valid for objects moving at velocities much less than that of light. Not only does Einstein’s theory not disprove Newton’s, relativity explains why Newton’s theory works when it does work.”