
Extraordinary Popular Miscarriages of Science (part 1)

Posted by Bill Storage in History of Science, Uncategorized on January 18, 2024


I’ve been collecting examples of bad science. Many came from friends and scientists I’ve talked to. Bad science can cover several aspects of science depending on what one means by science. At least three very different things are called science now:

1. An approach or set of rules and methods used to understand and predict nature

2. A body of knowledge about nature and natural processes

3. An institution, culture or community of people, including academic, government and corporate professionals, who are involved, or are said to be involved, in 1. or 2. above

Many of my examples of bad science fall under the 3rd category and involve, or are dominated by, the academicians, government offices, and corporations. Below are a few of my favorites from the past century or so. I think many people tend to think that bad science happened in medieval times and that the modern western world is immune to that sort of thing. On the contrary, bad science may be on the rise. For the record, I don’t judge a theory bad merely because it was shown to be wrong, even if spectacularly wrong. Geocentricity was a good theory. Phlogiston (17th century theoretical substance believed to escape from matter during combustion), caloric theory (18th century theory of a weightless fluid that flows from hot matter to cold), and the luminiferous ether (17-19th century postulated medium for the propagation of light waves) were all good theories, though we now have robust evidence against them. All had substantial predictive power. All posited unobservable entities to explain phenomena. But predictive success alone cannot justify belief in unobservable entities. Creation science and astrology were always bad science.

To clarify the distinction between bad science and wrong theories, consider Trofim Lysenko. He was nominally a scientist. Some of his theories appear to be right. He wore the uniform, held the office, and published sciencey papers. But he did not behave scientifically (consistent with definition 1 above) when he ignored the boundless evidence and prior art about heredity. Wikipedia dubs him a pseudoscientist, despite his having some successful theories and making testable hypotheses. Pseudoscience, says Wikipedia, makes unfalsifiable claims. Lysenko’s bold claims were falsifiable, and they were falsified. Wikipedia talks as if the demarcation problem – knowing science from pseudoscience – is a closed case. Nah. Rather than tackle that matter of metaphysics and philosophy, I’ll offer that Lysenkoism, creation science, and astrology are all sciences, but they are bad science. While they all make some testable predictions, they also make a lot of vague ones, their interest in causation is puny, and their research agendas are scant.

Good science entails testable, falsifiable theories and bold predictions. Most philosophers of science, notably excluding Karl Popper, who thought that only withstanding falsification mattered, have held that making succinct, correct predictions makes a theory good, and that successful theories make for good science. Larry Laudan gave, in my view, a fine definition of a successful theory in his 1984 Philosophy of Science: A theory is successful provided it makes substantially more correct predictions, that it leads to efficacious interventions in the natural order, or that it passes a suitable battery of tests.

Concerns over positing unobservables open a debate on the question of just how observable are electrons, quarks, and the Higgs Field. Not here though. I am more interested in bad science (in the larger senses of science) than I am in wrong theories. Badness often stems not from seeking to explain and predict nature and failing out of refusal to read the evidence fairly, but from cloaking a non-scientific agenda in the trappings of science. I’m interested in what Kuhn, Feyerabend, and Lakatos dealt with – the non-scientific interests of academicians, government offices, and corporations and their impact on what gets studied and how it gets studied, how confirming evidence is sought and processed, how disconfirming evidence is processed, avoided, or dismissed, and whether Popperian falsifiability was ever on the table.

Recap of Kuhn, Feyerabend, and Lakatos

Thomas Kuhn claimed that normal (day-to-day lab-coat) science consisted of showing how nature can be fit into the existing theory. That is, normal science is decidedly unscientific. It is bad science, aimed at protecting the reigning paradigm from disconfirming evidence. On Kuhn’s view, your scientific education teaches you how to see things as your field requires them to be seen. He noted that medieval and renaissance astronomers never saw the supernovae that were seen in China. Europeans “knew” that the heavens were unchanging. Kuhn used the terms dogma and indoctrination to piss off scientists of his day. He thought that during scientific crises (Newton vs. Einstein being the exemplar) scientists clutched at new theories, often irrationally, and then vicious competition ended when scientific methods determined the winner of a new paradigm. Kuhn was, unknown to most of his social-science groupies, a firm believer that the scientific enterprise ultimately worked. Kuhn says normal science is bad science. He thought this was okay because crisis science reverted to good science, and in crisis, the paradigm was overthrown when the scientists got interested in philosophy of science. When Kuhn was all the rage in the early 1960s, radical sociologists of science, all at the time very left leaning, had their doubts that science could stay good under the influence of government and business. Recall worries about the military industrial complex. They thought that interest, whether economic or political, could keep science bad. I think history has sided with those sociologists; though today’s professional sociologists, now overwhelmingly employed by the US and state governments, are astonishingly silent on the matter. Granting, for sake of argument, that social science is science, its practitioners seem to be living proof that interest can dominate not only research agendas but what counts as evidence, along with the handling of evidence toward what becomes dogma in the paradigm.

Paul Feyerabend, though also no enemy of science, thought Kuhn stopped short of exposing the biggest problems with science. Feyerabend called science, referring to science as an institution, a threat to democracy. He called for “a separation of state and science just as there is a separation between state and religious institutions.” He thought that 1960s institutional science resembled more the church of Galileo’s day than it resembled Galileo. Feyerabend thought theories should be tested against each other, not merely against the world. He called institutional science a threat because it increasingly held complete control over what is deemed scientifically important for society. Historically, he observed, individuals, by voting with their attention and their dollars, have chosen what counts as being socially valuable. Feyerabend leaned rather far left. In my History of Science appointment at UC Berkeley I was often challenged for invoking him against bad-science environmentalism because Feyerabend wouldn’t have supported a right-winger. Such is the state of H of S at Berkeley, now subsumed by Science and Technology Studies, i.e., same social studies bullshit (it all ends in “Studies”), different pile. John Heilbron rest in peace.

Imre Lakatos had been imprisoned by the Nazis for revisionism. Through that experience he saw Kuhn’s assent of the relevant community as a valid criterion for establishing a new post-crisis paradigm as not much of a virtue. It sounded a bit too much like Nazis and risked becoming “mob psychology.” If the relevant community has excessive organizational or political power, it can put overpowering demands on individual scientists and force them to subordinate their ideas to the community (see String Theory’s attack on Lee Smolin below). Lakatos saw the quality of a science’s research agenda as a strong indicator of quality. Thin research agendas, like those of astrology and creation science, revealed bad science.

Selected Bad Science

Race Science and Eugenics

Eugenics is an all-time favorite, not just of mine. It is a poster child for evil-agenda science driven by a fascist. That seems enough knowledge of the matter for the average student of political science. But eugenics did not emerge from fascism and support for it was overwhelming in progressive circles, particularly in American universities and the liberal elite. Alfred Binet of IQ-test fame, H. G. Wells, Margaret Sanger, John Harvey Kellogg, George Bernard Shaw, Theodore Roosevelt, and apparently Oliver Wendell Holmes, based on his decision that compulsory sterilization was within a state’s rights, found eugenics attractive. Financial support for the eugenics movement included the Carnegie Foundation, Rockefeller Institute, and the State Department. Harvard endorsed it, as did Stanford’s first president, David S Jordan. Yale’s famed economist and social reformer Irving Fisher was a supporter. Most aspects of eugenics in the United States ended abruptly when we discovered that Hitler had embraced it and was using it to defend the extermination of Jews. Hitler’s 1933 Law for the Prevention of Hereditarily Defective Offspring borrowed from the model sterilization law drawn up by America’s Harry Laughlin. Eugenics was a classic case of advocates and activists, clueless of any sense of science, broadcasting that the science (the term “race science” exploded onto the scene as if it had always been a thing) had been settled. In an era where many Americans enjoy blaming the living – and some of the living enjoy accepting that blame – for the sins of our fathers, one wonders why these noble academic institutions have not come forth to offer recompense for their eugenics transgressions.

The War on Fat

In 1977 a Senate committee led by George McGovern published “Dietary Goals for the United States,” imploring us to eat less red meat, eggs, and dairy products. The U.S. Department of Agriculture (USDA) then issued its first dietary guidelines, which focused on cutting cholesterol and not only meat fat but fat from any source. The National Institutes of Health recommended that all Americans, including young kids, cut fat consumption. In 1980 the US government broadcast that eating less fat and cholesterol would reduce your risk of heart attack. Evidence then and ever since has not supported this edict. A low-fat diet was alleged to mitigate many metabolic risk factors and to be essential for achieving a healthy body weight. However, over the past 45 years, obesity in the US climbed dramatically while dietary fat levels fell. Europeans with higher fat diets, having the same genetic makeup, are far thinner. The science of low-fat diets and the tenets of related institutions like insurance, healthcare, and school lunches have seemed utterly immune to evidence. Word is finally trickling out. The NIH has not begged pardon.

The DDT Ban

Rachel Carson appeared before the Department of Commerce in 1963, asking for a “Pesticide Commission” to regulate DDT. Ten years later, Carson’s “Pesticide Commission” became the Environmental Protection Agency, which banned DDT in the US. The rest of the world followed, including Africa, which was bullied by European environmentalists and aid agencies to do so.

By 1960, DDT use had eliminated malaria from eleven countries. Crop production, land values, and personal wealth rose. In eight years of DDT use, Nepal’s malaria rate dropped from over two million to 2,500. Life expectancy rose from 28 to 42 years.

Malaria reemerged when DDT was banned. Since the ban, tens of millions of people have died from malaria. Following Rachel Carson’s Silent Spring narrative, environmentalists claimed that, with DDT, cancer deaths would have negated the malaria survival rates. No evidence supported this. It was fiction invented by Carson. The only type of cancer that increased during DDT use in the US was lung cancer, which correlated with cigarette use. But Carson instructed citizens and governments that DDT caused leukemia, liver disease, birth defects, premature births, and other chronic illnesses. If you “know” that DDT altered the structure of eggs, causing bird populations to dwindle, it is Carson’s doing.

Banning DDT didn’t save the birds, because DDT wasn’t the cause of US bird death as Carson reported. While bird populations had plunged prior to DDT’s first use, the bird death at the center of her impassioned plea never happened. We know this from bird count data and many subsequent studies. Carson, in her work at Fish and Wildlife Service and through her participation in Audubon bird counts, certainly knew that during US DDT use, the eagle population doubled, and robin, dove, and catbird counts increased by 500%. Carson lied like hell and we showered her with praise and money. Africans paid with their lives.

In 1969 the Environmental Defense Fund demanded a hearing on DDT. The 8-month investigation concluded DDT was not mutagenic or teratogenic. No cancer, no birth defects. It found no “deleterious effect on freshwater fish, estuarine organisms, wild birds or other wildlife.” Yet William Ruckelshaus, first director of the EPA, who never read the transcript, chose to ban DDT anyway. Joni Mitchell was thrilled. DDT was replaced by more harmful pesticides. NPR, the NY Times, and the Puget Sound Institute still report a “preponderance of evidence” of DDT’s dangers.

When challenged with the claim that DDT never killed kids, the Rachel Carson Landmark Alliance responded in 2017 that indeed it had. A two-year-old drank an ounce of 5% DDT in a solution of kerosene and died. Now there’s scientific integrity.

Vilification of Cannabis

I got this one from my dentist; I had never considered it before. White-collar, or rather, work-from-home, California potheads think this problem has been overcome. Far from it. Cannabis use violates federal law. Republicans are too stupid to repeal it, and Democrats are too afraid of looking like hippies. According to Quest Diagnostics, in 2020, 4.4% of workers failed their employers’ drug tests. Blue-collar Americans, particularly those who might be a sub-sub-subcontractor on a government project, are subject to drug tests. Testing positive for weed can cost you your job. So instead of partying on pot, the shop floor consumes immense amounts of alcohol, increasing its risk of accidents at work and in the car, harming its health, and raising its risk of hard drug use. In the admittedly small sense in which the concept of a gateway drug is valid, marijuana is probably not one and alcohol almost certainly is. Racism, big pharma lobbyists, and social control are typically blamed for keeping cannabis illegal. Governments may also have concluded that tolerating weed at the state level while maintaining federal prohibition is an optimal tax revenue strategy. Cannabis tolerance at state level appears to have reduced opioid use and opioid related ER admissions.

Stoners who scoff at anti-cannabis propaganda like Reefer Madness might be unaware that a strong correlation between psychosis and cannabis use has been known for decades. But inferring causation from that correlation was always either highly insincere (huh huh) or very bad science. Recent analysis of study participants’ genomes showed that those with the strongest genetic profile for schizophrenia were also more likely to use cannabis in large amounts. So unless you follow Lysenko, who thought acquired traits were passed to offspring, pot is unlikely to cause psychosis. When A and B correlate, either A causes B, B causes A, or C causes both, as appears to be the case with schizophrenic potheads.

To be continued.

Fashionable Pessimism: Confidence Gap Revisited

Posted by Bill Storage in Uncategorized on August 19, 2021

Most of us hold a level of confidence about our factual knowledge and predictions that doesn’t match our abilities. That’s what I learned, summarized in a recent post, from running a website called The Confidence Gap for a year.

When I published that post, someone linked to it from Hacker News, causing 9000 people to read the post, 3000 of whom took the trivia quiz(es) and assigned confidence levels to their true/false responses. Presumably, many of those people learned from the post that each group of ten questions in the survey had one question about the environment or social issues designed to show an extra level of domain-specific overconfidence. Presumably, those readers are highly educated. I would have thought this would have changed the gap between accuracy and confidence in those who used the site before and after that post. But the results from visits after the blog post and Hacker News coverage, even in those categories, were almost identical to those of the earlier group.

Hacker News users pointed out several questions where the Confidence Gap answers were obviously wrong. Stop signs actually do have eight sides, for example. Readers also reported questions with typos that could have confused the respondents. For example “Feetwood Mac” did not perform “Black Magic Woman” prior to Santana, but Fleetwood Mac did. The site called the statement with “Feetwood” true; it was a typo, not a trick.

A Hacker News reader challenged me on the naïve averaging of confidence estimates, saying he assumed the whole paper was similarly riddled with math errors. The point is arguable, but not a math error. Philip Tetlock, Tony Cox, Gerd Gigerenzer, Sarah Lichtenstein, Baruch Fischhoff, Paul Slovic and other heavyweights of the field used the same approach I used. Thank your university system for teaching that interpretation of probability is a clear-cut matter of math as opposed to a vexing issue in analytic philosophy (see The Trouble with Probability and The Trouble with Doomsday).

Those criticisms acknowledged, I deleted the response data from the questions where Hacker News reported errors and typos. This changed none of the results by more than a percentage point. And, as noted above, prior knowledge of “trick” questions had almost no effect on accuracy or overconfidence.

On the point about the media’s impact on people’s confidence about fact claims that involve environmental issues, consider data from the 2nd batch of responses (post blog post) to this question:

According to the United Nations the rate of world deforestation increased between 1995 and 2015

This statement about a claim made by the UN is false, and the UN provides a great deal of evidence on the topic. The average confidence level given by respondents was 71%. The fraction of people answering correctly was 29%. The average confidence value specified by those who answered incorrectly was 69%. Independent of different interpretations of probability and confidence, this seems a clear sign of overconfidence about deforestation facts.

Compare this to the responses given for the question of whether Oregon borders California. 88% of respondents answered correctly and their average confidence specified was 88%.

Another example:

According to OurWorldInData.org, the average number of years of schooling per resident was higher in S. Korea than in USA in 2010

The statement is false. Average confidence was 68%. Average correctness was 20%.

For all environmental and media-heavy social questions answered by the 2nd group of respondents (who presumably had some clue that people tend to be overconfident about such issues) the average correctness was 46% and the average confidence was 67%. This is a startling result; the proverbial dart throwing chimps would score vastly higher on environmental issues (50% by chimps, 20% on the schooling question and 46% for all “trick” questions by humans) than American respondents who were specifically warned that environmental questions were designed to demonstrate that people think the world is more screwed up than it is. Is this a sign of deep pessimism about the environment and society?

For comparison, average correctness and confidence on all World War II questions were both 65%. For movies, 70% correct, 71% confidence. For science, 75% and 77%. Other categories were similar, with some showing a slightly higher overconfidence. Most notably, sports mean accuracy was 59% with mean confidence of 68%.

Richard Feynman famously said he preferred not knowing to holding as certain the answers that might be wrong (No Ordinary Genius). Freeman Dyson famously said, “it is better to be wrong than to be vague” (The Scientist As Rebel). For the hyper-educated class, spoon-fed with facts and too educated to grasp the obvious, it seems the preferred system might now be phrased “better wrong than optimistic.”

. . . _ _ _ . . .

The man who despairs when others hope is admired as a sage. – John Stuart Mill. Speech on Perfectibility, 1828

Optimism is cowardice. Oswald Spengler, Man and Technics, 1931

The U.S. life expectancy will drop to 42 years by 1980 due to cancer epidemics. Paul Ehrlich. Ramparts, 1969

It is the long ascent of the past that gives the lie to our despair. HG Wells. The Discovery of the Future, 1902

Smart Folk Often Full of Crap, Study Finds

Posted by Bill Storage in Uncategorized on April 22, 2021

For most of us, there is a large gap between what we know and what we think we know. We hold a level of confidence about our factual knowledge and predictions that doesn’t match our abilities. Since our personal decisions are really predictions about the future based on our available present knowledge, it makes sense to work toward adjusting our confidence to match our skill.

Last year I measured the knowledge-confidence gap of 3500 participants in a trivia game with a twist. For each True/False trivia question the respondents specified their level of confidence (between 50 and 100% inclusive) with each answer. The questions, presented in banks of 10, covered many topics and ranged from easy (American stop signs have 8 sides) to expert (Stockholm is further west than Vienna).

I ran this experiment on a website and mobile app using 1500 True/False questions, about half of which belonged to specific categories including music, art, current events, World War II, sports, movies and science. Visitors could choose between the category “Various” or from a specific category. I asked for personal information such as age, gender, current profession, title, and education. About 20% of site visitors gave most of that information. 30% provided their professions.

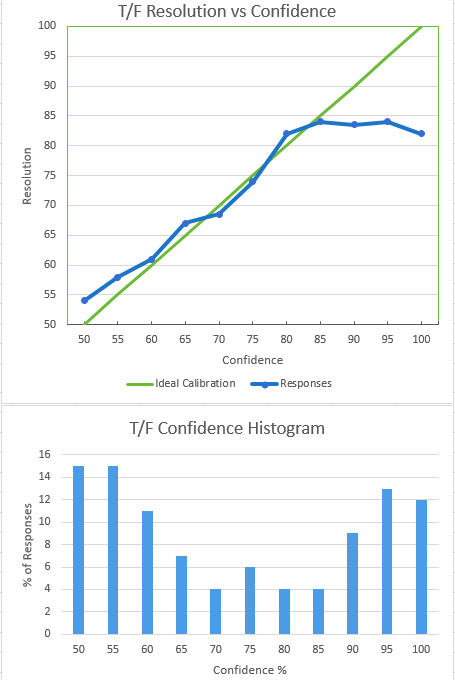

Participants were told that the point of the game was not to get the questions right but to have an appropriate level of confidence. For example, if your average confidence value is 75%, 75% of your answers should be correct. If your confidence and accuracy match, you are said to be calibrated. Otherwise you are either overconfident or underconfident. Overconfidence – sometimes extreme – is more common, though a small percentage are significantly underconfident.
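As a rough sketch of the calibration idea described above, the gap can be computed as mean confidence minus the fraction of correct answers. The function name and the sample responses below are made up for illustration; this is not the site's actual scoring code.

```python
def calibration_gap(responses):
    """Return (mean_confidence, accuracy, gap) for (confidence, was_correct) pairs.

    confidence is a fraction between 0.5 and 1.0; was_correct is a bool.
    A positive gap means overconfidence, a negative gap underconfidence.
    """
    if not responses:
        raise ValueError("need at least one response")
    mean_confidence = sum(c for c, _ in responses) / len(responses)
    accuracy = sum(1 for _, ok in responses if ok) / len(responses)
    return mean_confidence, accuracy, mean_confidence - accuracy


# Hypothetical bank of ten answers, invented for illustration only.
sample = [(0.9, True), (0.8, True), (0.7, False), (0.6, True), (1.0, True),
          (0.75, False), (0.75, True), (0.5, False), (0.95, True), (0.55, False)]

mean_conf, acc, gap = calibration_gap(sample)
print(f"confidence {mean_conf:.0%}, accuracy {acc:.0%}, gap {gap:+.0%}")
```

A respondent with these answers would count as overconfident, since stated confidence runs ahead of the share of correct answers.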

Overconfidence in group decisions is particularly troubling. Groupthink – collective overconfidence and rationalized cohesiveness – is a well known example. A more common, more subtle, and often more dangerous case exists when social effects and the perceived superiority of judgment of a single overconfident participant can lead to unconscious suppression of valid input from a majority of team members. The latter, for example, explains the Challenger launch decision far more than classic groupthink does, though groupthink is often cited as the cause.

I designed the trivia quiz system so that each group of ten questions under the Various label included one that dealt with a subject about which people are particularly passionate – environmental or social justice issues. I got this idea from Hans Rosling’s book, Factfulness. As expected, respondents were both overwhelmingly wrong and acutely overconfident about facts tied to emotional issues, e.g., net change in Amazon rainforest area in last five years.

I encouraged people to take a few passes through the Various category before moving on to the specialty categories. Assuming that the first specialty category that respondents chose was their favorite, I found them to be generally more overconfident about topics they presumably knew best. For example, those that first selected Music and then Art showed both higher resolution (correctness) and higher overconfidence in Music than they did in Art.

Mean overconfidence for all first-chosen specialties was 12%. Mean overconfidence for second-chosen categories was 9%. One interpretation is that people are more overconfident about that which they know best. Respondents’ overconfidence decreased progressively as they answered more questions. In that sense the system served as confidence calibration training. Relative overconfidence in the first specialty category chosen was present even when the effect of improved calibration was screened off, however.

For the first 10 questions, mean overconfidence in the Various category was 16% (16% for males, 14% for females). Mean overconfidence for the nine questions in each group excepting the “passion” question was 13%.

Overconfidence seemed to be constant across professions, but increased about 1.5% with each level of college education. PhDs are 4.2% more overconfident than high school grads. I’ll leave that to sociologists of education to interpret. A notable exception was a group of analysts from a research lab who were all within a point or two of perfect calibration even on their first 10 questions. Men were slightly more overconfident than women. Underconfidence (more than 5% underconfident) was absent in men and present in 6% of the small group identifying as women (98 total).

The nature of overconfidence is seen in the plot of resolution (response correctness) vs. confidence. Our confidence roughly matches our accuracy up to the point where confidence is moderately high, around 85%. After this, increased confidence occurs with no increase in accuracy. At the 100% confidence level, respondents were, on average, less correct than they were at 95% confidence. Much of that effect stemmed from the one “trick” question in each group of 10; people tend to be confident but wrong about hot topics with high media coverage.
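A plot of correctness vs. confidence like the one described above can be built by grouping answers into confidence bins and taking the fraction correct in each. The sketch below assumes confidence is stored as an integer percent and uses 5-point bins; both are my assumptions for illustration, and the sample data is invented.

```python
from collections import defaultdict


def calibration_curve(responses, bin_width=5):
    """Bin (confidence_percent, was_correct) pairs by stated confidence.

    Returns a sorted list of (bin_start, n_answers, fraction_correct).
    """
    bins = defaultdict(list)
    for confidence_percent, was_correct in responses:
        # Snap each confidence value down to the lower edge of its bin.
        bin_start = (confidence_percent // bin_width) * bin_width
        bins[bin_start].append(was_correct)
    return [(start, len(hits), sum(hits) / len(hits))
            for start, hits in sorted(bins.items())]


# Hypothetical responses, invented for illustration only.
sample = [(50, True), (50, False), (75, True), (75, True), (75, False),
          (77, True), (95, True), (95, True), (100, True), (100, False)]

curve = calibration_curve(sample)
for start, n, fraction_correct in curve:
    print(f"{start:3d}%  n={n}  correct={fraction_correct:.0%}")
```

For a calibrated group, the fraction correct in each bin would track the bin's confidence level; a curve that flattens or drops at the high end is the overconfidence pattern described in the text.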

The distribution of confidence values expressed by participants was nominally bimodal. People expressed very high or very low confidence about the accuracy of their answers. The slight bump in confidence at 75% is likely an artifact of the test methodology. The default value of the confidence slider (website user interface element) was 75%. On clicking the Submit button, users were warned if most of their responses specified the default value, but an acquiescence effect appears to have been present anyway. In Superforecasting Philip Tetlock observed that many people seem to have a “three settings” (yes, no, maybe) mindset about matters of probability. That could also explain the slight peak at 75%.

I’ve been using a similar approach to confidence calibration in group decision settings for the past three decades. I learned it from a DoD publication by Sarah Lichtenstein and Baruch Fischhoff while working on the Midgetman Small Intercontinental Ballistic Missile program in the mid 1980s. Doug Hubbard teaches a similar approach in his book The Failure of Risk Management. In my experience with diverse groups contributing to risk analysis, where group decisions about likelihood of uncertain events are needed, an hour of training using similar tools yields impressive improvements in calibration as measured above.

Risk Neutrality and Corporate Risk Frameworks

Posted by Bill Storage in Uncategorized on October 27, 2020

Wikipedia describes risk-neutrality in these terms: “A risk neutral party’s decisions are not affected by the degree of uncertainty in a set of outcomes, so a risk-neutral party is indifferent between choices with equal expected payoffs even if one choice is riskier”

While a useful definition, it doesn’t really help us get to the bottom of things since we don’t all remotely agree on what “riskier” means. Sometimes, by “risk,” we mean an unwanted event: “falling asleep at the wheel is one of the biggest risks of nighttime driving.” Sometimes we equate “risk” with the probability of the unwanted event: “the risk of losing in roulette is 35 out of 36.” Sometimes we mean the statistical expectation. And so on.

When the term “risk” is used in technical discussions, most people understand it to involve some combination of the likelihood (probability) and cost (loss value) of an unwanted event.

We can compare both the likelihoods and the costs of different risks, but deciding which is “riskier” using a one-dimensional range (i.e., higher vs. lower) requires a scalar calculus of risk. If risk is a combination of probability and severity of an unwanted outcome, riskier might equate to a larger value of the arithmetic product of the relevant probability (a dimensionless number between zero and one) and severity, measured in dollars.

But defining risk as such a scalar (area under the curve, therefore one dimensional) value is a big step, one that most analyses of human behavior suggest is not an accurate representation of how we perceive risk. It implies risk-neutrality.

Most people agree, as Wikipedia states, that a risk-neutral party’s decisions are not affected by the degree of uncertainty in a set of outcomes. On that view, a risk-neutral party is indifferent between all choices having equal expected payoffs.

Under this definition, if risk-neutral, you would have no basis for preferring any of the following four choices over another:

1) A 50% chance of winning $100.00

2) An unconditional award of $50.

3) A 0.01% chance of winning $500,000.00

4) A 90% chance of winning $55.56.

If risk-averse, you’d prefer choices 2 or 4. If risk-seeking, you’d prefer 1 or 3.
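A quick check of the four choices above shows that their expected payoffs agree to within a cent while their spread differs enormously. The standard deviation used here is only one possible stand-in for “riskier,” chosen by me for illustration; the text does not commit to any particular measure.

```python
import math

# (probability, payoff in dollars) for the four choices listed above.
choices = {
    1: (0.50, 100.00),
    2: (1.00, 50.00),
    3: (0.0001, 500_000.00),
    4: (0.90, 55.56),
}


def expected_value(p, payoff):
    """Probability-weighted payoff of a win-or-nothing gamble."""
    return p * payoff


def std_dev(p, payoff):
    """Standard deviation of a gamble paying `payoff` with probability p, else 0."""
    return payoff * math.sqrt(p * (1.0 - p))


for label, (p, payoff) in choices.items():
    print(f"choice {label}: EV ${expected_value(p, payoff):,.2f}, "
          f"std dev ${std_dev(p, payoff):,.2f}")
```

On this measure the ordering from least to most spread is 2, 4, 1, 3, which matches the split between the choices a risk-averse party and a risk-seeking party would favor.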

Now let’s imagine, instead of potential winnings, an assortment of possible unwanted events, termed hazards in engineering, for which we know, or believe we know, the probability numbers. One example would be to simply turn the above gains into losses:

1) A 50% chance of losing $100.00

2) An unconditional payment of $50.

3) A 0.01% chance of losing $500,000.00

4) A 90% chance of losing $55.56.

In this example, there are four different hazards. Many argue that rational analysis of risk entails quantification of hazard severities, independent of whether their probabilities are quantified. Above we have four risks, all having the same $50 expected value (cost), labeled 1 through 4. Whether those four risks can be considered equal depends on whether you are risk-neutral.

If forced to accept one of the four risks, a risk-neutral person would be indifferent to the choice; a risk seeker might choose risk 3, etc. Banks are often found to be risk-averse. That is, they will pay more to prevent risk 3 than to prevent risk 4, even though they have the same expected value. Viewed differently, banks often pay much more to prevent one occurrence of hazard 3 (cost = $500,000) than to prevent 9000 occurrences of hazard 4 (cost = $500,000).
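One way to sketch why a risk-averse party would pay more to prevent hazard 3 than hazard 4 is to weight losses by a convex disutility and ask what sure loss feels equally bad. The power-law form and the exponent k below are assumptions of mine for illustration, not a model taken from banking practice or from the text.

```python
def certainty_equivalent(p, loss, k=1.5):
    """Sure loss judged as bad as a p chance of `loss` under disutility loss**k.

    k is a hypothetical aversion parameter: k = 1 is risk-neutral and
    reproduces the expected loss; k > 1 penalizes large losses more heavily.
    """
    return (p * loss ** k) ** (1.0 / k)


hazard_3 = (0.0001, 500_000.00)   # (probability, loss in dollars)
hazard_4 = (0.90, 55.56)

# Total cost of 9000 occurrences of hazard 4, for comparison with one hazard 3.
total_hazard_4 = 9000 * hazard_4[1]
print(f"9000 occurrences of hazard 4 cost ${total_hazard_4:,.2f}")

for name, (p, loss) in (("hazard 3", hazard_3), ("hazard 4", hazard_4)):
    neutral = certainty_equivalent(p, loss, k=1.0)
    averse = certainty_equivalent(p, loss)
    print(f"{name}: expected loss ${neutral:,.2f}, "
          f"certainty equivalent at k=1.5 ${averse:,.2f}")
```

With k = 1 both hazards come out at about $50. With k above 1 the rare, large loss of hazard 3 gets a much higher certainty equivalent than hazard 4, which is the risk-averse behavior described above.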

Businesses compare risks to decide whether to reduce their likelihood, to buy insurance, or to take other actions. They often use a heat-map approach (sometimes called risk registers) to visualize risks. Heat maps plot probability vs severity and view any particular risk’s riskiness as the area of the rectangle formed by the axes and the point on the map representing that risk. Lines of constant risk therefore look like y = 1 / x. To be precise, they take the form of y = a/x where a represents a constant number of dollars called the expected value (or mathematical expectation or first moment) depending on area of study.

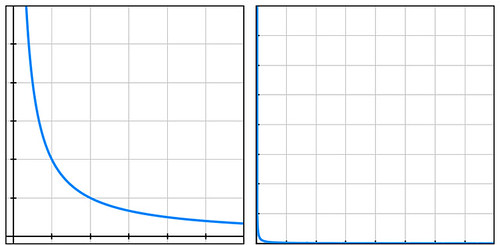

By plotting the four probability-cost vector values (coordinates) of the above four risks, we see that they all fall on the same line of constant risk. A sample curve of this form, representing a line of constant risk, appears below on the left.

In my example above, the four points (50% chance of losing $100, etc.) have a large range of probabilities. Plotting these actual values on a simple grid isn’t very informative because the data points are far from the part of the plotted curve where the bend is visible (plot below on the right).

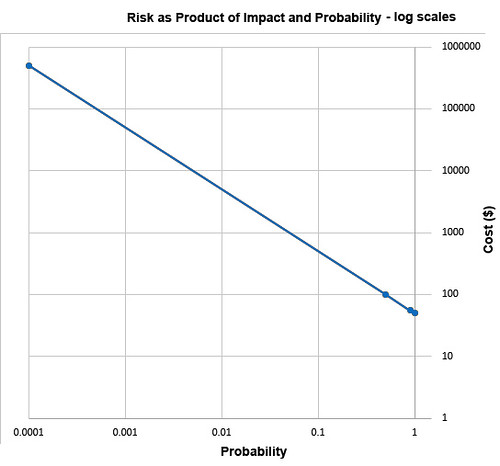

Students of high-school algebra know the fix for the problem of graphing data of this sort (monomials) is to use log paper. By plotting equations of the form described above using logarithmic scales for both axes, we get a straight line, having data points that are visually compressed, thereby taming the large range of the data, as below.
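A numeric check of the log-paper claim, as a sketch: taking logarithms of y = a/x gives log y = log a - log x, a straight line with slope -1. The code below applies this to the four example risks; the helper name `slope` is illustrative.

```python
import math

# (probability, loss in dollars) for the four example risks.
points = [(0.50, 100.00), (1.00, 50.00), (0.0001, 500_000.00), (0.90, 55.56)]

# Take base-10 logs of both coordinates, as log paper does graphically.
log_points = sorted((math.log10(p), math.log10(loss)) for p, loss in points)


def slope(first, second):
    """Slope of the segment joining two (x, y) points."""
    (x1, y1), (x2, y2) = first, second
    return (y2 - y1) / (x2 - x1)


# Slopes between neighboring points; each is close to -1. They are not
# exactly -1 only because $55.56 is a rounded value.
slopes = [slope(a, b) for a, b in zip(log_points, log_points[1:])]

for s in slopes:
    print(f"slope on log-log axes: {s:.4f}")
```

On log axes the probabilities, which span four orders of magnitude, occupy a range of only 4 units, which is the taming of the range described above.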

The risk frameworks used in business take a different approach. Instead of plotting actual probability values and actual costs, they plot scores, say from one to ten. Their reason for doing this is more likely to convert an opinion into a numerical value than to cluster data for easy visualization. Nevertheless, plotting scores – on linear, not logarithmic, scales – inadvertently clusters data, though the data might have lost something in the translation to scores in the range of 1 to 10. In heat maps, this compression of data has the undesirable psychological effect of implying much smaller ranges for the relevant probability values and costs of the risks under study.

A rich example of this effect is seen in the 2002 PmBok (Project Management Body of Knowledge) published by the Project Management Institute. It assigns a score (which it curiously calls a rank) of 10 for probability values in the range of 0.5, a score of 9 for p=0.3, and a score of 8 for p=0.15. It should be obvious to most having a background in quantified risk that differentiating failure probabilities of .5, .3, and .15 is pointless and indicative of bogus precision, whether the probability is drawn from observed frequencies or from subjectivist/Bayesian-belief methods.

The methodological problem described above exists in frameworks that are implicitly risk-neutral. The real problem with the implicit risk-neutrality of risk frameworks is that very few of us – individuals or corporations – are risk-neutral. And no framework is right to tell us that we should be. Saying that it is somehow rational to be risk-neutral pushes the definition of rationality too far.

As proud king of a small distant planet of 10 million souls, you face an approaching comet that, on impact, will kill one million (10%) in your otherwise peaceful world. Your scientists and engineers rush to build a comet-killer nuclear rocket. The untested device has a 90% chance of destroying the comet but a 10% chance of exploding on launch thereby killing everyone on your planet. Do you launch the comet-killer, knowing that a possible outcome is total extinction? Or do you sit by and watch one million die from a preventable disaster? Your risk managers see two choices of equal riskiness: 100% chance of losing one million and a 10% chance of losing 10 million. The expected value is one million lives in both cases. But in that 10% chance of losing 10 million, there is no second chance. It’s an existential risk.
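The risk managers' arithmetic can be sketched as follows. The function names are illustrative; the figures are those of the thought experiment. The point of the sketch is that expected value is identical for the two choices while the worst case is not.

```python
POPULATION = 10_000_000

# Each choice is a list of (probability, deaths) outcomes.
do_nothing = [(1.0, 1_000_000)]
launch = [(0.9, 0), (0.1, POPULATION)]


def expected_deaths(outcomes):
    """Probability-weighted sum of deaths over all outcomes."""
    return sum(p * deaths for p, deaths in outcomes)


def worst_case(outcomes):
    """Largest loss among the possible outcomes."""
    return max(deaths for _, deaths in outcomes)


for name, outcomes in (("do nothing", do_nothing), ("launch", launch)):
    print(name, round(expected_deaths(outcomes)), worst_case(outcomes))
```

A framework that ranks only by expected deaths cannot distinguish the two rows; the worst-case column is where extinction shows up.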

If these two choices seem somehow different, you are not risk-neutral. If you’re tempted to leave problems like this in the capable hands of ethicists, good for you. But unaware boards of directors have left analogous dilemmas in the incapable hands of simplistic and simple-minded risk frameworks.

The risk-neutrality embedded in risk frameworks is a subtle and pernicious case of Hume’s Guillotine – an inference from “is” to “ought” concealed within a fact-heavy argument. No amount of data, whether measured frequencies or subjective probability estimates, whether historical expenses or projected costs, even if recorded as PmBok’s scores and ranks, can justify risk-neutrality to parties who are not risk-neutral. So why is it embedded in the frameworks our leading companies pay good money for?

The Dose Makes the Poison

Posted by Bill Storage in Uncategorized on October 19, 2020

Toxicity is binary in California. Or so says its governor and most of its residents.

Governor Newsom, who believes in science, recently signed legislation making California the first state to ban 24 toxic chemicals in cosmetics.

The governor’s office states “AB 2762 bans 24 toxic chemicals in cosmetics, which are linked to negative long-term health impacts especially for women and children.”

The “which” in that statement is a nonrestrictive pronoun, and the comma preceding it makes the meaning clear. The sentence says that all toxic chemicals are linked to health impacts and that AB 2762 bans 24 of them – as opposed to saying 24 chemicals that are linked to health effects are banned. One need not be a grammarian or George Orwell to get the drift.

California continues down the chemophobic path, established in the 1970s, of viewing all toxicity through the beloved linear no-threshold lens. That lens has served gullible Californians well since 1974, when the Sierra Club, which had until then supported nuclear power as “one of the chief long-term hopes for conservation,” teamed up with the likes of Gov. Jerry Brown (1975-83, 2011-19) and William Newsom – Gavin’s dad, investment manager for Getty Oil – to scare the crap out of science-illiterate Californians about nuclear power.

That fear-mongering enlisted Ralph Nader, Paul Ehrlich and other leading Malthusians, rock stars, oil millionaires and overnight-converted environmentalists. It taught that nuclear plants could explode like atom bombs, and that anything connected to nuclear power was toxic – in any dose. At the same time Governor Brown, whose father had deep oil ties, found that new fossil fuel plants could be built “without causing environmental damage.” The Sierra Club agreed, and secretly took barrels of cash from fossil fuel companies for the next four decades – $25M in 2007 from subsidiaries of, and people connected to, Chesapeake Energy.

What worked for nuclear also works for chemicals. “Toxic chemicals have no place in products that are marketed for our faces and our bodies,” said First Partner Jennifer Siebel Newsom in response to the recent cosmetics ruling. Jennifer may be unaware that the total amount of phthalates in the banned zipper tabs would yield very low exposure indeed.

Chemicals cause cancer, especially in California, where you cannot enter a parking garage, nursery, or Starbucks without reading a notice that the place can “expose you to chemicals known to the State of California to cause birth defects.” California’s litigator-lobbied legislators authored Proposition 65 in a way that encourages citizens to rat on violators, the “citizen enforcers” receiving 25% of any penalties assessed by the court. The proposition led chemophobes to understand that anything “linked to cancer” causes cancer. It exaggerates theoretical cancer risks, stymying the ability of the science-ignorant educated class to make reasonable choices about actual risks like measles and fungus.

California’s linear no-threshold conception of chemical carcinogens actually started in 1962 with Rachel Carson’s Silent Spring, the book that stopped DDT use, saving all the birds, with the minor side effect of letting millions of Africans die of malaria who would have survived (1, 2, 3) had DDT use continued.

But ending DDT didn’t save the birds, because DDT wasn’t the cause of US bird death as Carson reported, because the bird death at the center of her impassioned plea never happened. This has been shown by many subsequent studies; and Carson, in her work at Fish and Wildlife Service and through her participation in Audubon bird counts, certainly had access to data showing that the eagle population doubled, and robin, catbird, and dove counts had increased by 500% between the time DDT was introduced and her eloquent, passionate telling of the demise of the days that once “throbbed with the dawn chorus of robins, catbirds, and doves.”

Carson also said that increasing numbers of children were suffering from leukemia, birth defects and cancer, and of “unexplained deaths,” and that “women were increasingly unfertile.” Carson was wrong about increasing rates of these human maladies, and she lied about the bird populations. Light on science, Carson was heavy on influence: “Many real communities have already suffered.”

In 1969 the Environmental Defense Fund demanded a hearing on DDT. The hearing lasted eight months, and the examiner’s verdict concluded DDT was not mutagenic or teratogenic. No cancer, no birth defects. It found no “deleterious effect on freshwater fish, estuarine organisms, wild birds or other wildlife.”

William Ruckelshaus, first director of the EPA, didn’t attend the hearings or read the transcript. Pandering to the mob, he chose to ban DDT in the US anyway. It was replaced by more harmful pesticides in the US and the rest of the world. In praising Ruckelshaus, who died last year, NPR, the NY Times and the Puget Sound Institute described his having a “preponderance of evidence” of DDT’s damage, never mentioning the verdict of that hearing.

When Al Gore took up the cause of climate, he heaped praise on Carson, calling her book “thoroughly researched.” Al’s research on Carson seems of equal depth to Carson’s research on birds and cancer. But his passion and unintended harm have certainly exceeded hers. A civilization relying on the low-energy-density renewables Gore advocates will consume somewhere between 100 and 1000 times more space for food and energy than we consume at present.

California’s fallacious appeal to naturalism regarding chemicals also echoes Carson’s, and that of her mentor, Wilhelm Hueper, who dedicated himself to the idea that cancer stemmed from synthetic chemicals. This is still overwhelmingly the sentiment of Californians, despite the fact that the smoking-tar-cancer link now seems a bit of a fluke. That is, we expected the link between other “carcinogens” and cancer to be as clear as the link between smoking and cancer. It is not remotely. As George Johnson, author of The Cancer Chronicles, wrote, “as epidemiology marches on, the link between cancer and carcinogen seems ever fuzzier” (re Tomasetti on somatic mutations). Carson’s mentor Hueper, incidentally, always denied that smoking caused cancer, insisting toxic chemicals released by industry caused lung cancer.

This brings us back to the linear no-threshold concept. If a thing kills mice in high doses, then any dose to humans is harmful – in California. And that’s accepting that what happens in mice happens in humans, but mice lie and monkeys exaggerate. Outside California, most people are at least aware of certain hormetic effects (U-shaped dose-response curve). Small amounts of Vitamin C prevent scurvy; large amounts cause nephrolithiasis. Small amounts of penicillin promote bacteria growth; large amounts kill them. There is even evidence of biopositive effects from low-dose radiation, suggesting that 6000 millirems a year might be best for your health. The current lower-than-baseline levels of cancers in 10,000 residents of Taiwan accidentally exposed to radiation-contaminated steel, in doses ranging from 13 to 160 mSv/yr for ten years starting in 1982, is a fascinating case.

Radiation aside, perpetuating a linear no-threshold conception of toxicity in the science-illiterate electorate for political reasons is deplorable, as is the educational system that produces degreed adults who are utterly science-illiterate – but “believe in science” and expect their government to dispense it responsibly. The Renaissance physician Paracelsus knew better half a millennium ago when he suggested that substances poisonous in large doses may be curative in small ones, writing that “the dose makes the poison.”

To demonstrate chemophobia in 2003, Penn Jillette and an assistant effortlessly convinced people in a beach community, one after another, to sign a petition to ban dihydrogen monoxide (H2O). Water is of course toxic in high doses, causing hyponatremia, seizures and brain damage. But I don’t think Paracelsus would have signed the petition.

The Covid Megatilt

Posted by Bill Storage in Uncategorized on April 3, 2020

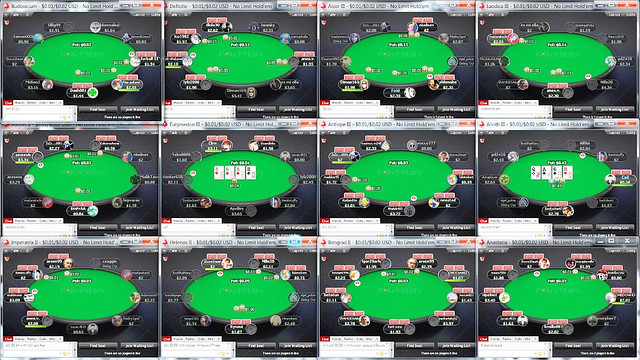

Playing poker online is far more addictive than gambling in a casino. Online poker, and other online gambling that involves a lot of skill, is engineered for addiction. Online poker allows multiple simultaneous tables. Laptops, tablets, and mobile phones provide faster play than in casinos. Setup time, for an efficient addict, can be seconds per game. Better still, you can rapidly switch between different online games to get just enough variety to eliminate any opportunity for boredom that has not been engineered out of the gaming experience. Completing a hand of Texas Holdem in 45 seconds online increases your chances of fast wins, fast losses, and addiction.

Tilt is what poker players call it when a particular run of bad luck, an opponent’s skill, or that same opponent’s obnoxious communications put you into a mental state where you’re playing emotionally and not rationally. Anger, disgust, frustration and distress are precipitated by bad beats, bluffs gone awry, a run of dead cards, losing to a lower ranked opponent, fatigue, or letting the opponent’s offensive demeanor get under your skin.

Tilt is so important to online poker that many products and commitment devices have emerged to deal with it. Tilt Breaker provides services like monitoring your performance to detect fatigue and automated stop-loss protection that restricts betting or table count after a run of losses.

A few years back, some friends and I demonstrated biometric tilt detection using inexpensive heart rate sensors. We used machine learning with principal dynamic modes (PDM) analysis running in a mobile app to predict sympathetic (stress-inducing, cortisol, epinephrine) and parasympathetic (relaxation, oxytocin) nervous system activity. We then differentiated mental and physical stress using the mobile phone’s accelerometer and location functions. We could ring an alarm to force a player to face being at risk of tilt or ragequit, even if he was ignoring the obvious physical cues. Maybe it’s time to repurpose this technology.

In past crises, the flow of bad news and peer communications were limited by technology. You could not scroll through radio programs or scan through TV shows. You could click between the three news stations, and then you were stuck. Now you can consume all of what could be home work and family time with up to the minute Covid death tolls while blasting your former friends on Twitter and Facebook for their appalling politicization of the crisis.

You yourself are of course innocent of that sort of politicizing. As a seasoned poker player, you know that the more you let emotions take control of your game, the farther your judgments will stray from rational ones.

Still yet, what kind of utter moron could think that the whole response to Covid is a media hoax? Or that none of it is.

The P Word

Posted by Bill Storage in Philosophy of Science, Uncategorized on June 19, 2016

Philosophy can get you into trouble.

I don’t get many responses to blog posts; and for some reason, most of those I get come as email. A good number of those I have received fall into two categories – proclamations and condemnations of philosophy.

The former consist of a final word offered on a matter that I wrote about having two sides and warranting some investigation. The respondents, whose signatures always include a three-letter suffix, set me straight, apparently discounting the possibility of an opposing PhD. Regarding argumentum ad verecundiam, John Locke’s 1689 Essay Concerning Human Understanding is apparently passé in the era where nonscientists feel no shame for their science illiteracy and “my scientist can beat up your scientist.” For one blog post where I questioned whether fault tree analysis was, as commonly claimed, a deductive process, I received two emails in perfect opposition, both suitably credentialed but unimpressively defended.

More surprising is hostility to endorsement of philosophy in general or philosophy of science (as in last post). It seems that for most scientists, engineers and Silicon Valley tech folk, “philosophy” conjures up guys in wool sportscoats with elbow patches wondering what to doubt next or French neoliberals congratulating themselves on having simultaneously confuted Freud, Marx, Mao, Hamilton, Rawls and Cato the Elder.

When I invoke philosophy here I’m talking about how to think well, not how to live right. And philosophy of science is a thing (hint: Google); I didn’t make it up. Philosophy of science is not about ethics. It has to do with the fact that most of us agree that science yields useful knowledge, but we don’t all agree about what makes good scientific thinking. I.e., what counts as evidence, what truth and proof mean, and being honest about what questions science can’t answer.

Philosophy is not, as some still maintain, a framework or ground on which science rests. The failure of logical positivism in the 1960s ended that notion. But the failure of positivism did not render science immune to philosophy. Willard Van Orman Quine is known for having put the nail in the coffin of logical positivism. Quine introduced a phrase I discussed in my last post – underdetermination of theory by data – in his 1951 “Two Dogmas of Empiricism,” often called the most important philosophical article of the 20th century. Quine’s article isn’t about ethics; it’s about scientific method. As Quine later said in Ontological Relativity and Other Essays (1969):

I see philosophy not as groundwork for science, but as continuous with science. I see philosophy and science as in the same boat – a boat which we can rebuild only at sea while staying afloat in it. There is no external vantage point, no first philosophy. All scientific findings, all scientific conjectures that are at present plausible, are therefore in my view as welcome for use in philosophy as elsewhere.

Philosophy helps us to know what science is. But then, what is philosophy, you might ask. If so, you’re halfway there.


—

Philosophy is the art of asking questions that come naturally to children, using methods that come naturally to lawyers. – David Hills in Jeffrey Kasser’s The Philosophy of Science lectures

The aim of philosophy, abstractly formulated, is to understand how things in the broadest possible sense of the term hang together in the broadest possible sense of the term. – Wilfrid Sellars, “Philosophy and the Scientific Image of Man,” 1962

This familiar desk manifests its presence by resisting my pressures and by deflecting light to my eyes. – WVO Quine, Word and Object, 1960

My Trouble with Bayes

Posted by Bill Storage in Philosophy of Science, Probability and Risk, Uncategorized on January 21, 2016

In past consulting work I’ve wrestled with subjective probability values derived from expert opinion. Subjective probability is an interpretation of probability based on a degree of belief (i.e., hypothetical willingness to bet on a position) as opposed to a value derived from measured frequencies of occurrences (related posts: Belief in Probability, More Philosophy for Engineers). Subjective probability is of interest when failure data is sparse or nonexistent, as was the data on catastrophic loss of a space shuttle due to seal failure. Bayesianism is one form of inductive logic aimed at refining subjective beliefs based on Bayes Theorem and the idea of rational coherence of beliefs. A NASA handbook explains Bayesian inference as the process of obtaining a conclusion based on evidence, “Information about a hypothesis beyond the observable empirical data about that hypothesis is included in the inference.” Easier said than done, for reasons listed below.

Bayes Theorem itself is uncontroversial. It is a mathematical expression relating the probability of A given that B is true to the probability of B given that A is true and the individual probabilities of A and B:

P(A|B) = P(B|A) x P(A) / P(B)

If we’re trying to confirm a hypothesis (H) based on evidence (E), we can substitute H and E for A and B:

P(H|E) = P(E|H) x P(H) / P(E)
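As a minimal sketch, the theorem translates directly into a one-line function. The function name `posterior` and all three input numbers are hypothetical, chosen only to show the arithmetic.

```python
def posterior(p_e_given_h, p_h, p_e):
    """Bayes Theorem: P(H|E) = P(E|H) x P(H) / P(E)."""
    if not 0.0 < p_e <= 1.0:
        raise ValueError("P(E) must be in (0, 1]")
    return p_e_given_h * p_h / p_e


# Hypothetical inputs: a 1% prior for H, evidence that is 90% likely if H
# is true, and evidence that is 5% likely overall.
p_h_given_e = posterior(p_e_given_h=0.9, p_h=0.01, p_e=0.05)

print(f"P(H|E) = {p_h_given_e:.2f}")
```

With these made-up inputs, the evidence raises the probability of H from 0.01 to about 0.18. The calculation is trivial; where the three inputs come from is the hard part.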

To be rationally coherent, you’re not allowed to believe the probability of heads to be .6 while believing the probability of tails to be .5; the chances of all possible outcomes must sum to exactly one. Further, for Bayesians, the logical coherence just mentioned (i.e., avoidance of Dutch book arguments) must hold across time (diachronic coherence) such that once new evidence E on a hypothesis H is found, your new believed probability for H should equal your prior conditional probability for H given E.
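The Dutch book argument can be illustrated with the heads/tails beliefs just mentioned. In this sketch, a bookie sells you one $1 ticket on each outcome at the price your stated belief says is fair; the names `beliefs`, `STAKE` and `net_result` are illustrative.

```python
# Incoherent beliefs: the probabilities sum to 1.1, not 1.
beliefs = {"heads": 0.6, "tails": 0.5}
STAKE = 1.00  # each ticket pays this amount if its outcome occurs


def net_result(outcome):
    """Your net gain after buying both tickets at your own 'fair' prices."""
    cost = sum(p * STAKE for p in beliefs.values())  # you pay $1.10 in total
    payout = STAKE if outcome in beliefs else 0.0    # exactly one ticket wins
    return payout - cost


results = {outcome: net_result(outcome) for outcome in beliefs}

for outcome, net in results.items():
    print(f"{outcome}: net = {net:+.2f}")
```

Whichever way the coin lands, you lose ten cents. A guaranteed loss from bets you considered fair is what coherence is meant to rule out.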

Plenty of good sources explain Bayesian epistemology and practice far better than I could do here. Bayesianism is controversial in science and engineering circles, for some good reasons. Bayesianism’s critics refer to it as a religion. This is unfair. Bayesianism is, however, like most religions, a belief system. My concern for this post is the problems with Bayesianism that I personally encounter in risk analyses. Adherents might rightly claim that problems I encounter with Bayes stem from poor implementation rather than from flaws in the underlying program. Good horse, bad jockey? Perhaps.

1. Subjectively objective

Bayesianism is an interesting mix of subjectivity and objectivity. It imposes no constraints on the subject of belief and very few constraints on the prior probability values. Hypothesis confirmation, for a Bayesian, is inherently quantitative, but initial hypotheses probabilities and the evaluation of evidence are purely subjective. For Bayesians, evidence E confirms or disconfirms hypothesis H only after we establish how probable H was in the first place. That is, we start with a prior probability for H. After the evidence, confirmation has occurred if the probability of H given E is higher than the prior probability of H, i.e., P(H|E) > P(H). Conversely, E disconfirms H when P(H|E) < P(H). These equations and their math leave business executives impressed with the rigor of objective calculation while directing their attention away from the subjectivity of both the hypothesis and its initial prior.
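To make the role of the prior concrete, here is a sketch in which three people see the same evidence but start from different priors. The likelihoods, the priors, and the labels "skeptic", "agnostic" and "believer" are all hypothetical. P(E) is expanded over H and not-H by the law of total probability.

```python
def posterior(prior, p_e_given_h, p_e_given_not_h):
    """P(H|E), with P(E) expanded over H and not-H."""
    p_e = p_e_given_h * prior + p_e_given_not_h * (1.0 - prior)
    return p_e_given_h * prior / p_e


# Hypothetical likelihoods, identical for everyone.
P_E_GIVEN_H = 0.8
P_E_GIVEN_NOT_H = 0.2

# Hypothetical priors: the only thing that differs between the three.
priors = {"skeptic": 0.01, "agnostic": 0.50, "believer": 0.90}

posteriors = {
    name: posterior(p, P_E_GIVEN_H, P_E_GIVEN_NOT_H) for name, p in priors.items()
}

for name in priors:
    confirmed = posteriors[name] > priors[name]  # P(H|E) > P(H)
    print(f"{name}: prior {priors[name]:.2f} -> posterior {posteriors[name]:.3f}, "
          f"confirmed={confirmed}")
```

E confirms H for all three, yet the resulting beliefs range from under 4% to over 97%. The arithmetic is objective; the spread comes entirely from the priors.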

2. Rational formulation of the prior

Problem 2 follows from the above. Paranoid, crackpot hypotheses can still maintain perfect probabilistic coherence. Excluding crackpots, rational thinkers – more accurately, those with whom we agree – still may have an extremely difficult time distilling their beliefs, observations and observed facts of the world into a prior.

3. Conditionalization and old evidence

This is on everyone’s short list of problems with Bayes. In the simplest interpretation of Bayes, old evidence has zero confirming power. If evidence E was on the books long ago and it suddenly comes to light that H entails E, no change in the probability of H follows. This seems odd – to most outsiders anyway. This problem gives rise to the game where we are expected to pretend we never knew about E and then judge how surprising (confirming) E would have been to H had we not known about it. As with the general matter of maintaining logical coherence required for the Bayesian program, it is extremely difficult to detach your knowledge of E from the rest of your knowing about the world. In engineering problem solving, discovering that H implies E is very common.

4. Equating increased probability with hypothesis confirmation.

My having once met Hillary Clinton arguably increases the probability that I may someday be her running mate; but few would agree that it is confirming evidence that I will do so. See Hempel’s raven paradox.

5. Stubborn stains in the priors

Bayesians, often citing success in the business of establishing and adjusting insurance premiums, report that the initial subjectivity (discussed in 1, above) fades away as evidence accumulates. They call this washing-out of priors. The frequentist might respond that with sufficient evidence your belief becomes irrelevant. With historical data (i.e., abundant evidence) they can calculate P of an unwanted event in a frequentist way: P = 1 - e^(-RT), or roughly P = RT for small products of exposure time T and failure rate R (exponential distribution). When our ability to find new evidence is limited, i.e., for modeling unprecedented failures, the prior does not get washed out.
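The frequentist formula and its small-RT approximation can be compared numerically. This is a sketch; the failure rate and exposure times are hypothetical.

```python
import math


def failure_probability(rate, exposure_time):
    """P = 1 - e^(-RT) for a constant failure rate (exponential distribution)."""
    # -expm1(-x) equals 1 - exp(-x) but keeps precision when x is tiny.
    return -math.expm1(-rate * exposure_time)


def small_rt_approximation(rate, exposure_time):
    """P ~ RT, valid only when the product RT is much less than 1."""
    return rate * exposure_time


RATE = 1e-5  # hypothetical failures per hour

for hours in (10, 1_000, 200_000):
    exact = failure_probability(RATE, hours)
    approx = small_rt_approximation(RATE, hours)
    print(f"T={hours:>7} h  exact={exact:.6f}  RT={approx:.6f}")
```

For RT = 0.0001 the two agree to within a fraction of a percent. For RT = 2 the approximation returns a "probability" of 2, so the shortcut must be dropped well before that.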

6. The catch-all hypothesis

The denominator of Bayes Theorem, P(E), in practice, must be calculated as the sum of the probability of the evidence given the hypothesis, weighted by the probability of the hypothesis, plus the probability of the evidence given not the hypothesis, weighted by the probability of not the hypothesis:

P(E) = [P(E|H) x P(H)] + [P(E|~H) x P(~H)]

But ~H (“not H”) is not itself a valid hypothesis. It is a family of hypotheses likely containing what Donald Rumsfeld famously called unknown unknowns. Thus calculating the denominator P(E) forces you to pretend you’ve considered all contributors to ~H. So Bayesians can be lured into a state of false choice. The famous example of such a false choice in the history of science is Newton’s particle theory of light vs. Huygens’ wave theory of light. Hint: they are both wrong.
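The effect of an unconsidered alternative on the denominator can be sketched as follows. All priors and likelihoods are invented for illustration; only the hypothesis labels echo the particle-versus-wave example. The function treats whatever hypotheses it is given as exhaustive, which is exactly the pretense at issue.

```python
def posteriors(priors, likelihoods):
    """Posterior for each hypothesis, treating the given set as exhaustive."""
    joint = {h: priors[h] * likelihoods[h] for h in priors}
    p_e = sum(joint.values())  # the denominator P(E)
    return {h: value / p_e for h, value in joint.items()}


# False choice: only two hypotheses are on the table (hypothetical numbers).
two_way = posteriors(
    priors={"particle": 0.5, "wave": 0.5},
    likelihoods={"particle": 0.6, "wave": 0.2},
)

# Same evidence once a third, previously unconsidered hypothesis is admitted.
three_way = posteriors(
    priors={"particle": 0.4, "wave": 0.4, "neither": 0.2},
    likelihoods={"particle": 0.6, "wave": 0.2, "neither": 0.9},
)

print(f"particle, two-way:   {two_way['particle']:.2f}")
print(f"particle, three-way: {three_way['particle']:.2f}")
```

With these made-up numbers, confidence in the particle hypothesis falls from 0.75 to 0.48 once the third option is admitted. An analyst who never thinks of the third option never sees the lower number.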

7. Deference to the loudmouth

This problem is related to no. 1 above, but has a much more corporate, organizational component. It can’t be blamed on Bayesianism but nevertheless plagues Bayesian implementations within teams. In the group formulation of any subjective probability, normal corporate dynamics govern the outcome. The most senior or deepest-voiced actor in the room drives all assignments of subjective probability. Social influence rules and the wisdom of the crowd succumbs to a consensus building exercise, precisely where consensus is unwanted. Seidenfeld, Kadane and Schervish begin “On the Shared Preferences of Two Bayesian Decision Makers” with the scholarly observation that an outstanding challenge for Bayesian decision theory is to extend its norms of rationality from individuals to groups. Their paper might have been illustrated with the famous photo of the exploding Challenger space shuttle. Bayesianism’s tolerance of subjective probabilities combined with organizational dynamics and the shyness of engineers can be a recipe for disaster of the Challenger sort.

All opinions welcome.

Arianna Huffington, Wisdom, and Stoicism 1.0

Posted by Bill Storage in Uncategorized on April 3, 2014

Huffington began with the story of her wake-up call to the idea that success is killing us. She told of collapsing from exhaustion, hitting the corner of her desk on the way down, gashing her forehead and breaking her cheek bone.

She later realized that “by any sane definition of success, if you are lying in a pool of blood on the floor of your office you’re not a success.”

After this epiphany Huffington began an inquiry into the meaning of success. The first big change was realizing that she needed much more sleep. She joked that she now advises women to sleep their way to the top. Sleep is a wonder drug.

Her reexamination of success also included personal values. She referred to ancient philosophers who asked what is a good life. She explicitly identified her current doctrine with that of the Stoics (not to be confused with modern use of the term stoic). “Put joy back in our everyday lives,” she says. She finds that we have shrunken the definition of success down to money and power, and now we need to expand it again. Each of us needs to define success by our own criteria, hence the name of her latest book. The third metric in her book’s title includes focus on well-being, wisdom, wonder, and giving.

Refreshingly (for me at least) Huffington drew repeatedly on ancient western philosophy, mostly that of the Stoics. In keeping with the Stoic style, her pearls often seem self-evident only after the fact:

“The essence of what we are is greater than whatever we are in the world.”

Take risk. See failure as part of the journey, not the opposite of success. (paraphrased)

I do not try to dance better than anyone else. I only try to dance better than myself.

“We may not be able to witness our own eulogy, but we’re actually writing it all the time, every day.”

“It’s not ‘What do I want to do?’, it’s ‘What kind of life do I want to have?’”

“Being connected in a shallow way to the entire world can prevent us from being deeply connected to those closest to us, including ourselves.”

“‘My life has been full of terrible misfortunes, most of which never happened.'” (citing Montaigne)

As you’d expect, Huffington and Sandberg suggested that male-dominated corporate culture betrays a dearth of several of the qualities embodied in Huffington’s third metric. Huffington said the most popular book among CEOs is the Chinese military treatise, The Art of War. She said CEOs might do better to read children’s books like Silverstein’s The Giving Tree or maybe Make Way for Ducklings. Fair enough; there are no female Bernie Madoffs.

I was pleasantly surprised by Huffington. I found her earlier environmental pronouncements to be poorly conceived. But in this talk on success, wisdom, and values, she shone. Huffington plays the part of a Stoic well, though some of the audience seemed to judge her more of a sophist. One attendee asked her if she really believed that living the life she identified in Thrive could have possibly led to her current success. Huffington replied yes, of course, adding that she, like Bill Clinton, found they’d made all their biggest mistakes while tired.

Huffington’s quotes above align well with the ancients. Consider these from Marcus Aurelius, one of the last of the great Stoics:

Everything we hear is an opinion, not a fact. Everything we see is a perspective, not the truth.

Very little is needed to make a happy life; it is all within yourself, in your way of thinking.

Confine yourself to the present.

Be content to seem what you really are.

The object of life is not to be on the side of the majority, but to escape finding oneself in the ranks of the insane.

I particularly enjoyed Huffington’s association of sense-of-now, inner calm, and wisdom with Stoicism, rather than, as is common in Silicon Valley, with a misinformed and fetishized understanding of Buddhism. Further, her fare was free of the intellectualization of mysticism that’s starting to plague Wisdom 2.0. It was a great performance.

————————

Preach not to others what they should eat, but eat as becomes you, and be silent. – Epictetus

Common-Mode Failure Driven Home

Posted by Bill Storage in Probability and Risk, Risk Management, Uncategorized on February 3, 2014

In a recent post I mentioned that probabilistic failure models are highly vulnerable to wrong assumptions of independence of failures, especially in redundant system designs. Common-mode failures in multiple channels defeat the purpose of redundancy in fault-tolerant designs. Likewise, if probability of non-function is modeled (roughly) as historical rate of a specific component failure times the length of time we’re exposed to the failure, we need to establish that exposure time with great care. If only one channel is in control at a time, failure of the other channel can go undetected. Monitoring systems can detect such latent failures. But then failures of the monitoring system tend to be latent.
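
To put rough numbers on the independence point, here is a minimal sketch. It assumes a two-channel redundant system and borrows the beta-factor idea from reliability practice, in which some fraction of each channel's failures is taken to come from a cause shared by both channels. The function names, the per-channel probability, and the 5% shared fraction are all made up for illustration; none of them come from a real system.

```python
def both_fail_independent(p_channel: float) -> float:
    """Probability that both channels fail, assuming full independence."""
    return p_channel * p_channel


def both_fail_with_common_cause(p_channel: float, beta: float) -> float:
    """Beta-factor sketch: a fraction `beta` of each channel's failures is
    assumed to come from a shared cause that takes out both channels at once."""
    independent_part = ((1.0 - beta) * p_channel) ** 2
    common_part = beta * p_channel
    return independent_part + common_part


# Hypothetical numbers, chosen only for illustration.
P_CHANNEL = 1e-3   # assumed chance that one channel fails during a mission
BETA = 0.05        # assumed fraction of failures that are common-mode

naive = both_fail_independent(P_CHANNEL)
realistic = both_fail_with_common_cause(P_CHANNEL, BETA)
print(f"independent model:  {naive:.2e}")
print(f"with common cause:  {realistic:.2e}")
print(f"ratio:              {realistic / naive:.1f}")
```

With these assumed values the common-cause term dominates, and the modeled chance of losing both channels comes out about fifty times higher than the independent model suggests. The second channel still helps, but far less than multiplying two small numbers would imply.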

For example, your car’s dashboard has an engine oil warning light. That light ties to a monitor that detects oil leaks from worn gaskets or loose connections before the oil level drops enough to cause engine damage. Without that dashboard warning light, the exposure time to an undetected slow leak is months – the time between oil changes. The oil warning light alerts you to the condition, giving you time to deal with it before your engine seizes.

But what if the light is burned out? This failure mode is why the warning lights flash on for a short time when you start your car. In theory, you’d notice a burnt-out warning light during the startup monitor test. If you don’t notice it, the exposure time for an oil leak becomes the exposure time for failure of the warning light. Assuming you change your engine oil every 9 months, loss of the monitor potentially increases the exposure time from minutes to months, multiplying the probability of an engine problem by several orders of magnitude. Aircraft and nuclear reactors contain many such monitoring systems. They need periodic maintenance to ensure they’re able to detect failures. The monitoring systems rarely show problems in the check-ups; and this fact often lures operations managers, perceiving that inspections aren’t productive, into increasing maintenance intervals. Oops. Those maintenance intervals were actually part of the system design, derived from some quantified level of acceptable risk.
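
The oil-light arithmetic can be sketched in a few lines. This assumes a constant leak rate, so the chance of at least one undetected leak over an exposure time t is 1 - exp(-rate * t), which for small values is close to the rate-times-exposure model described above. The leak rate and the 15-minute detection window are hypothetical numbers picked for illustration; only the 9-month oil change interval comes from the text.

```python
import math


def prob_of_event(rate_per_hour: float, exposure_hours: float) -> float:
    """Chance of at least one event at a constant rate: 1 - exp(-rate * t).
    For small rate * t this is approximately rate * t."""
    return -math.expm1(-rate_per_hour * exposure_hours)


# Hypothetical values, for illustration only.
LEAK_RATE_PER_HOUR = 1e-5          # assumed rate of a slow oil leak starting
WORKING_LIGHT_EXPOSURE_H = 0.25    # assumed ~15 minutes before the light warns you
DEAD_LIGHT_EXPOSURE_H = 9 * 30 * 24  # 9 months between oil changes, in hours

p_with_light = prob_of_event(LEAK_RATE_PER_HOUR, WORKING_LIGHT_EXPOSURE_H)
p_without_light = prob_of_event(LEAK_RATE_PER_HOUR, DEAD_LIGHT_EXPOSURE_H)

print(f"working light: {p_with_light:.2e}")
print(f"dead light:    {p_without_light:.2e}")
print(f"ratio:         {p_without_light / p_with_light:.0f}")
```

The rate is the same in both cases. Only the exposure time changes, and under these assumptions that alone moves the result by more than four orders of magnitude.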

Common-mode failures get a lot of press when they’re dramatic. They’re often used by risk managers as evidence that quantitative risk analysis of all types doesn’t work. Fukushima is the current poster child of bad quantitative risk analysis. Despite everyone’s agreement that any frequencies or probabilities used in Fukushima analyses prior to the tsunami were complete garbage, the result for many was to conclude that probability theory failed us. Opponents of risk analysis also regularly cite the Tacoma Narrows Bridge collapse, the Chicago DC-10 engine-loss disaster, and the Mount Osutaka 747 crash as examples. But none of the affected systems in these disasters had been justified by probabilistic risk modeling. Finally, common-mode failure is often cited in cases where it isn’t the whole story, as with the Sioux City DC-10 crash. More on Sioux City later.

On the lighter side, I’d like to relate two incidents – one personal experience, one from a neighbor – that exemplify common-mode failure and erroneous assumptions of exposure time in everyday life, to drive the point home with no mathematical rigor.

I often ride my bicycle through affluent Marin County. Last year I stopped at the Molly Stone grocery in Sausalito, a popular biker stop, to grab some junk food. I locked my bike to the bike rack, entered the store, grabbed a bag of chips and checked out through the fast lane with no waiting. Ninety seconds at most. I emerged to find no bike, no lock and no thief.

I suspect that, as a risk man, I unconsciously model all risk as the combination of some numerical rate (occurrence per hour) times some exposure time. In this mental model, the exposure time to bike theft was 90 seconds. I likely judged the rate to be more than zero but still pretty low, given broad daylight, the busy location with lots of witnesses, and the affluent community. Not that I built such a mental model explicitly of course, but I must have used some unconscious process of that sort. Thinking like a crook would have served me better.

If you were planning to steal an expensive bike, where would you go to do it? Probably a place with a lot of expensive bikes. You might go there and sit in your pickup truck with a friend waiting for a good opportunity. You’d bring a 3-foot long set of chain link cutters to make quick work of the 10 mm diameter stem of a bike lock. Your friend might follow the victim into the store to ensure you were done cutting the lock and throwing the bike into the bed of your pickup to speed away before the victim bought his snacks.

After the fact, I had much different thoughts about this specific failure rate. More important, what is the exposure time when the thief is already there waiting for me, or when I’m being stalked?

My neighbor just experienced a nerve-racking common-mode failure. He lives in a San Francisco high-rise and drives a Range Rover. His wife drives a Mercedes. He takes the Range Rover to work, using the same valet parking-lot service every day. He’s known the attendant for years. He takes his house key from the ring of vehicle keys, leaving the rest on the visor for the attendant. He waves to the attendant as he leaves the lot on his way to the office.

One day last year he erred in thinking the attendant had seen him. Someone else, now quite familiar with his arrival time and habits, got to his Range Rover while the attendant was moving another car. The thief drove out of the lot without the attendant noticing. Neither my neighbor nor the attendant had reason for concern. This gave the enterprising thief plenty of time. He explored the glove box, finding the registration, which includes my neighbor’s address. He also noticed the electronic keys for the Mercedes.

The thief enlisted a trusted colleague, and drove the stolen car to my neighbor’s home, where they used the electronic garage entry key tucked neatly into its slot in the visor to open the gate. They methodically spiraled through the garage, periodically clicking the button on the Mercedes key. Eventually they saw the car lights flash and they split up, each driving one vehicle out of the garage using the provided electronic key fobs. My neighbor lost two cars through common-mode failures. Fortunately, the whole thing was on tape and the law men were effective; no vehicle damage.

Should I hide my vehicle registration, or move to Michigan?

—————–

In theory, there’s no difference between theory and practice. In practice, there is.
