Posts Tagged Decision analysis

Smart Folk Often Full of Crap, Study Finds

Posted by Bill Storage in Uncategorized on April 22, 2021

For most of us, there is a large gap between what we know and what we think we know. We hold a level of confidence about our factual knowledge and predictions that doesn’t match our abilities. Since our personal decisions are really predictions about the future based on our available present knowledge, it makes sense to work toward adjusting our confidence to match our skill.

Last year I measured the knowledge-confidence gap of 3500 participants in a trivia game with a twist. For each True/False trivia question the respondents specified their level of confidence (between 50 and 100% inclusive) with each answer. The questions, presented in banks of 10, covered many topics and ranged from easy (American stop signs have 8 sides) to expert (Stockholm is further west than Vienna).

I ran this experiment on a website and mobile app using 1500 True/False questions, about half of which belonged to specific categories including music, art, current events, World War II, sports, movies and science. Visitors could choose either the category “Various” or a specific category. I asked for personal information such as age, gender, current profession, title, and education. About 20% of site visitors gave most of that information. 30% provided their professions.

Participants were told that the point of the game was not to get the questions right but to have an appropriate level of confidence. For example, if your average confidence value is 75%, 75% of your answers should be correct. If your confidence and accuracy match, you are said to be calibrated. Otherwise you are either overconfident or underconfident. Overconfidence – sometimes extreme – is more common, though a small percentage are significantly underconfident.
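As a minimal sketch of the calibration measure described above, overconfidence can be computed as mean stated confidence minus the fraction of correct answers. The function name and the sample bank of ten answers are hypothetical illustrations, not the quiz system's actual code or data.

```python
from statistics import mean

def overconfidence(responses):
    """Return mean confidence minus accuracy.

    responses: list of (confidence, was_correct) pairs, with confidence
    expressed as a fraction between 0.5 and 1.0. A positive result means
    overconfident, a negative one underconfident, zero calibrated.
    """
    mean_confidence = mean(conf for conf, _ in responses)
    accuracy = mean(1.0 if correct else 0.0 for _, correct in responses)
    return mean_confidence - accuracy

# Hypothetical bank of 10 answers: average confidence 75%, 6 of 10 correct.
bank = [(0.9, True), (0.9, False), (0.8, True), (0.8, True), (0.7, False),
        (0.7, True), (0.6, True), (0.6, False), (1.0, True), (0.5, False)]

gap = overconfidence(bank)
print(f"overconfidence: {gap:.0%}")
```

In this made-up bank, stated confidence averages 75% but only 60% of answers are right, so the respondent is about 15 points overconfident.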

Overconfidence in group decisions is particularly troubling. Groupthink – collective overconfidence and rationalized cohesiveness – is a well-known example. A more common, more subtle, and often more dangerous case exists when social effects and the perceived superiority of judgment of a single overconfident participant lead to unconscious suppression of valid input from a majority of team members. The latter, for example, explains the Challenger launch decision far more than classic groupthink does, though groupthink is often cited as the cause.

I designed the trivia quiz system so that each group of ten questions under the Various label included one that dealt with a subject about which people are particularly passionate – environmental or social justice issues. I got this idea from Hans Rosling’s book, Factfulness. As expected, respondents were both overwhelmingly wrong and acutely overconfident about facts tied to emotional issues, e.g., net change in Amazon rainforest area in the last five years.

I encouraged people to take a few passes through the Various category before moving on to the specialty categories. Assuming that the first specialty category that respondents chose was their favorite, I found them to be generally more overconfident about topics they presumably knew best. For example, those who first selected Music and then Art showed both higher resolution (correctness) and higher overconfidence in Music than they did in Art.

Mean overconfidence for all first-chosen specialties was 12%. Mean overconfidence for second-chosen categories was 9%. One interpretation is that people are more overconfident about that which they know best. Respondents’ overconfidence decreased progressively as they answered more questions. In that sense the system served as confidence calibration training. Relative overconfidence in the first specialty category chosen was present even when the effect of improved calibration was screened off, however.

For the first 10 questions, mean overconfidence in the Various category was 16% (16% for males, 14% for females). Mean overconfidence for the nine questions in each group excepting the “passion” question was 13%.

Overconfidence seemed to be constant across professions, but increased about 1.5% with each level of college education. PhDs are 4.2% more overconfident than high school grads. I’ll leave that to sociologists of education to interpret. A notable exception was a group of analysts from a research lab who were all within a point or two of perfect calibration even on their first 10 questions. Men were slightly more overconfident than women. Underconfidence (more than 5% underconfident) was absent in men and present in 6% of the small group identifying as women (98 total).

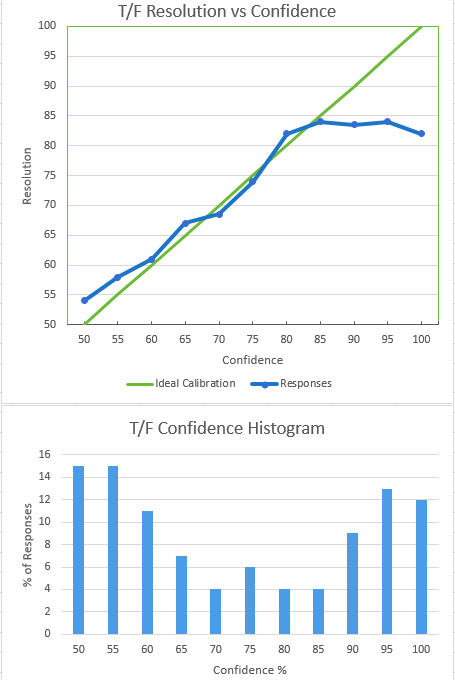

The nature of overconfidence is seen in the plot of resolution (response correctness) vs. confidence. Our confidence roughly matches our accuracy up to the point where confidence is moderately high, around 85%. After this, increased confidence occurs with no increase in accuracy. At the 100% confidence level, respondents were, on average, less correct than they were at 95% confidence. Much of that effect stemmed from the one “trick” question in each group of 10; people tend to be confident but wrong about hot topics with high media coverage.
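A plot like the one described can be built by grouping answers by stated confidence and computing the fraction correct in each group. The sketch below assumes confidence is recorded as an integer percentage; the function name and the sample responses are hypothetical and are not the study's data.

```python
from collections import defaultdict

def calibration_curve(responses):
    """Map each stated confidence level to the observed accuracy at that level.

    responses: iterable of (confidence_percent, was_correct) pairs.
    Returns a dict ordered by confidence, e.g. {60: 0.6, 85: 0.8}.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for confidence, was_correct in responses:
        totals[confidence] += 1
        hits[confidence] += int(was_correct)
    return {c: hits[c] / totals[c] for c in sorted(totals)}

# Hypothetical answers: accuracy tracks confidence at 60%, then stops rising.
responses = (
    [(60, True)] * 3 + [(60, False)] * 2 +
    [(85, True)] * 4 + [(85, False)] * 1 +
    [(100, True)] * 4 + [(100, False)] * 1
)

curve = calibration_curve(responses)
for confidence, accuracy in curve.items():
    print(f"stated {confidence}% -> correct {accuracy:.0%}")
```

A perfectly calibrated respondent would show accuracy equal to stated confidence at every level; a flat stretch at the high end is the signature of overconfidence.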

The distribution of confidence values expressed by participants was nominally bimodal. People expressed very high or very low confidence about the accuracy of their answers. The slight bump in confidence at 75% is likely an artifact of the test methodology. The default value of the confidence slider (website user interface element) was 75%. On clicking the Submit button, users were warned if most of their responses specified the default value, but an acquiescence effect appears to have been present anyway. In Superforecasting, Philip Tetlock observed that many people seem to have a “three settings” (yes, no, maybe) mindset about matters of probability. That could also explain the slight peak at 75%.

I’ve been using a similar approach to confidence calibration in group decision settings for the past three decades. I learned it from a DoD publication by Sarah Lichtenstein and Baruch Fischhoff while working on the Midgetman Small Intercontinental Ballistic Missile program in the mid 1980s. Doug Hubbard teaches a similar approach in his book The Failure of Risk Management. In my experience with diverse groups contributing to risk analysis, where group decisions about likelihood of uncertain events are needed, an hour of training using similar tools yields impressive improvements in calibration as measured above.

Mazateconomics

Posted by Bill Storage in Economics on August 29, 2019

(2nd post on rational behavior of people too hastily judged irrational)

“These villagers have some really messed-up building practices.”

That’s a common reaction by gringos on first visiting rural Mexico. They see half-completed brick or cinder-block walls, apparently abandoned for a year or more, or exposed rebar sticking up from the roof of a one-story structure. It’s a pretty common sight.

In the 1990s I spent a few months in some pretty remote places in southern Mexico’s Sierra Madre Oriental mountains exploring caves. The indigenous people, Mazatecs and Chinantecs, were corn and coffee growers, depending on elevation and rain, which vary wildly over short distances. I traveled to San Agustin Zaragoza, a few miles from Huautla de Jimenez, home of Maria Sabina and the birthplace of the American psychedelic drug culture. San Agustin was mostly one-room thatched-roof stone houses, a few of brick or block, a few with concrete walls or floors. One had glass windows. Most had electricity, though often a single bulb hanging from a beam. No phones for miles. Several cinder block houses had rebar sticking from their flat roofs.

Talking with the adult Mazatecs of San Agustin wasn’t easy. Few of them spoke Spanish, but all their kids were learning it. Since we were using grade-school kids as translators, and our Spanish was weak to start with, we rarely went deep into politics or philosophy.

Juan Felix’s son Luis told me, after we got to know each other a bit, that when he turned fourteen he’d be heading off to a boarding school. He wanted to go. His dad had explained to Luis that life beyond the mountains of Oaxaca was an option. Education was the way out.

Mazatecs get far more cooperation from their kids than US parents do. This observation isn’t mere noble-savage worship. They consciously create early opportunities for toddlers to collaborate with adults in house and field work. They do this fully aware that the net contribution from young kids is negative; adults have to clean up messes made by honest efforts of preschoolers. But by age 8 or 9, kids don’t shun household duties. The American teenager phenomenon is nonexistent in San Agustin.

Juan Felix was a thinker. I asked Luis to help me ask his dad some questions. What’s up with the protruding rebar, I asked. Follow the incentives, Juan Felix said in essence. Houses under construction are taxed as raw land; completed houses have a higher tax rate. Many of the locals, having been relocated from more productive land now beneath a lowland reservoir, were less than happy with their federal government.

Back then Mexican Marxists patrolled the deeply-rutted mud roads in high-clearance trucks blasting out a bullhorn message that the motives of the Secretariat of Hydraulic Resources had been ethnocidal and that the SHR sought to force the natives into an evil capitalist regime by destroying their cultural identity, etc. Despite being victims of relocation, the San Agustin residents didn’t seem to buy the argument. While there was still communal farming in the region, ejidos were giving way to privately owned land.

Chinanteconomics

A few years later, caver Louise Hose and I traveled to San Juan Zautla, also in the state of Oaxaca, to look for caves. Getting there was a day of four-wheeling followed by a two-day walk over mountainous dirt trails. It was as remote a place as I could find in North America. We stopped overnight in the village of Tecomaltianguisco and discussed our travel plans. We were warned that we might be unwelcome in Zautla.

On approaching Zautla we made enough noise to ensure we weren’t surprising anyone. Zautlans speak Sochiapam Chinantec. Like Mazatec, it is a highly tonal language, so much so that they can conduct full conversations over distance by whistling the tones that would otherwise accompany speech. Knowing that we were being whistled about was unnerving, though had they been talking, we wouldn’t have understood a word of their language any more than we would understand an etic tone of it.

But the Zautla residents welcomed us with open arms, gave us lodging, and fed us, including the fabulous black persimmons they grew there along with coffee. Again communicating through their kids, they told us we were the first brown-hairs that had ever visited Zautla. They guessed that the last outsiders to arrive there were the Catholic Spaniards who had brought the town bell for a tower that was never built. The Zautlans are not Catholic. They showed us the bell. Its inscription included a date in the 1700’s. Today there’s a road to Zautla. Census data says that in 2015 100% of the population (1200) was still indigenous and that there were no land lines, no cell phones and no internet access.

In Zautla I saw very little exposed rebar, but partially-completed block walls were everywhere. I doubted that property-tax assessors spent much time in Zautla, so the tax story didn’t seem to apply. So, through a 10-year-old, I asked the jefe about the construction practices, which to outsiders appeared to reflect terrible planning.

Jefe Miguel laid it out. Despite their remote location, they still purchased most of their construction materials in distant Cuicatlan. Mules carried building materials over the dirt trail that brought us to Zautla. Inflation in Mexico had been running at 70% annually, compounding to over 800% for the last decade. Cement, mortar and cinder block are non-depreciating assets in a high inflation economy, Miguel told us. Buying construction materials as early as possible makes economic sense. Paying high VAT on the price of materials added insult to inflationary injury. Blocks take up a lot of space so you don’t want to store them indoors. While theft is uncommon, it’s still a concern. Storing them outdoors is made safer by gluing them down with mortar where the new structure is planned. Of course it’s not ideal, but don’t blame Zautla, blame the monetary tomfoolery of the PRI – Partido Revolucionario Institucional. Zautla economics 101.

San Agustin Christmas Eve 1988.

Bernard on fiddle, Jaime on Maria Sabina’s guitar.

San Agustin Zaragoza from the trail to Santa Maria la Asuncion

On the trail from San Agustin to Santa Maria la Asuncion

On the trail from Tecomaltianguisco to San Juan Zautla

San Juan Zautla, Feb. 1992

The karst valley below Zautla

Chinantec boy with ancient tripod bowl

Mountain view from Zautla

Multiple-Criteria Decision Analysis in the Engineering and Procurement of Systems

Posted by Bill Storage in Aerospace, Multidisciplinarians, Systems Engineering on March 26, 2014

The use of weighted-sum value matrices is a core component of many system-procurement and organizational decisions including risk assessments. In recent years the USAF has eliminated weighted-sum evaluations from most procurement decisions. They’ve done this on the basis that system requirements should set accurate performance levels that, once met, reduce procurement decisions to simple competition on price. This probably oversimplifies things. For example, the acquisition cost for an aircraft system might be easy to establish. But life cycle cost of systems that include wear-out or limited-fatigue-life components requires forecasting and engineering judgments. In other areas of systems engineering, such as trade studies, maintenance planning, spares allocation, and especially risk analysis, multi-attribute or multi-criterion decisions are common.

Weighted-sum criterion matrices (and their relatives, e.g., weighted-product, AHP, etc.) are often criticized in engineering decision analysis for some valid reasons. These include non-independence of criteria, difficulties in normalizing and converting measurements and expert opinions into scores, and logical/philosophical concerns about decomposing subjective decisions into constituents.

Years ago, a team of systems engineers and I, while working through the issues of using weighted-sum matrices to select subcontractors for aircraft systems, experimented with comparing the problems we encountered in vendor selection to the unrelated multi-attribute decision process of mate selection. We met the same issues in attempting to create criteria, weight those criteria, and establish criteria scores in both decision processes, despite the fact that one process seems highly technical, the other one completely non-technical. This exercise emphasized the degree to which aircraft system vendor selection involves subjective decisions. It also revealed that despite the weaknesses of using weighted sums to make decisions, the process of identifying, weighting, and scoring the criteria for a decision greatly enhanced the engineers’ ability to give an expert opinion. But this final expert opinion was often at odds with that derived from weighted-sum scoring, even after attempts to adjust the weightings of the criteria.

Weighted-sum and related numerical approaches to decision-making interest me because I encounter them in my work with clients. They are central to most risk-analysis methodologies, and, therefore, central to risk management. The topic is inherently multidisciplinary, since it entails engineering, psychology, economics, and, in cases where weighted sums derive from multiple participants, social psychology.

This post is an introduction after the fact to my previous post, How to Pick a Spouse. I’m writing this brief prequel because blog excerpting tools tend to use only the first few lines of a post, and on that basis my post appeared to be about mate selection rather than decision analysis, its main point.

If you’re interested in multi-attribute decision-making in the engineering of systems, please continue now to How to Pick a Spouse.

————-

Katz’s Law: Humans will act rationally when all other possibilities have been exhausted.

How to Pick a Spouse

Posted by Bill Storage in Aerospace, Systems Engineering on March 19, 2014

Beckhap’s Law asserts that brains times beauty equals a constant. Can this be true? Are intellect and beauty quantifiable? Is beauty a property of the subject of investigation, or a quality of the mind of the beholder? Are any other relevant variables (attributes) intimately tied to brains or beauty? Assuming brains and beauty both are desirable, Beckhap’s Law implies an optimization exercise – picking a point on the reciprocal function representing the best compromise between brains and beauty. Presumably, this point differs for all evaluators. It raises questions about the marginal utility of brains and beauty. Is it possible that too much brain or too much beauty could be a liability? (Engineers would call this an edge-case check of Beckhap’s validity.) Is Beckhap’s Law of any use without a cost axis? Other axes? In practice, if taken seriously, Beckhap’s Law might be merely one constraint in a multi-attribute decision process for selecting a spouse. It also sheds light on the problems of Air Force procurement of the components of a weapons system and a lot of other decisions. I’ll explain why.

I’ll start with an overview of how the Air Force oversees contract awards for aircraft subsystems – at least how it worked through most of USAF history, before recent changes in procurement methods. Historically, after awarding a contract to an aircraft maker, the aircraft maker’s engineers wrote specs for its systems. Vendors bid on the systems by creating designs described in proposals submitted for competition. The engineers who wrote the specs also created a list of a few dozen criteria, with weightings for each, on which they graded the vendors’ proposals. The USAF approved this criteria list and their weightings before vendors submitted their proposals to ensure the fairness deserved by taxpayers. Pricing and life-cycle cost were similarly scored by the aircraft maker. The bidder with the best total score got the contract.

A while back I headed a team of four engineers, all single men, designing and spec’ing out systems for a military jet. It took most of a year to write these specs. Six months later we received proposals hundreds of pages long. We graded the proposals according to our pre-determined list of criteria. After computing the weighted sums (sums of score times weight for each criterion) I asked the engineers if the results agreed with their subjective judgments. That is, did the scores agree with the subjective judgment of best bidder made by these engineers independent of the scoring process? Only about half of them did. I asked the team why they thought the score results differed from their subjective judgments.
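For readers who have not used one, the weighted-sum calculation itself is simple. The sketch below is only an illustration: the three criteria, their weightings, the vendor names, and the scores are all invented, whereas the real lists ran to a few dozen criteria.

```python
def weighted_sum(scores, weights):
    """Sum of score times weight over every criterion in the weighting list."""
    return sum(weights[criterion] * scores[criterion] for criterion in weights)

# Hypothetical weightings, fixed before any proposal is read.
weights = {"reliability": 5, "product_weight": 3, "maintainability": 2}

# Hypothetical 0-100 scores assigned to each proposal after grading.
proposals = {
    "Vendor A": {"reliability": 80, "product_weight": 90, "maintainability": 60},
    "Vendor B": {"reliability": 90, "product_weight": 70, "maintainability": 70},
}

totals = {vendor: weighted_sum(scores, weights)
          for vendor, scores in proposals.items()}
winner = max(totals, key=totals.get)

for vendor, total in totals.items():
    print(vendor, total)
print("contract goes to", winner)
```

With these made-up numbers Vendor B edges out Vendor A by 800 to 790, a margin small enough that a modest change to any one weighting or score would reverse the award.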

They proposed several theories. A systems engineer, viewing the system from the perspective of its interactions and interfaces with the entire aircraft, may not be familiar with all the internal details of the system while writing specs. You learn a lot of these details by reading the vendors’ proposals. So you’re better suited to create the criteria list after reading proposals. But the criteria and their weightings are fixed at that point because of the fairness concern. Anonymized proposals might preserve fairness and allow better criteria lists, one engineer offered.

But there was more to the disconnect between their subjective judgments of “best candidate” and the computed results. Someone immediately cited the problem of normalization. Converting weight in pounds, for example, to a dimensionless score (e.g., a grade of 0 to 100) was problematic. If minimum product weight is the goal, how do you convert three vendors’ product weights into grades on the 100-point scale? Giving the lowest weight 100 points and subtracting the percentage weight delta of the others feels arbitrary – because it is. Doing so compresses the scores excessively – making you want to assign a higher weighting to product-weight to compensate for the clustering of the product-weight scores. Since you’re not allowed to do that, you invent some other ad hoc means of increasing the difference between scores. In other words, you work around the weighted-sum concept to try to comply with the spirit of the rules without actually breaking the rules. But you still end up with a method in which you’re not terribly confident.
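The compression problem is easy to see with numbers. The sketch below applies the percentage-delta scheme to three invented product weights, then applies min-max rescaling as one example of an ad hoc way to spread the scores. The vendor weights and function names are hypothetical, and min-max rescaling is an illustrative stand-in, not the workaround the team actually used.

```python
def percent_delta_scores(weights_lb):
    """Lightest product gets 100; others lose their percentage excess weight."""
    lightest = min(weights_lb.values())
    return {vendor: 100 - 100 * (w - lightest) / lightest
            for vendor, w in weights_lb.items()}

def min_max_scores(weights_lb):
    """Lightest product gets 100, heaviest gets 0, others fall in between."""
    lightest = min(weights_lb.values())
    heaviest = max(weights_lb.values())
    if heaviest == lightest:
        # All products weigh the same, so none deserves a lower grade.
        return {vendor: 100.0 for vendor in weights_lb}
    return {vendor: 100 * (heaviest - w) / (heaviest - lightest)
            for vendor, w in weights_lb.items()}

# Hypothetical product weights in pounds.
product_weights = {"Vendor A": 100, "Vendor B": 104, "Vendor C": 110}

compressed = percent_delta_scores(product_weights)
spread = min_max_scores(product_weights)
print(compressed)
print(spread)
```

The first scheme squeezes all three vendors into the top tenth of the scale (100, 96, 90), so product weight barely moves the weighted sum. The second stretches the same data across the whole scale (100, 60, 0), which overstates a 10% difference. Neither choice is obviously right, which is the point.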

A bright young engineer named Hui then hit on a major problem of the weighted-sum scoring approach. He offered that the criteria in our lists were not truly independent; they interacted with each other. Further, he noted, it would be impossible to create a list of criteria that were truly independent. Nature, physics and engineering design just don’t work like that. On that thought, another engineer said that even if the criteria represented truly independent attributes of the vendors’ proposed systems, they might not be independent in a mental model of quality judgment. For example, there may be a logical quality composed of a nonlinear relationship between reliability, spares cost, support equipment, and maintainability. Engineering meets philosophy.

We spent lunch critiquing and philosophizing about multi-attribute decision-making. Where else is this relevant, I asked. Hui said, “Hmmm, everywhere?” “Dating!” said Eric. “Dating, or marriage?”, I asked. They agreed that while their immediate dating interests might suggest otherwise, all four were in fact interested in finding a spouse at some point. I suggested we test multi-attribute decision matrices on this particular decision. They accepted the challenge. Each agreed to make a list of past and potential future candidates to wed, without regard for the likelihood of any mutual interest the candidate might have. Each also would independently prepare a list of criteria on which they would rate the candidates. To clarify, each engineer would develop their own criteria, weightings, and scores for their own candidates only. No multi-party (participatory) decisions were involved; these involve other complex issues beyond our scope here (e.g., differing degrees of over/under-confidence in participants, doctrinal paradox, etc.). Sharing the list would be optional.

Nevertheless, on completing their criteria lists, everyone was happy to share criteria and weightings. There were quite a few non-independent attributes related to appearance, grooming and dress, even within a single engineer’s list. Likewise with intelligence. Then there was sense of humor, quirkiness, religious compatibility, moral virtues, education, type A/B personality, all the characteristics of Myers-Briggs, Eysenck, MMPI, and assorted personality tests. Each engineer rated a handful of candidates and calculated the weighted sum for each.

I asked everyone if their winning candidate matched their subjective judgment of who the winner should have been. A resounding no, across the board.

Some adherents of rigid multi-attribute decision processes address such disconnects between intuition and weighted-sum decision scores by suggesting that in this case we merely adjust the weightings. For example, MindTools suggests:

“If your intuition tells you that the top scoring option isn’t the best one, then reflect on the scores and weightings that you’ve applied. This may be a sign that certain factors are more important to you than you initially thought.”

To some, this sounds like an admission that subjective judgment is more reliable than the results of the numerical exercise. Regardless, no amount of adjusting scores and weights left the engineers confident that the method worked. No adjustment to the weight coefficients seemed to properly express tradeoffs between some of the attributes. I.e., no tweaking of the system ordered the candidates (from high to low) in a way that made sense to each evaluator. This meant the redesigned formula still wasn’t trustworthy. Again, the matter of complex interactions of non-independent criteria came up. The relative importance of attributes seems to change as one contemplates different aspects of a thing. A philosopher’s perspective would be that normative statements cannot be made descriptive by decomposition. Analytic methods don’t answer normative questions.
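A toy case shows why tweaking weights can fail outright when attributes interact. Assume, purely for illustration, that an evaluator's holistic judgment behaves like the product of two attribute scores, so a balanced candidate beats lopsided ones. The candidates, scores, and the product model below are all hypothetical.

```python
def linear_winner(candidates, w):
    """Top candidate by weighted sum, with weight w on the first attribute
    and 1 - w on the second."""
    return max(candidates,
               key=lambda name: w * candidates[name][0]
               + (1 - w) * candidates[name][1])

# Hypothetical candidates scored on two attributes (0-10 scale).
candidates = {"A": (10, 1), "B": (1, 10), "C": (5, 5)}

# Assumed holistic judgment: attributes interact, modelled here as a product.
holistic_winner = max(candidates,
                      key=lambda name: candidates[name][0] * candidates[name][1])

# Try 101 weightings from 0.00 to 1.00 and collect whoever comes out on top.
linear_winners = {linear_winner(candidates, i / 100) for i in range(101)}

print("holistic pick:", holistic_winner)
print("weighted-sum picks across all weightings:", sorted(linear_winners))
```

Under the product model the balanced candidate C wins, yet no weighting in the sweep ever ranks C first. A little algebra confirms it for every weighting, not just the sampled ones: C would need w below 4/9 to beat A and above 5/9 to beat B.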

Interestingly, all the engineers felt that listing criteria and scoring them helped them make better judgments about the ideal spouse, but not the judgments resulting directly from the weighted-sum analysis.

Fact is, picking which supplier should get the contract and picking the best spouse candidate are normative, subjective decisions. No amount of dividing a subjective decision into components makes it objective. Nor does any amount of ranking or scoring. A quantified opinion is still an opinion. This doesn’t mean we shouldn’t use decision matrices or quantify our sentiments, but it does mean we should not hide behind such quantifications.

From the perspective of psychology, decomposing the decision into parts seems to make sense. Expert opinion is known to be sometimes marvelous, sometimes terribly flawed. Daniel Kahneman writes extensively on associative coherence, finding that our natural, untrained tendency is to reach conclusions first, and justify them second. Kahneman and Gary Klein looked in detail at expert opinions in “Conditions for Intuitive Expertise: a Failure to Disagree” (American Psychologist, 2009). They found that short-answer expert opinion can be very poor. But they found that the subjective judgments of experts forced to examine details and contemplate alternatives – particularly when they have sufficient experience to close the intuition feedback loop – are greatly improved.

Their findings seem to support the aircraft engineers’ views of the weighted-sum analysis process. Despite the risk of confusing reasons with causes, enumerating the evaluation criteria and formally assessing them aids the subjective decision process. Doing so left them more confident about their decisions, for spouse and for aircraft system, though those decisions differed from the ones produced by weighted sums. In the case of the aircraft systems, the engineers had to live with the results of the weighted-sum scoring.

I was one of the engineers who disagreed with the results of the aircraft system decisions. The weighted-sum process awarded a very large contract to the firm whose design I judged inferior. Ten years later, service problems were severe enough that the Air Force agreed to switch to the vendor I had subjectively judged best. As for the engineer-spouse decisions, those of my old engineering team are all successful so far. It may not be a coincidence that the divorce rates of engineers are among the lowest of all professions.

——————-

Hedy Lamarr was granted a patent for spread-spectrum communication technology, paving the way for modern wireless networking.
