Archive for category Innovation management

Actively Disengaged?

Posted by Bill Storage in Innovation management on January 28, 2020

Over half of employees in America are disengaged from their jobs – 85%, according to a recent Gallup poll. About 15% are actively disengaged – so miserable that they seek to undermine the productivity of everyone else. Gallup, ADP and Towers Watson have been reporting similar numbers for two decades now. It’s an astounding claim that signals a crisis in management and the employee experience. Astounding. And it simply cannot be true.

Think about it. When you shop, eat out, sit in a classroom, meet with an accountant, hire an electrician, negotiate contracts, and talk to tech support, do you get a sense that the people you deal with truly hate their jobs? They might begrudge their boss. They might be peeved about their pay. But those problems clearly haven’t led to enough employment angst and career choice regret that they are truly disengaged. If they were, they couldn’t hide it. Most workers I encounter at all levels reveal some level of pride in their performance.

According to Bersin and Associates, we spend about a billion dollars per year to cure employee disengagement. And apparently to little effect given the persistence of disengagement reported in these surveys. The disengagement numbers don’t reconcile with our experience in the world. We’ve all seen organizational dysfunction and toxic cultures, but they are easy to recognize; i.e., they stand out from the norm. From a Bayesian logic perspective, we have rich priors about employee sentiments and attitudes, because we see them everywhere every day.

How do research firms reach such wrong conclusions about the state of engagement? That’s not entirely clear, but it probably goes beyond the fact that most of those firms offer consulting services to cure the disengagement problem. Survey researchers have long known that small variations in question wording and order profoundly affect responses (e.g. Hadley Cantril, 1944). In engagement surveys, context and priming likely play a large part.

I’m not saying that companies do a good job of promoting the right people into management; and I’m not denying that Dilbert is alive and well. I’m saying that the evidence suggests that despite these issues, most employees seek mastery of vocation; and they somehow find some degree of purpose in their work.

Successful firms realize that people will achieve mastery on their own if you get out of their way. They’re organized for learning and sensible risk-taking, not for process compliance. They’ve also found ways to align employees’ goals with corporate mission, fostering employees’ sense of purpose in their work.

Mastery seems to emerge naturally, perhaps from intrinsic motivation, when people have a role in setting their goals. In contrast, purpose, most researchers find, requires some level of top-down communications and careful trust building. Management must walk the talk to bring a mission to life.

Long ago I worked on a top secret aircraft project. After waiting a year or so on an SBI clearance, I was surprised to find that despite the standard need-to-know conditions being stipulated, the agency provided a large amount of information about the operational profile and mission of the vehicle that didn’t seem relevant to my work. Sensing that I was baffled by this, the agency’s rep explained that they had found that people were better at keeping secrets when they knew they were trusted and knew that they were a serious part of a serious mission. Never before or since have I felt such a sense of professional purpose.

Being able to see what part you play in the big picture provides purpose. A small investment in the top-down communication of a sincere message regarding purpose and risk-taking can prevent a large investment in rehiring, retraining and searching for the sources of lost productivity.

Physics for Venture Capitalists

Posted by Bill Storage in Innovation management, Philosophy of Science on August 11, 2019

VCs stress that they’re not in the business of evaluating technology. Few failures of startups are due to bad tech. Leo Polovets at Susa Ventures says technical diligence is a waste of time because few startups have significant technical risk. Success hinges on knowing customers’ needs, efficiently addressing those needs, hiring well, minding customer acquisition, and having a clue about management and governance.

In the dot-com era, I did tech diligence for Internet Capital Group. They invested in everything I said no to. Every one of those startups failed, likely for business management reasons. Had bad management not killed them, their bad tech would have in many cases. Are things different now?

Polovets is surely right in the domain of software. But hardware is making a comeback, even in Silicon Valley. A key difference between diligence on hardware and software startups is that software technology barely relies on the laws of nature. Hardware does. Hardware is dependent on science in a way software isn’t.

Silicon Valley’s love affairs with innovation and design thinking (the former being a retrospective judgement after market success, the latter mostly marketing jargon) lead tech enthusiasts and investors to believe that we can do anything given enough creativity. Creativity can in fact come up with new laws of nature. Isaac Newton and Albert Einstein did it. Their creativity was different in kind from that of the Wright Brothers and Elon Musk. Those innovators don’t change laws of nature; they are very tightly bound by them.

You see the impact of innovation overdose in responses to anything cautious of overoptimism in technology. Warp drive has to be real, right? It was already imagined back when William Shatner could do somersaults.

When the Solar Impulse aircraft achieved 400 miles non-stop, enthusiasts demanded solar passenger planes. Solar Impulse has the wingspan of an A380 (800 passengers) but weighs less than my car. When the Washington Post made the mildly understated point that solar powered planes were a long way from carrying passengers, an indignant reader scorned their pessimism: “I can see the WP headline from 1903: ‘Wright Flyer still a long way from carrying passengers’. Nothing like a good dose of negativity.”

Another reader responded, noting that theoretical limits would give a large airliner coated with cells maybe 30 kilowatts of sun power, but it takes about 100 megawatts to get off the runway. Another enthusiast, clearly innocent of physics, said he disagreed with this answer because it addressed current technology and “best case.” Here we see a disconnect between two understandings of best case, one pointing to hard limits imposed by nature, the other to soft limits imposed by manufacturing and limits of current engineering know-how.
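The hard-limit side of that exchange can be roughed out in a few lines. This is a sketch under stated assumptions, not the reader's own calculation: the 100 megawatt figure is the reader's, the 33.7% efficiency ceiling is the single-junction limit cited later in this post, and the 1,000 watts per square meter of peak sunlight is an assumed round number for a clear day with the sun overhead.

```python
# Assumed inputs; only the takeoff power and the efficiency ceiling come from the post.
PEAK_INSOLATION_W_PER_M2 = 1000.0  # assumption: rough clear-sky value at ground level
CELL_EFFICIENCY_LIMIT = 0.337      # single-junction limit cited later in the post
TAKEOFF_POWER_W = 100e6            # the reader's "about 100 megawatts"


def required_cell_area_m2(power_w: float,
                          insolation_w_per_m2: float = PEAK_INSOLATION_W_PER_M2,
                          efficiency: float = CELL_EFFICIENCY_LIMIT) -> float:
    """Area of sun-facing cells needed to deliver power_w watts of electricity."""
    return power_w / (insolation_w_per_m2 * efficiency)


if __name__ == "__main__":
    area = required_cell_area_m2(TAKEOFF_POWER_W)
    print(f"Cell area needed: {area:,.0f} square meters")
```

Under these assumptions the answer is roughly 0.3 square kilometers of perfectly aimed cells, hundreds of times the wing area of the largest airliners. No manufacturing improvement changes that, because neither the sunlight per square meter nor the efficiency ceiling is an engineering variable.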

What’s a law of nature?

Law of nature doesn’t have a tight definition. But in science it usually means generalities drawn from a very large body of evidence. Laws in this sense must be universal, omnipotent, and absolute – true everywhere for all time, no exceptions. Laws of nature don’t happen to be true; they have to be true (see footnote*). They are true in both main philosophical senses of “true”: correspondence and coherence. To the best of our ability, they correspond with reality from a god’s-eye perspective; and they cohere, in the sense that each gets along with every other law of nature, allowing a coherent picture of how the universe works. The laws are interdependent.

Now we’ve gotten laws wrong in the past, so our current laws may someday be overturned too. But such scientific disruptions are rare indeed – a big one in 1687 (Newton) and another in 1905 (Einstein). Lesser laws rely on – and are consistent with – greater ones. The laws of physics erect barriers to engineering advancement. Betting on new laws of physics – as cold fusion and free-energy investors have done – is a very long shot.

As an example of what flows from laws of nature, most gasoline engines (Otto cycle) have a top theoretical efficiency of about 47%. No innovative engineering prowess can do better. Material and temperature limitations reduce that further. All metals melt at some temperature, and laws of physics tell us we’ll find no new stable elements for building engines – even in distant galaxies. Moore’s law, by the way, is not in any sense a law in the way laws of nature are laws.
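For readers who want to see where a ceiling like this comes from, the ideal (air-standard) Otto cycle efficiency depends only on the compression ratio r and the heat-capacity ratio γ of the working gas: efficiency = 1 − r^(1 − γ). The sketch below is illustrative only. The compression ratio of 8 and γ = 1.3 (a stand-in for a hot fuel-air mixture rather than pure air) are assumptions, not values from this post.

```python
def otto_efficiency(compression_ratio: float, gamma: float) -> float:
    """Ideal Otto-cycle thermal efficiency: 1 - r**(1 - gamma)."""
    if compression_ratio <= 1.0 or gamma <= 1.0:
        raise ValueError("compression_ratio and gamma must both exceed 1")
    return 1.0 - compression_ratio ** (1.0 - gamma)


if __name__ == "__main__":
    # Assumed, illustrative inputs (not taken from the post).
    r, gamma = 8.0, 1.3
    print(f"r = {r}, gamma = {gamma}: {otto_efficiency(r, gamma):.1%}")
```

With these assumed inputs the result lands in the mid-40s percent, in the neighborhood of the figure above. The point is that the ceiling is set by two physical quantities, one limited by fuel knock and materials and the other by the gas itself, and not by cleverness in design.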

The Betz limit tells us that no windmill will ever convert more than 59.3% of the wind’s kinetic energy into electricity – not here, not on Jupiter, not with curvy carbon nanotube blades, not coated with dilithium crystals. This limit doesn’t come from measurement; it comes from deduction and the laws of nature. The Shockley-Queisser limit tells us no single-layer photovoltaic cell will ever convert more than 33.7% of the solar energy hitting it into electricity. Gaia be damned, but we’re stuck with physics, and physics trumps design thinking.
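The deduction behind the Betz number is short enough to check by machine. In the standard one-dimensional momentum model of a rotor (a textbook model, not something spelled out in this post), the fraction of wind power captured is 4a(1 − a)², where a is the fractional slowdown of the air at the rotor. The sketch below simply searches for the best possible a; the function names are made up for illustration.

```python
from fractions import Fraction


def power_coefficient(a: Fraction) -> Fraction:
    """Fraction of the wind's power captured for axial slowdown factor a."""
    return 4 * a * (1 - a) ** 2


def betz_limit(steps: int = 300) -> tuple[Fraction, Fraction]:
    """Search a = 0, 1/steps, ..., 1 for the largest power coefficient."""
    candidates = (Fraction(i, steps) for i in range(steps + 1))
    best_a = max(candidates, key=power_coefficient)
    return best_a, power_coefficient(best_a)


if __name__ == "__main__":
    a, cp = betz_limit()
    print(f"best slowdown a = {a}, captured fraction = {cp} = {float(cp):.1%}")
```

The maximum falls at a = 1/3 and gives 16/27, the 59.3% quoted above. Nothing in the calculation mentions blade shape or material, which is why no blade design can beat it.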

So while funding would grind to a halt if investors dove into the details of pn-junctions in chalcopyrite semiconductors, they probably should be cautious of startups that, as judged by a Physics 101 student, are found to flout any fundamental laws of nature. That is, unless they’re fixing to jump in early, ride the hype cycle to the peak of expectation, and then bail out before the other investors catch on. They’d never do that, right?

Solyndra’s sales figures

In Solyndra’s abundant autopsies we read that those crooks duped the DoE about sales volume and profits. An instant Wall Street darling, Solyndra was named one of the 50 most innovative companies by Technology Review. Later, the Solyndra scandal coverage never mentioned that the idea of cylindrical containers of photovoltaic cells with spaces between them was a dubious means of maximizing incident rays. Yes, some cells in a properly arranged array of tubes would always be perpendicular to the sun (duh), but the surface area of the cells within say 30 degrees of perpendicular to the sun is necessarily (not even physics, just geometry) only one sixth of those on the tube (2 * 30 / 360). The fact that the roof-facing part of the tubes catches some reflected light relies on there being space between the tubes, which obviously aren’t catching those photons directly. A two-layer tube grabs a few more stray photons, but… Sure, the DoE should have been more suspicious of Solyndra’s bogus bookkeeping; but there’s another lesson in this $2B Silicon Valley sinkhole. Their tech was bullshit.
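The geometry is easy to verify. The sketch below reproduces the one-sixth figure and adds one related number that is not in the original argument: how much direct sunlight a tube wrapped in cells catches per unit of cell area, compared with the same cells laid flat and facing the sun. It considers direct beam only and ignores reflected light, tube spacing and shading, so treat it as a rough illustration rather than a model of Solyndra's product.

```python
import math


def fraction_within(angle_deg: float) -> float:
    """Share of a tube's circumference whose outward normal lies within
    angle_deg of the direction to the sun."""
    return 2.0 * angle_deg / 360.0


def direct_beam_fraction(samples: int = 100_000) -> float:
    """Direct sunlight caught per unit of cell area on a tube, relative to
    the same area laid flat facing the sun. Averages max(cos(phi), 0)
    around the circumference; the unlit back half contributes zero."""
    total = 0.0
    for i in range(samples):
        phi = 2.0 * math.pi * (i + 0.5) / samples
        total += max(math.cos(phi), 0.0)
    return total / samples


if __name__ == "__main__":
    print(f"within 30 degrees of the sun: {fraction_within(30):.3f}")
    print(f"direct-beam capture vs flat panel: {direct_beam_fraction():.3f}")
```

The second number works out to 1/π, a little under a third, which is just the ratio of a cylinder's diameter to its circumference. Whatever reflected light adds back, the tube starts from a large geometric deficit.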

The story at Abound Solar was surprisingly similar, though more focused on bad engineering than bad science. Claims about energy, given a long history of swindlers, always warrant technical diligence. Upfront Ventures recently led a $20M B round for uBeam, maker of an ultrasonic charging system. Its high frequency sound vibrations travel across the room to a receiver that can run your iPhone or, someday, as one presentation reported, your flat screen TV, from a distance of four meters. Mark Cuban and Marissa Mayer took the plunge.

Now we can’t totally rule out uBeam’s claims, but simple physics screams out a warning. High frequency sound waves diffuse rapidly in air. And even if they didn’t, a point-source emitter (likely a good model for the uBeam transmitter) obeys the inverse-square law (see Johannes Kepler, 1596). At four meters, the signal is one sixteenth as strong as at one meter. Up close it would fry your brains. Maybe they track the target and focus a beam on it (sounds expensive). But in any case, sound-pressure-level regulations limit transmitter strength. It’s hard to imagine extracting more than a watt or so from across the room. Had Upfront hired a college kid for a few days, they might have spent more wisely and spared uBeam’s CEO the embarrassment of stepping down last summer after missing every target.
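The one-sixteenth figure is the inverse-square law at work, and the few lines below show it. This is a minimal sketch for an idealized point source radiating equally in all directions; it ignores absorption in air and any beam focusing, both of which the paragraph above mentions separately.

```python
def relative_intensity(distance_m: float, reference_m: float = 1.0) -> float:
    """Intensity at distance_m as a fraction of intensity at reference_m,
    for an idealized point source obeying the inverse-square law."""
    if distance_m <= 0 or reference_m <= 0:
        raise ValueError("distances must be positive")
    return (reference_m / distance_m) ** 2


if __name__ == "__main__":
    for d in (1.0, 2.0, 4.0):
        print(f"{d:.0f} m: {relative_intensity(d):.4f} of the 1 m intensity")
```

Doubling the distance quarters the signal, so a transmitter safe to stand next to delivers very little across a room, and one that delivers useful power across a room is not safe to stand next to.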

Even b-school criticism of Theranos focuses on the firm’s culture of secrecy, Holmes’ poor management practices, and bad hiring, skirting the fact that every med student knew that a drop of blood doesn’t contain enough of the relevant cells to give accurate results.

Homework: Water don’t flow uphill

Now I’m not saying all VC, MBAs, and private equity folk should study much physics. But they should probably know as much physics as I know about convertible notes. They should know that laws of nature exist, and that diligence is due for bold science/technology claims. Start here:

Newton’s 2nd law:

- Roughly speaking, force = mass times acceleration. F = ma.

- Important for cars. More here.

- Practical, though perhaps unintuitive, application: slow down on I-280 when it’s raining.
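The post doesn't say which consequence of F = ma it has in mind for rainy freeways; one plausible reading is braking distance. Tire friction supplies the stopping force, so the best available deceleration is the friction coefficient times g, and the car's mass cancels out. The friction coefficients and speed below are assumed round numbers for illustration, not measured values.

```python
G = 9.81  # gravitational acceleration, m/s^2
MPH_TO_M_PER_S = 0.44704


def braking_distance_m(speed_m_per_s: float, friction_coefficient: float) -> float:
    """Distance to stop from speed_m_per_s when friction limits deceleration.
    From F = ma with F = mu * m * g: a = mu * g, and d = v**2 / (2 * a)."""
    deceleration = friction_coefficient * G
    return speed_m_per_s ** 2 / (2.0 * deceleration)


if __name__ == "__main__":
    speed = 65 * MPH_TO_M_PER_S
    # Assumed, illustrative friction coefficients for dry and wet pavement.
    for label, mu in (("dry", 0.7), ("wet", 0.4)):
        print(f"{label}: {braking_distance_m(speed, mu):.0f} m to stop from 65 mph")
```

Because distance grows with the square of speed, a modest reduction in speed buys back much of what the wet road takes away.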

2nd Law of Thermodynamics:

- Entropy always increases. No process is thermodynamically reversible. More understandable versions came from Lord Kelvin and Rudolf Clausius.

- Kelvin: You can’t get any mechanical effect from anything by cooling it below the temperature of its surroundings.

- Clausius: Without adding energy, heat can never pass from a cold thing to a hot thing.

- Practical application: in an insulated room, leaving the refrigerator door open will raise the room’s temperature.

- American frontier version (Locomotive Engineering Vol XXII, 1899): “Water don’t flow uphill.”

_ __________ _

“If someone points out to you that your pet theory of the universe is in disagreement with Maxwell’s equations – then so much the worse for Maxwell’s equations. If it is found to be contradicted by observation – well, these experimentalists do bungle things sometimes. But if your theory is found to be against the Second Law of Thermodynamics I can give you no hope; there is nothing for it but to collapse in deepest humiliation.” – Arthur Eddington

*footnote: Critics might point out that the distinction between laws of physics (must be true) and mere facts (happen to be true) of physics seems vague, and that this vagueness robs any real meaning from the concept of laws of physics. Who decides what has to be true instead of what happens to be true? All copper in the universe conducts electricity seems like a law. All trees in my yard are oak does not. How arrogant was Newton to move from observing that f=ma in our little solar system to his proclamation that force equals mass times acceleration in all possible worlds. All laws of science (and all scientific progress) seem to rely on the logical fallacy of affirming the consequent. This wasn’t lost on the ancient anti-sophist Greeks (Plato), the cleverest of the early Christian converts (Saint Jerome) and perceptive postmodernists (Derrida). David Hume’s 1738 A Treatise of Human Nature methodically destroyed the idea that there is any rational basis for the kind of inductive inference on which science is based. But… Hume was no relativist or nihilist. He appears to hold, as Plato did in Theaetetus, that global relativism is self-undermining. In 1951, WVO Quine eloquently exposed the logical flaws of scientific thinking in Two Dogmas of Empiricism, finding real problems with distinctions between truths grounded in meaning and truths grounded in fact. Unpacking that a bit, Quine would say that it is pointless to ask whether f=ma is a law of nature or just a deep empirical observation. He showed that we can combine two statements appearing to be laws together in a way that yielded a statement that had to be merely a fact. Finally, from Thomas Kuhn’s perspective, deciding which generalized observation becomes a law is entirely a social process. Postmodernist and Strong Program adherents then note that this process is governed by local community norms. Cultural relativism follows, and ultimately decays into pure subjectivism: each of us has facts that are true for us but not for each other. Scientists and engineers have found that relativism and subjectivism aren’t so useful for inventing vaccines and making airplanes fly. Despite the epistemological failings, laws of nature work pretty well, they say.

The Road to Holacracy

Posted by Bill Storage in Innovation management on May 12, 2016

In 1960 South Korea’s GDP per capita was at the level of the poorest of African and Asian nations. Four decades later, Korea ranked high in the G-20 major economies. Many factors including a US-assisted education system and a carefully-planned export-oriented economic strategy made this possible. By some accounts the influence of Peter Drucker also played a key role, as attested by the prominent Korean businessman who changed his first name to “Mr. Drucker.” Unlike General Motors in the US, South Korean businesses embraced Drucker’s concept of the self-governing organization.

Drucker proposed this concept in The Future of Industrial Man and further developed it in his 1946 Concept of the Corporation, which GM’s CEO Alfred Sloan, despite Drucker’s general praise of GM, saw as a betrayal. Sloan would hear nothing of flattened hierarchies and decentralization.

Drucker was shocked by Sloan’s reaction to his book. With the emergence of large corporations, Drucker saw autonomous teams and empowered employees who would assume managerial responsibilities as the ultimate efficiency booster. He sought to establish trust and “create meaning” for employees, seeing this as key to what we now call “engagement.”

In the 1960s, Douglas McGregor of MIT used the term Theory Y to label the contrarian notion that democracy in the work force encourages workers to approach tasks without direct supervision, again leading to fuller engagement and higher productivity.

Neither GM nor any other big US firm welcomed self-management for the rest of the 20th century. Its ideals may have sounded overly socialistic to CEOs of the cold war era. A few consultancies promoted related concepts like shop-floor autonomy, skepticism of bureaucracy, and focus on intrinsic employee rewards in the 1980s, e.g., Peters and Waterman’s In Search of Excellence. Later poor performance by firms celebrated in Excellence (e.g. Wang and NCR) may have further discredited concepts like worker autonomy.

Recently, Daniel Pink’s popular Drive argued that self-management and worker autonomy lead to a sense of purpose and engagement, which motivate more than rank in a hierarchy and higher wages. Despite the cases made by these champions of flatter organizations, the approach that helped Korea become an economic power got few followers in the west.

In 2014 Zappos adopted holacracy, an organizational structure promoted by Brian J. Robertson, which is often called a flat organization. Following a big increase in turnover rate at Zappos, many concluded that holacracy left workers confused and, with no ladder to climb, flatly unmotivated. Tony Hsieh, Zappos’s CEO, denies that holacracy was the cause. Hsieh implemented holacracy because in his view, self-managed structures promote innovation while hierarchies stifle it; large companies tend to stagnate.

There’s a great deal of confusion about holacracy, and whether it in fact can accurately be called a flat structure. A closer look at holacracy helps clear this up.

To begin, note that holacracy.org itself states that work “is more structured with Holacracy than [with] conventional management.” Holacracy does not advocate a flat structure or a simple democracy. Authority, rather than being delegated, is granted to roles, potentially ephemeral, which are tied to specific tasks.

Much of the confusion around holacracy likely stems from Robertson’s articulation of its purpose and usage. His 2015 book, Holacracy: The New Management System for a Rapidly Changing World, is wordy and abstruse to the point of self-obfuscation. Its use of metaphors drawn from biology and natural processes suggests an envy for scientific status. There’s plenty of theory, with little evidential support. Robertson never mentions Drucker’s work on self-governance or his concept of management by objective. He never references Theory Y or John Case’s open-book management concept, Evan’s lattice structure, or any other relevant precedent for holacracy. Nor does he address any pre-existing argument against holacracy, e.g., Contingency Theory. But a weak book doesn’t mean a weak concept.

Holacracy.org’s statement of principles is crisp, and will surely appeal to anyone who has done time in the lower tiers of a corporate hierarchy. It envisions a corporate republic, rather than a pure democracy. I.e., authority is distributed across teams, and decisions are made locally at the lowest level possible. More importantly, the governance is based on a constitution, through which holacracy aims to curb tyranny of the majority and factionalism, and to ensure that everyone is bound to the same rule set.

Unfortunately, Holacracy’s constitution is bloated, arcane, and far too brittle to support the weight of a large corporation. Several times longer than the US constitution and laden with idiosyncratic usage of common terms, it reads like a California tax code authored by L. Ron Hubbard. It also seems to be the work of a single author rather than a constitutional congress. But again, a weak implementation does not impugn the underlying principles. Further, we cannot blame the concept for its mischaracterization by an unmindful tech press as being a flat and structureless process.

Holacracy is right about both the perils of flat structures (inability to allocate resources or resolve disputes, and the formation of factions) and the faults of silos (demotivation, principal-agent problem, and oppressive managers). But with a dense and rigid constitution and a purely inward focus (no attention to customers) it is a flawed version 1.0 product. It, or something like it – perhaps without the superfluous neologism – will be needed to handle imminent workforce changes. We are facing an engagement crisis, with 80% of the millennial workforce reporting a sense of disengagement and inability to exploit their skills at work. Millennials, says the Pew Research Center, resist paying dues, expect more autonomy while being comfortable in teams, resent taking orders, and expect to make an impact. With productivity tied to worker engagement, and millennial engagement hinging on autonomy, empowerment and trust, some of the silos need to come down. A constitutional system embodying self-governance seems like a good place to start.

Multidisciplinary

Posted by Bill Storage in Innovation management on May 6, 2016

In college, fellow cave explorer Ron Simmons found that the harnesses made for rock climbing performed very poorly underground. The cave environment shredded the seams of the harnesses from which we hung hundreds of feet off the ground in the underworld of remote southern Mexico. The conflicting goals of minimizing equipment expenses and avoiding death from equipment failure awakened our innovative spirit.

We wondered if we could build a better caving harness ourselves. Having access to UVA’s Instron testing machine, Ron hand-stitched some webbing junctions to compare the tensile characteristics of nylon and polyester topstitching thread. His experiments showed too much variation from irregularities in his stitching, so he bought a Singer industrial sewing machine. At that time Ron had no idea how to sew. But he mastered the machine and built fabulous caving harnesses. Ron later developed and manufactured hardware for ropework and specialized gear for cave diving. Curiosity about earth’s last great exploration frontier propelled our cross-disciplinary innovation. Curiosity, imagination and restlessness drive multidisciplinarity.

Soon we all owned sewing machines, making not only harnesses but wetsuits and nylon clothing. We wrote mapping programs to reduce our survey data and invented loop-closure algorithms to optimally distribute errors across a 40-mile cave survey. We learned geomorphology to predict the locations of yet undiscovered caves. Ron was unhappy with the flimsy commercial photo strobe equipment we used underground so he learned metalworking and the electrical circuitry needed to develop the indestructible strobe equipment with which he shot the above photo of me.

Fellow caver Bill Stone pushed multidisciplinarity further. Unhappy with conventional scuba gear for underwater caving, Bill invented a multiple-redundant-processor, gas-scrubbing rebreather apparatus that allowed 12-hour dives on a tiny “pony tank” oxygen cylinder. This device evolved into the Cis-Lunar Primary Life Support System later praised by the Apollo 11 crew. Bill’s firm, Stone Aerospace, later developed autonomous underwater vehicles under NASA Astrobiology contracts, for which I conducted probabilistic risk analyses. If there is life beneath the ice of Jupiter’s moon Europa, we’ll need robots like this to find it.

My years as a cave explorer and a decade as a systems engineer in aerospace left me comfortable crossing disciplinary boundaries. I enjoy testing the tools of one domain on the problems of another. The Multidisciplinarian is a hobby blog where I experiment with that approach. I’ve tried to use the perspective of History of Science on current issues in Technology and the tools of Science and Philosophy on Business Management and Politics.

Terms like interdisciplinary and multidisciplinary get a fair bit of press in tech circles. Their usage speaks to the realization that while intense specialization and deep expertise are essential for research, they are the wrong tools for product design, knowledge transfer, addressing customer needs, and everything else related to society’s consumption of the fruits of research and invention.

These terms are generally shunned by academia for several reasons. One reason is the abuse of the terms in fringe social sciences of the 80s and 90s. Another is that the university system, since the time of Aristotle’s Lyceum, has consisted of silos in which specialists compete for top position. Academic status derives from research, and research usually means specialization. Academic turf protection and the research grant system also contribute. As Gina Kolata noted in a recent NY Times piece, the reward system of funding agencies discourages dialog between disciplines. Disappointing results in cancer research are often cited as an example of sectoral research silos impeding integrative problem solving.

Besides the many examples of silo inefficiencies, we have a long history of breakthroughs made possible by individuals who mastered several skills and integrated them. Galileo, Gutenberg, Franklin and Watt were not mere polymaths. They were polymaths who did something more powerful than putting specialists together in a room. They put ideas together in a mind.

On this view, specialization may be necessary to implement a solution but is insufficient for conceiving of that solution. Lockheed Martin does not design aircraft by putting aerodynamicists, propulsion experts, and stress analysts together in a think tank. It puts them together, along with countless other specialists, and a cadre of integrators, i.e., systems engineers, for whom excessive disciplinary specialization would be an obstacle. Bill Stone has deep knowledge in several sciences, but his ARTEMIS project, a prototype of a vehicle that could one day discover life beneath an ice-covered moon of Jupiter, succeeded because of his having learned to integrate and synthesize.

A famous example from another field is the case of the derivation of the double-helix model of DNA by Watson and Crick. Their advantage in the field, mostly regarded as a weakness before their discovery, was their failure – unlike all their rivals – to specialize in a discipline. This lack of specialization allowed them to move conceptually between disciplines, fusing separate ideas from Avery, Chargaff and Wilkins, thereby scooping front runner Linus Pauling.

Dev Patnaik, leader of Jump Associates, is a strong advocate of the conscious blending of different domains to discover opportunities that can’t be seen through a single lens. When I spoke with Dev at a recent innovation competition, our conversation somehow drifted from refrigeration in Nairobi to Ludwig Wittgenstein. Realizing that, we shared a good laugh. Dev expresses pride in having hired MBA-sculptors, psychologist-filmmakers and the like. In a Fast Company piece, Dev suggested that beyond multidisciplinary teams, we need multidisciplinary people.

The silos that stifle innovation come in many forms, including company departments, academic disciplines, government agencies, and social institutions. The smarts needed to solve a problem are often at a great distance from the problem itself. Successful integration requires breaking down both institutional and epistemological barriers.

I recently overheard professor Olaf Groth speaking to a group of MBA students at Hult International Business School. Discussing the Internet of Things, Olaf told the group, “remember – innovation doesn’t go up, it goes across.” I’m not sure what context he had in mind, but it’s a great point regardless. The statement applies equally well to cognitive divides, academic disciplinary boundaries, and corporate silos.

Olaf’s statement reminded me of a very concrete example of a missed opportunity for cross-discipline, cross-division action at Gillette. Gillette acquired both Oral-B, the old-school toothbrush maker, and Braun, the electric appliance maker, in 1984. Gillette then acquired Duracell in 1996. But five years later, Gillette had not found a way into the lucrative battery-powered electric toothbrush market – despite having all the relevant technologies in house, but in different silos. They finally released the CrossAction (ironic name) brush in 2002; but it was inferior to well-established Colgate and P&G products. Innovation initiatives at Gillette were stymied by the usual suspects – principal-agent, misuse of financial tools in evaluating new product lines, misuse of platform-based planning, and holding new products to the same metrics as established ones. All that plus the fact that the divisions weren’t encouraged to look across. The three units were adjacent in a list of divisions and product lines in Gillette’s Strategic Report.

Multidisciplinarity (or interdisciplinarity, if you prefer) clearly requires more than a simple combination of academic knowledge and professional skills. Innovation and solving new problems require integrating and synthesizing different repositories of knowledge to frame problems in a real-world context rather than through the lens of a single discipline. This shouldn’t be so hard. After all, we entered the world free of disciplinary boundaries, and we know that fervent curiosity can dissolve them.

……

The average student emerges at the end of the Ph.D. program, already middle-aged, overspecialized, poorly prepared for the world outside, and almost unemployable except in a narrow area of specialization. Large numbers of students for whom the program is inappropriate are trapped in it, because the Ph.D. has become a union card required for entry into the scientific job market. – Freeman Dyson

Science is the organized skepticism in the reliability of expert opinion. – Richard Feynman

Curiosity is one of the permanent and certain characteristics of a vigorous intellect. – Samuel Johnson

The exhortation to defer to experts is underpinned by the premise that their specialist knowledge entitles them to a higher moral status than the rest of us. – Frank Furedi

It is a miracle that curiosity survives formal education. – Albert Einstein

An expert is one who knows more and more about less and less until he knows absolutely everything about nothing. – Nicholas Murray Butler

A specialist is someone who does everything else worse. – Ruggiero Ricci

Ron Simmons, 1954-2007

Leaders and Managers in Startups

Posted by Bill Storage in Innovation management on April 10, 2016

The distinction between leaders and managers has been worn to the bone in popular press, though with little agreement on what leadership is and whether leaders can be managers or vice versa. Further, a cult of leadership seems to exalt the most sadistic behaviors of charismatic leaders with no attention to key characteristics ascribed to leaders in most leader-manager dichotomies. Despite imprecision and ambiguity, a coarse distinction between leadership and management sheds powerful light on the needs of startups, as well as giving some advice and cautions about the composition of founder teams in startups.

Common distinctions between managers and leaders include a mix of behaviors and traits, e.g.:

Managers

- Process and execution-oriented

- Risk averse

- Allocates resources

- Bottom-line focus

- Command and control

- Schedule-driven

Leaders

- Risk tolerant

- Innovative

- Visionary

- Thinks long-term

- Charismatic

- Intuitive

The cult of leadership often also paints some leaders as dictatorial, authoritative and inflexible, seeing these characteristics as an acceptable price for innovative vision. Likewise, the startup culture often views management as being wholly irrelevant to startups. Warren Bennis, in Learning to Lead, gives neither concept priority, but holds that they are profoundly different. For Bennis, managers do things right and leaders do the right thing. Peter Drucker, from 1946 on, saw leadership mostly as another attribute of good management but acknowledged a difference. He characterized good managers as leaders and bad managers as functionaries. Drucker saw a common problem in large corporations; they’re over-managed and under-led. He defined leader simply as someone with followers. He thought trust was the only means by which people chose to follow a leader.

Accepting that the above distinctions are useful for discussion, it’s arguable that in early-stage startups leadership would trump management, simply because at that stage startups require innovation and risk tolerance to get off the ground. Any schedules or bottom-line considerations in the early days of a startup rely only on rough approximations. That said, for startups targeting more serious industry sectors – financial and healthcare, for example – the domain knowledge and organizational maturity of experienced managers could be paramount.

Over the past 15 years I’ve watched a handful of startups face the challenges and benefits of functional, experience, and cognitive diversity. Some of this was firsthand – once as a board director, once on an advisory board, and twice as an owner. I also have close friends with direct experience in founding teams composed partly of tech innovators and partly of early-retired managers from large firms. My thoughts below flow from observing these startups.

Failure is an option. Perfect is a verb.

Silicon Valley’s “fail early, fail often” mantra is misunderstood and misused. For some it is an excuse for recklessness with investors’ money. Others chant the mantra with bad counter-inductive logic; i.e., believing that exhausting all routes to failure will necessarily result in success. Despite the hype, the fail-early perspective has value that experienced managers often miss. A look at the experience profile of corporate managers shows why.

Managers are used to having things go according to plan. That doesn’t happen in startups. Managers in startups are vulnerable to committing to an initial plan. The leader/manager distinction has some power here. You cannot manage an army into battle; you can only lead one. Yes, startups are in battle.

For a manager, planning, scheduling, estimating and budgeting traditionally involve a great deal of historical data with low variability. This is more true in the design/manufacture world than for managers who oversee product development (see Donald Reinertsen’s works for more on this distinction). But startups are much more like product development or R&D than they are like manufacturing. In manufacturing, spreadsheets and projections tend to be mostly right. In startups they are today’s best guess, which must be continually revised. Discovery-driven planning, as promoted by MacMillan and McGrath, might be a good starting point. If “fail early” rubs you the wrong way, understand it to mean disproving erroneous assumptions early, before you cast them in stone, only to have the market point them out to you.

Managers, having joined a startup, may tend to treat wild guesses, once entered into a spreadsheet, as facts, or may be overly confident in predictions derived from them. This is particularly critical for startups with complex enterprise products – just the kind of startup where corporate experience is most likely to be attractive. Such startups are prone to high costs and long development cycles. The financing Valley of Death claims many victims who budget against an optimistic release schedule and revenue forecast. It’s a reckless move with few possible escape routes, often resulting in desperate attempts to create a veneer of success on which to base another seed round.

In startups, planning must be more about prioritizing than about scheduling. Startups must treat development plans as hypotheses to be continually refined. As various generals have said, essential as battle plans are, none has ever survived contact with the enemy. The Lean Startup’s build-measure-learn concept – which is just an abbreviated statement of the hypothetico-deductive interpretation of scientific method – is a good guide, but one that may require a mindset shift for most managers.

Zero defects

For Philip Crosby, Zero Defects was not a motivational program. It was to be taken literally. It meant everyone should do things right the first time. That mindset, better embodied in William Deming’s statistical process control methodology, is great for manufacturing, as is obvious from results of his work with Japanese industries in the 1950s. Whether that mindset was useful to white collar workers in America, in the form of the Deming System and later Six Sigma (e.g., at Motorola, GE, and Ford), is hotly debated. Qualpro, which authored a competing quality program, reported a while back that 91% of large firms with Six Sigma programs have trailed the S&P 500 after implementing them. Some say the program was effective for its initial purpose, but doesn’t scale to today’s needs.

Whatever its efficacy, most experienced managers have been schooled in Zero Defects or something similar. Its focus on process excellence emphasizing precision, consistency, and detailed analysis seems at odds with the innovation, adaptability, and accommodation of failure we see in successful startups.

Focus on doing it right the first time in a startup will lead to excessively detailed plans containing unreliable estimates and a tendency toward unwarranted confidence in those estimates.

Motivation and hierarchy

Corporate managers are used to having clearly defined goals and plenty of resources. Startups have neither. This impacts team dynamics.

Successful startup members, biographers tell us, are self-motivated. They share a vision and are closely aligned; their personal goals match the startup’s goals. In most corporations, managers control, direct, and supervise employees whose interests are not closely aligned with those of the corporation. Corporate motivational tools, applied to startups, reek of insincerity and demotivate teams. Uncritical enthusiasm is dangerous in a startup, especially for the enthusiasts. It can blind crusaders to fatal flaws in a product, business model, marketing plan or strategy. Aspirational faith is essential, but hope is not a strategy.

An ex-manager in a CEO leadership role might also unduly don the cloak of management by viewing a small startup team of investing founders as employees. It leads to factions, resentment, and distraction from the shared objective.

Startup teamwork requires clear communications and transparency. Clinkle’s Lucas Duplan notwithstanding, I think former corporate managers are far more likely to try to filter and control communications in a startup than those without that experience. Managing communications and information flow maintains order in a corporation; it creates distrust in a startup. Leading requires followers who trust you, says Drucker.

High degrees of autonomy and responsibility in startups invariably lead to disagreements. Some organizational psychologists say conflict is a tool. While that may be pushing it, most would agree that conflict is an indication of an opportunity to work swiftly toward a more common understanding of problem definition and solutions. In the traditional manager/leader distinction, leaders put conflict front and center, seeing it as a valuable indicator of an unmet organizational need. Managers, using a corporate approach, may try to take care of things behind the scene or one-on-one, thereby preventing loss of productivity in those least engaged in the conflict. Neutralizing dissenting voices in the name of alignment likely suppresses exactly the conversation that needs to occur. Make conflict constructive rather than suppressing it.

Strategy

I’m wary of ascribing wisdom to hoodie-wearing Ferrari drivers; nevertheless, I’ve cringed to see mature businessmen make strategic blunders that no hipster CEO would make. This says nothing about intellect or maturity, but much about experience and skills acquired through immersion in startupland. I’ll give a few examples.

Believing that seed funding increases your chance of an A round: Most young leaders of startups know that while the amount of seed funding has increased steadily and dramatically in recent years, the number of A rounds has not. By some measures it has decreased.

Accepting VC money in a seed round: This is a risky move with almost no upside. It broadcasts a message of lukewarm interest by a high-profile investor. When it’s time for an A round, every other potential investor will be asking why the VC who gave you seed money has not invested further. Even if the VC who supplied seed funding entertains an A round, this will likely result in a lower valuation than would result from a competitive process.

Looking like a manager, not a leader: Especially when seeking funding, touting your Six Sigma or process improvement training, a focus on organizational design, or your supervisory skills will raise a big red flag.

Overspending too early: Managers are used to having resources. They often spend too early and give away too much equity for minor early contributions.

Lack of focus/no target customer: Thinking you can be all things to all customers in all markets if you just add more features and relationships is a mistake few hackers would make. Again, former executives are used to having resources and living in a world where cost overruns aren’t fatal.

“Selling” to investors: VCs are highly skilled at detecting hype. Good ones bet more on the jockey than the horse. You want them as a partner, not a customer; so don’t treat them like one.

___

Incommensurability and the Design-Engineering Gap

Posted by Bill Storage in Engineering, Innovation management, Interdisciplinary teams on April 4, 2014

Those who conceptualize products – particularly software – often have the unpleasant task of explaining their conceptual gems to unimaginative, sanctimonious engineers entrenched in the analytic mire of in-the-box thinking. This communication directs the engineers to do some plumbing and flip a few switches that get the concept to its intended audience or market… Or, at least, this is how many engineers think they are viewed by designers.

Truth is, engineers and creative designers really don’t speak the same language. This is more than just a joke. Many posts here involve philosopher of science, Thomas Kuhn. Kuhn’s idea of incommensurability between scientific paradigms also fits the design-engineering gap well. Those who claim the label, designers, believe design to be a highly creative, open-ended process with no right answer. Many engineers, conversely, understand design – at least within their discipline – to mean a systematic selection of components progressively integrated into an overall system, guided by business constraints and the laws of nature and reason. Disagreement on the meaning of design is just the start of the conflict.

Kuhn concluded that the lexicon of a discipline constrains the problem space and conceptual universe of that discipline. I.e., there is no fundamental theory of meaning that applies across paradigms. The meaning of expressions inside a paradigm complies only with the rules of that paradigm. Says Kuhn, “Conceptually, the world is our representation of our niche, the residence of the particular human community with whose members we are currently interacting” (The Road Since Structure, 1993, p. 103). Kuhn was criticized for exaggerating the extent to which a community’s vocabulary and word usage constrains the thoughts they are able to think. Kuhn saw this condition as self-perpetuating, since the discipline’s constrained thoughts then eliminate any need for expansion of its lexicon. Kuhn may have overplayed his hand on incommensurability, but you wouldn’t know it from some software-project kickoff meetings I’ve attended.

This short sketch, The Expert, written and directed by Lauris Beinerts, portrays design-engineering incommensurability from the perspective of the sole engineer in a preliminary design meeting.

See also: Debbie Downer Doesn’t Do Design

On Imperatives for Innovation

Posted by Bill Storage in Innovation management, Multidisciplinarians on December 9, 2013

Last year, innovation guru Julian Loren introduced me to Kim Chandler McDonald, who was researching innovators and how they think. Julian co-founded the Innovation Management Institute, and has helped many Fortune 500 firms with key innovation initiatives. I’ve had the privilege of working with Julian on large game conferences (gameferences) that prove just how quickly collaborators can dissolve communication barriers and bridge disciplines. Out of this flows proof that design synthesis, when properly facilitated, can emerge in days, not years. Kim is founder/editor of the “Capital I” Innovation Interview Series. She has built a far-reaching network of global thought leaders that she studies, documents, encourages and co-innovates with. I was honored to be interviewed for her 2013 book, !nnovation – how innovators think, act, and change our world. Find it on Amazon, or the online enhanced edition at innovationinterviews.com (also flatworld.me) to see what makes innovators like Kim, Julian and a host of others tick. In light of my recent posts on great innovators in history, reinvigorated by Bruce Vojak’s vibrant series on the same topic, Kim has approved my posting an excerpt of her conversations with me here.

How do you define Innovation?

Well that term is a bit overloaded these days. I think traditionally Innovation meant the creation of better or more effective products, services, processes, & ideas. While that’s something bigger than just normal product refinement, I think it pertained more to improvement of an item in a category rather than invention of a new category. More recently, the term seems to indicate new categories and radical breakthroughs and inventions. It’s probably not very productive to get too hung up on differentiating innovation and invention.

Also, many people, perhaps following Clayton Christensen, have come to equate innovation with market disruption, where the radical change results in a product being suddenly available to a new segment because some innovator broke a price or user-skill barrier. Then suddenly, you’re meeting previously unmet customer needs, generating a flurry of consumption and press, which hopefully stimulates more innovation. That seems a perfectly good definition too.

Neither of those definitions seems to capture the essence of the iPhone, the famous example of successful innovation, despite its really being “merely” a collection of optimizations of prior art. So maybe we should expand the definitions to include things that improve quality of life very broadly or address some compelling need that we didn’t yet know we had – things that just have a gigantic “wow” factor.

I think there’s also room for seeing innovation as a new way of thinking about something. That doesn’t get much press; but I think it’s a fascinating subject that interacts with the other definitions, particularly in the sense that there are sometimes rather unseen innovations behind the big visible ones. Some innovations are innovations by virtue of spurring a stream of secondary ones. This cascade can occur across product spaces and even across disciplines. We can look at Galileo, Kepler, Copernicus and Einstein as innovators. These weren’t the plodding, analytical types. All went far out on a limb, defying conventional wisdom, often with wonderful fusions of logic, empiricism and wild creativity.

Finally, I think we have to include innovations in government, ethics and art. They occasionally do come along, and are important. Mankind went a long time without democracy, women’s rights or vanishing point perspective. Then some geniuses came along and broke with tradition – in a rational yet revolutionary way that only seemed self-evident after the fact. They fractured the existing model and shifted the paradigm. They innovated.

How important do you envisage innovation going forward?

Almost all businesses identify innovation as a priority, but despite the attention given to the topic, I think we’re still struggling to understand and manage it. I feel like the information age – communications speed and information volume – has profoundly changed competition in ways that we haven’t fully understood. I suppose every era is just like its predecessor in the sense that it perceives itself to be completely unlike its predecessors. That said, I think there’s ample evidence that a novel product with high demand, patented or not, gets you a much shorter time to milk the cow than it used to. Business, and hopefully our education system, is going to need to face the need for innovation (whether we continue with that term or not) much more directly and centrally, not as an add-on, strategy du jour, or department down the hall.

What do you think is imperative for Innovation to have the best chance of success; and what have you found to be the greatest barrier to its success?

A lot has been written about nurturing innovation and some of it is pretty good. Rather than putting design or designers on a pedestal, create an environment of design throughout. Find ways to reward design, and reward well.

One aspect of providing for innovation seems underrepresented in print – planning for the future by our education system and larger corporations. Innovating in all but the narrowest of product spaces – or idea spaces for that matter – requires multiple skills and people who can integrate and synthesize. We need multidisciplinarians, interdisciplinary teams and top-level designers, coordinators and facilitators. Despite all our talk and interest in synthesis as opposed to analysis – and our interest in holism and out-of-the-box thinking – we’re still praising ultra-specialists and educating too many of them. Some circles use the term tyranny of expertise. It’s probably applicable here.

I’ve done a fair amount of work in the world of complex systems – aerospace, nuclear, and pharmaceutical manufacture. In aerospace you cannot design an aircraft by getting a hundred specialists, one expert each in propulsion, hydraulics, flight controls, software, reliability, etc., and putting them in a room for a year. You get an airplane design by combining those people plus some who are generalists that know enough about each of those subsystems and disciplines to integrate them. These generalists aren’t jacks of all trades and masters of none, nor are they mere polymaths; they’re masters of integration, synthesis and facilitation – expert generalists. The need for such a role is very obvious in the case of an airplane, much less obvious in the case of a startup. But modern approaches to product and business model innovation benefit tremendously from people trained in multidisciplinarity.

I’m not sure if it’s the greatest barrier, but it seems to me that a significant barrier to almost any activity that combines critical thinking and creativity is to write a cookbook for that activity. We are still bombarded by consultancies, authors and charismatic speakers who capitalize on innovation by trivializing it. There’s a lot of money made by consultancies who reduce innovation to an n-step process or method derived from shallow studies of past success stories. You can get a lot of press by jumping on the erroneous and destructive left-brain/right-brain model. At best, it raises awareness, but the bandwagon is already full. I don’t think lack of interest in innovation is a problem; lack of enduring commitment probably is. Jargon-laden bullet-point lists have taken their toll. For example, it’s hard to even communicate meaningfully about certain tools or approaches to innovation using terms like “design thinking” or “systems thinking” because they’ve been diluted and redefined into meaninglessness.

What is your greatest strength?

Perspective.

What is your greatest weakness?

Brevity, on occasion.

Great Innovative Minds: A Discord on Method

Posted by Bill Storage in Innovation management, Multidisciplinarians on November 19, 2013

Great minds do not think alike. Cognitive diversity has served us well. That’s not news to those who study innovation; but I think you’ll find this to be a different take on the topic, one that gets at its roots.

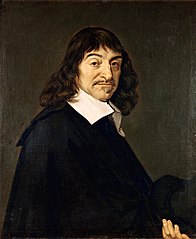

The two main figures credited with setting the scientific revolution in motion did not agree at all on what the scientific method actually was. It’s not that they differed on the finer points; they disagreed on the most basic aspect of what it meant to do science – though they didn’t yet use that term. At the time of Francis Bacon and Rene Descartes, there were no scientists. There were natural philosophers. This distinction is important for showing just how radical and progressive Descartes and Bacon were.

In Discourse on Method, Descartes argued that philosophers, over thousands of years of study, had achieved absolutely nothing. They pursued knowledge, but they had searched in vain. Descartes shared some views with Aristotle, but denied Aristotelian natural philosophy, which had been woven into Christian beliefs about nature. For Aristotle, rocks fell to earth because the natural order is for rocks to be on the earth, not above it – the Christian version of which was that it was God’s plan. In medieval Europe truths about nature were revealed by divinity or authority, not discovered. Descartes and Bacon were both devout Christians, but believed that Aristotelian philosophy of nature had to go. Observing that there is no real body of knowledge that can be claimed by philosophy, Descartes chose to base his approach to the study of nature on mathematics and reason. A mere 400 years after Descartes, we have trouble grasping just how radical this notion was. Descartes believed that the use of reason could give us knowledge of nature, and thus give us control over nature. His approach was innovative, in the broad sense of that term, which I’ll discuss below. Observation and experience, however, in Descartes’ view, could be deceptive. They had to be subdued by pure reason. His approach can be called rationalism. He sensed that we could use rationalism to develop theories – predictive models – with immense power, which would liberate mankind. He was right.

Francis Bacon, Descartes’ slightly older counterpart in the scientific revolution, was a British philosopher and statesman who became attorney general in 1613 under James I. He is now credited with being the father of empiricism, the hands-on, experimental basis for modern science, engineering, and technology. Bacon believed that acquiring knowledge of nature had to be rooted in observation and sensory experience alone. Do experiments and then decide what it means. Infer conclusions from the facts. Bacon argued that we must quiet the mind and apply a humble, mechanistic approach to studying nature and developing theories. Reason biases observation, he said. In this sense, the theory-building models of Bacon and Descartes were almost completely opposite. I’ll return to Bacon after a clarification of terms needed to make a point about him.

Innovation has many meanings. Cicero said he regarded it with great suspicion. He saw innovation as the haphazard application of untested methods to important matters. For Cicero, innovators were prone to understating the risks and overstating the potential gains to the public, while the innovators themselves had a more favorable risk/reward quotient. If innovation meant dictatorship for life for Julius Caesar after 500 years of self-governance by the Roman people, Cicero’s position might be understandable.

Today, innovation usually applies specifically to big changes in commercial products and services, involving better consumer value, whether by new features, reduced prices, reduced operator skill level, or breaking into a new market. Peter Drucker, Clayton Christensen and the tech press use innovation in roughly this sense. It is closely tied to markets, and is differentiated from invention (which may not have market impact), improvement (may be merely marginal), and discovery.

That business-oriented definition of innovation is clear and useful, but it leaves me with no word for what earlier generations meant by innovation. In a broader sense, it seems fair that innovation also applies to what vanishing point perspective brought to art during the renaissance. John Locke, a follower of both Bacon and Descartes, and later Thomas Jefferson and crew, conceived of the radical idea that a nation could govern itself by the application of reason. Discovery, invention and improvement don’t seem to capture the work of Locke and Jefferson either. Innovation seems the best fit. So for discussion purposes, I’ll call this innovation in the broader sense as opposed to the narrower sense, where it’s tied directly to markets.

In the broader sense, Descartes was the innovator of his century. But in the narrow sense (the business and markets sense), Francis Bacon can rightly be called the father of innovation – and its first vocal advocate. Bacon envisioned a future where natural philosophy (later called science) could fuel industry, prosperity and human progress. Again, it’s hard to grasp how radical this was; but in those days the dominant view was that mankind had reached its prime in ancient times, and was on a downhill trajectory. Bacon’s vision was a real departure from the reigning view that philosophy, including natural philosophy, was stuff of the mind and the library, not a call to action or a route to improving life. Historian William Hepworth Dixon wrote in 1862 that everyone who rides in a train, sends a telegram or undergoes a painless surgery owes something to Bacon. In 1620, Bacon made, in The Great Instauration, an unprecedented claim in the post-classical world:

“The explanation of which things, and of the true relation between the nature of things and the nature of the mind … may spring helps to man, and a line and race of inventions that may in some degree subdue and overcome the necessities and miseries of humanity.”

In Bacon’s view, such explanations would stem from a mechanistic approach to investigation; and it must steer clear of four dogmas, which he called idols. Idols of the tribe are the set of ambient cultural prejudices. He cites our tendency to respond more strongly to positive evidence than to negative evidence, even if they are equally present; we leap to conclusions. Idols of the cave are one’s individual preconceptions that must be overcome. Idols of the theater refer to dogmatic academic beliefs and outmoded philosophies; and idols of the marketplace are those prejudices stemming from social interactions, specifically semantic equivocation and terminological disputes.

Descartes realized that if you were to strictly follow Bacon’s method of fact collecting, you’d never get anything done. Without reasoning out some initial theoretical model, you could collect unrelated facts forever with little chance of developing a usable theory. Descartes also saw Bacon’s flaw in logic to be fatal. Bacon’s method (pure empiricism) commits the logical sin of affirming the consequent. That is, the hypothesis, if A then B, is not made true by any number of observations of B. This is because C, D or E (and infinitely more letters) might also cause B, in the absence of A. This logical fallacy had been well documented by the ancient Greeks, whom Bacon and Descartes had both studied. Descartes pressed on with rationalism, developing tools like analytic geometry and symbolic logic along the way.
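For readers who like to see the logic checked mechanically, here is a minimal sketch in Python of why affirming the consequent fails. It simply enumerates every truth assignment for A and B. The function names (`implies`, `is_valid`, `counterexamples`) are my own illustrative choices, not anything from Bacon, Descartes, or a standard library.

```python
from itertools import product


def implies(p, q):
    """Material implication: false only when p is true and q is false."""
    return (not p) or q


def affirming_the_consequent(a, b):
    """Argument form: from (if A then B) and B, conclude A."""
    return implies(implies(a, b) and b, a)


def modus_ponens(a, b):
    """Argument form: from (if A then B) and A, conclude B."""
    return implies(implies(a, b) and a, b)


def counterexamples(argument):
    """List every (A, B) assignment under which the argument form fails."""
    return [(a, b) for a, b in product([True, False], repeat=2)
            if not argument(a, b)]


def is_valid(argument):
    """An argument form is valid only if it has no counterexamples."""
    return not counterexamples(argument)


print(is_valid(modus_ponens))                     # True
print(is_valid(affirming_the_consequent))         # False
print(counterexamples(affirming_the_consequent))  # [(False, True)]
```

The single counterexample, A false and B true, is the case where something other than A (the C, D or E above) produced B. No number of observations of B rules that case out.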

Interestingly, both Bacon and Descartes were, from our perspective, rather miserable scientists. Bacon denied Copernicanism, refused to accept Kepler’s conclusion that planet orbits were elliptical, and argued against William Harvey’s conclusion that the heart pumped blood to the brain through a circulatory system. Likewise, by avoiding empiricism, Descartes reached some very wrong conclusions about space, matter, souls and biology, even arguing that non-human animals must be considered machines, not organisms. But their failings were all corrected by time and the approaches to investigation they inaugurated. The tension between their approaches didn’t go unnoticed by their successors. Isaac Newton took a lot from Bacon and a little from Descartes; his rival Gottfried Leibniz took a lot from Descartes and a little from Bacon. Both were wildly successful. Science made the best of it, striving for deductive logic where possible, but accepting the problems of Baconian empiricism. Despite reliance on affirming the consequent, inductive science seems to work rather well, especially if theories remain open to revision.

Bacon’s idols seem to be as relevant to the boardroom as they were to the court of James I. Seekers of innovation, whether in the classroom or in the enterprise, might do well to consider the approaches and virtues of Bacon and Descartes, of contrasting and fusing rationalism and observation. Bacon and Descartes envisioned a brighter future through creative problem-solving. They broke the bonds of dogma and showed that a new route forward was possible. Let’s keep moving, with a diversity of perspectives, interpretations, and predictive models.

You’re So Wrong, Richard Feynman

Posted by Bill Storage in Innovation management, Multidisciplinarians on November 2, 2013

“Philosophy of science is about as useful to scientists as ornithology is to birds”

This post is more thoughts on the minds of interesting folk who can think from a variety of perspectives, inspired by Bruce Vojak’s Epistemology of Innovation articles. This is loosely related to systems thinking, design thinking, or – more from my perspective – the consequence of learning a few seemingly unrelated disciplines that end up being related in some surprising and useful way.

Richard Feynman ranks high on my hero list. When I was a teenager I heard a segment of an interview with him where he talked about being a young boy with a ball in a wagon. He noticed that when he abruptly pulled the wagon forward, the ball moved to the back of the wagon, and when he stopped the wagon, the ball moved forward. He asked his dad why it did that. His dad, who was a uniform salesman, put a slightly finer point on the matter. He explained that the ball didn’t really move backward; it moved forward, just not as fast as the wagon was moving. Feynman’s dad told young Richard that no one knows why a ball behaves like that. But we call it inertia. I found both points wonderfully illuminating. On the ball’s motion, there’s more than one way of looking at things. Mel Feynman’s explanation of the ball’s motion had gentle but beautiful precision, calling up thoughts about relativity in the simplest sense – motion relative to the wagon versus relative to the ground. And his statement, “we call it inertia,” got me thinking quite a lot about the difference between knowledge about a thing and the name of a thing. It also recalls Newton vs. the Cartesians in my recent post. The name of a thing holds no knowledge at all.

Feynman was almost everything a hero should be – nothing like the stereotypical nerd scientist. He cussed, pulled gags, picked locks, played drums, and hung out in bars. His thoughts on philosophy of science come to mind because of some of the philosophy-of-science issues I touched on in previous posts on Newton and Galileo. Unlike Newton, Feynman was famously hostile to philosophy of science. The ornithology quote above is attributed to him, though no one seems to have a source for it. If not his, it could be. He regularly attacked philosophy of science in equally harsh tones. “Philosophers are always on the outside making stupid remarks,” he is quoted as saying in his biography by James Gleick.

My initial thoughts were that I can admire Feynman’s amazing work and curious mind while thinking he was terribly misinformed and hypocritical about philosophy. I’ll offer a slightly different opinion at the end of this. Feynman actually engaged in philosophy quite often. You’d think he’d at least try to do a good job of it. Instead he seems pretty reckless. I’ll give some examples.

Feynman, along with the rest of science, was assaulted by the wave of postmodernism that swept university circles in the ’60s. On its front line were Vietnam protesters who thought science was a tool of evil corporations, feminists who thought science was a male power play, and Foucault-inspired “intellectuals” who denied that science had any special epistemic status. Feynman dismissed all this as a lot of baloney. Most of it was, of course. But some postmodern criticism of science was a reaction – though a gross overreaction – to a genuine issue that Kuhn elucidated – one that had been around since Socrates debated the sophists. Here’s my best Reader’s Digest version.

All empirical science relies on affirming the consequent, something seen as a flaw in deductive reasoning. Science is inductive, and there is no deductive justification for induction (nor is there any inductive justification for induction – a topic way too deep for a blog post). Justification actually rests on a leap of inductive faith and consensus among peers. But it certainly seems reasonable for scientists to make claims of causation using what philosophers call inference to the best explanation. It certainly seems that way to me. However, defending that reasoning – that absolute foundation for science – is a matter of philosophy, not one of science.

This issue edges us toward a much more practical one, something Feynman dealt with often. What’s the difference between science and pseudoscience (the demarcation question)? Feynman had a lot of room for Darwin but no room at all for the likes of Freud or Marx. All claimed to be scientists. All had theories. Further, all had theories that explained observations. Freud and Marx’s theories actually had more predictive success than did those of Darwin. So how can we (or Feynman) call Darwin a scientist but Freud and Marx pseudoscientists without resorting to the epistemologically unsatisfying argument made famous by Supreme Court Justice Potter Stewart: “I can’t define pornography but I know it when I see it”? Neither Feynman nor anyone else can solve the demarcation issue in any convincing way, merely by using science. Science doesn’t work for that task.

It took Karl Popper, a philosopher, to come up with the counterintuitive notion that neither predictive success nor confirming observations can qualify something as science. In Popper’s view, falsifiability is the sole criterion for demarcation. For reasons that take a good philosopher to lay out, Popper can be shown to give this criterion a bit too much weight, but it has real merit. When Einstein predicted that the light from distant stars actually bends around the sun, he made a bold and solidly falsifiable claim. He staked his whole relativity claim on it. If, in an experiment during the next solar eclipse, light from stars behind the sun didn’t curve around it, he’d admit defeat. Current knowledge of physics could not support Einstein’s prediction. But they did the experiment (the Eddington expedition) and Einstein was right. In Popper’s view, this didn’t prove that Einstein’s gravitation theory was true, but it failed to prove it wrong. And because the theory was so bold and counterintuitive, it got special status. We’ll assume it true until it is proved wrong.

Marx and Freud failed this test. While they made a lot of correct predictions, they also made a lot of wrong ones. Predictions are cheap. That is, Marx and Freud could explain too many results (e.g., aggressive personality, shy personality or comedian) with the same cause (e.g., abusive mother). Worse, they were quick to tweak their theories in the face of counterevidence, resulting in their theories being immune to possible falsification. Thus Popper demoted them to pseudoscience. Feynman cites the falsification criterion often. He never names Popper.

The demarcation question has great practical importance. Should creationism be taught in public schools? Should Karmic reading be covered by your medical insurance? Should the American Parapsychological Association be admitted to the American Association for the Advancement of Science (it was in 1969)? Should cold fusion research be funded? Feynman cared deeply about such things. Science can’t decide these issues. That takes philosophy of science, something Feynman thought was useless. He was so wrong.

Finally, perhaps most importantly, there’s the matter of what activity Feynman was actually engaged in. Is quantum electrodynamics a science or is it philosophy? Why should we believe in gluons and quarks more than angels? Many of the particles and concepts of Feynman’s science are neither observable nor falsifiable. Feynman opines that there will never be any practical use for knowledge of quarks, so he can’t appeal to utility as a basis for the scientific status of quarks. So shouldn’t quantum electrodynamics (at least with the level of observability it had when Feynman gave this opinion) be classified as metaphysics, i.e., philosophy, rather than science? By Feynman’s demarcation criteria, his work should be called philosophy. I think his work actually is science, but the basis for that subtle distinction is in philosophy of science, not science itself.

While degrading philosophy, Feynman practices quite a bit of it, perhaps unconsciously, often badly. Not Dawkins-bad, but still pretty bad. His 1966 speech to the National Science Teachers Association entitled “What Is Science?” is a case in point. He hints at the issue of whether science is explanatory or merely descriptive, but wanders rather aimlessly. I was ready to offer that he was a great scientist and a bad accidental philosopher when I stumbled on a talk where Feynman shows a different side, his 1956 address to the Engineering and Science college at the California Institute of Technology, entitled, “The Relation of Science and Religion.”

He opens with an appeal to the multidisciplinarian:

“In this age of specialization men who thoroughly know one field are often incompetent to discuss another. The great problems of the relations between one and another aspect of human activity have for this reason been discussed less and less in public. When we look at the past great debates on these subjects we feel jealous of those times, for we should have liked the excitement of such argument.”

Feynman explores the topic through epistemology, metaphysics, and ethics. He talks about degrees of belief and claims of certainty, and the difference between Christian ethics and Christian dogma. He handles all this delicately and compassionately, with charity and grace. He might have delivered this address with more force and efficiency, had he cited Nietzsche, Hume, and Tillich, whom he seems to unknowingly parallel at times. But this talk was a whole different Feynman. It seems that when formally called on to do philosophy, Feynman could indeed do a respectable job of it.

I think Richard Feynman, great man that he was, could have benefited from Philosophy of Science 101; and I think all scientists and engineers could. In my engineering schooling, I took five courses in calculus, one in linear algebra, one in non-Euclidean geometry, and two in differential equations. Substituting a philosophy class for one of those Dif EQ courses would make better engineers. A philosophy class of the quantum electrodynamics variety might suffice.

————

“It is a great adventure to contemplate the universe beyond man, to think of what it means without man – as it was for the great part of its long history, and as it is in the great majority of places. When this objective view is finally attained, and the mystery and majesty of matter are appreciated, to then turn the objective eye back on man viewed as matter, to see life as part of the universal mystery of greatest depth, is to sense an experience which is rarely described. It usually ends in laughter, delight in the futility of trying to understand.” – Richard Feynman, The Relation of Science and Religion

Just a Moment, Galileo

Posted by Bill Storage in Engineering, Innovation management on October 29, 2013

Bruce Vojak’s wonderful piece on innovation and the minds of Newton and Goethe got me thinking about another 17th century innovator. Like Newton, Galileo was a superstar in his day – a status he still holds. He was the consummate innovator and iconoclast. I want to take a quick look at two of Galileo’s errors, one technical and one ethical, not to try to knock the great man down a peg, but to see what lessons they can bring to the innovation, engineering and business of this era.

Less well known than his work with telescopes and astronomy was Galileo’s work in mechanics of solids. He seems to have been the first to explicitly identify that the tensile strength of a beam is proportional to its cross-sectional area, but his theory of bending stress was way off the mark. He applied similar logic to cantilever beam loading, getting very incorrect results. Galileo’s bending stress illustration is shown below (you can skip over the physics details, but they’re not all that heavy).